Service Blueprinting for Better Collaboration in Human-Centric AI: The Design of a Digital Scribe for Orthopedic Consultations

Reka Magyari and Fernando Secomandi *

Delft University of Technology, Delft, The Netherlands

This case study explored the application of the service blueprinting method during the conceptual design of an AI-enabled digital scribe—an intelligent documentation support system—tailored for orthopedic consultations. In this paper, we discuss how this method can be used to enhance collaboration between user experience designers and machine learning engineers. Specifically, we show how service blueprinting can help innovation teams create a common foundation for understanding design challenges, enrich data with user-related insights, and highlight the value of AI capabilities as an organizational resource. Building on recent academic research in the field of human-computer interaction, our findings provide additional insights for addressing the design challenges associated with developing human-centric AI and incorporating service design approaches.

Keywords – Blueprinting, Clinical Documentation, Digital Scribe, Human-centric AI, Service Design, UX Design.

Relevance to Design Practice – We explored how the service blueprinting method can be used to expand current UX design approaches and improve team collaboration during human-centric AI innovation projects.

Citation: Magyari, R., & Secomandi, F. (2023). Service blueprinting for better collaboration in human-centric AI: The design of a digital scribe for orthopedic consultations. International Journal of Design, 17(3), 63-77. https://doi.org/10.57698/v17i3.04

Received November 23, 2022; Accepted July 31, 2023; Published December 31, 2023.

Copyright: © 2023 Magyari & Secomandi. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content is open-accessed and allowed to be shared and adapted in accordance with the Creative Commons Attribution 4.0 International (CC BY 4.0) License.

*Corresponding Author: f.secomandi@tudelft.nl

Reka Magyari is a user experience designer and researcher focusing on the conceptualization of data-driven user experiences and the integration of AI technologies in digital service solutions in the healthcare domain. She is currently working at ZEISS Digital Partners (Munich) after acquiring experience at Attendi (Amsterdam) and Philips Experience Design (Eindhoven). She graduated with an MSc in Integrated Product Design from the Department of Industrial Design Engineering at the Delft University of Technology.

Fernando Secomandi’s research mainly focuses on themes that emerge at the intersection of industrial design and the philosophy of technology. He currently investigates the design of AI-enabled service interfaces that mediate social relations in healthcare and other domains. Additionally, he is the associate editor for the Journal of Human-Technology Relations (TU Delft OPEN Publishing).

Introduction

Artificial intelligence (AI) is increasingly discussed in the context of healthcare due to its potential to assist medical practitioners with a range of tasks. Modern technologies such as Automated Speech Recognition (ASR) and Natural Language Processing (NLP) may enable new forms of digital scribes to assist with clinical documentation by partially automating note-taking activities, allowing for the transcription and conversion of clinician-patient consultations into structured clinical notes. Such an innovation could reduce the high administrative burden currently faced by clinicians who rely on manual documentation of patient consultations.

However, designing such AI tools presents new methodological challenges that design practitioners and researchers have only recently begun to come to terms with. Within the HCI domain, a growing body of research has treated AI—or, more precisely, machine learning (ML)—as a new material that designers can shape when creating new products and services to improve the user experience (Dove et al., 2017; Holmquist, 2017; Yang, 2017; Yang et al., 2018; Yildirim et al., 2022). This trend has been corroborated more broadly by developments within the field of AI innovation, where user experience (UX) design is emphasized for the creation of human-centric AI, i.e., AI devised for the benefit of human users and based on a deep understanding of AI’s capabilities (e.g., Lepri et al., 2021). At the same time, design researchers have expressed concern about the current lack of UX design knowledge and tools oriented toward human-centric AI, especially given the distinct behaviors that AI technologies may manifest at the user interface level (e.g., Dove et al., 2017; Holmquist, 2017).

The few empirical studies that explore the perspectives of UX designers working with AI technologies echo many of the previously mentioned expectations associated with AI, as well as some shared concerns (Dove et al., 2017; Yang et al., 2018; Yildirim et al., 2022). Practicing designers seem to agree that UX-related expertise is critical for understanding user needs within the broader context of a person’s life, particularly when imagining and prototyping new forms of interactions and evaluating the ethical impact of designed solutions. In addition, the existing research has helped to shed light on interactions between UX designers and ML specialists in real-world contexts. Notably, experienced designers seem untroubled by the fact that their AI-related expertise may not extend beyond matters of user-interface relations into the underlying technical infrastructure, so long as their knowledge is sufficient to allow them to collaborate with software engineers and other specialists (Yang et al., 2018; Yildirim et al., 2022). Instead, they aim to foster effective collaborations using various strategies, such as employing abstractions and popular examples to communicate ideas, getting involved at different stages from conceptual development to market release, embracing a data-driven culture, visualizing user research insights, and advocating their perspective on users to others involved.

In this paper, we contribute to the ongoing discussions about team collaboration among UX designers and ML engineers by reporting on the conceptual design of an AI-enabled digital scribe for orthopedic clinicians. In this context, ML engineers are the software designers who program and build scalable software to automate AI and ML models. UX designers are defined as designers whose task is to conceptualize user- and experience-centered interfaces and the resulting interactions between humans and the AI/ML models. This study especially focuses on the introduction of a design method—a service design method called “blueprinting”—to complement the current repertoire utilized by UX designers involved in human-centric AI innovation projects.

The service blueprinting method is not entirely new to the field of HCI. Lee and Forlizzi (2009) previously made use of this approach to improve the user experience in interactions with social robots. More recently, Yoo et al. (2019) employed the method to map designers’ roles and values in relation to other stakeholders in a new digital library service. In another study on designer roles in cross-functional AI teams, Yildirim et al. (2022) conducted practical experiments that adopted the method to facilitate communication about data across teams with different expertise in various areas. However, such research remains sparse, and except for the work of Yildirim et al. (2022), it has not been directly connected to the design of user interfaces as enabled by ML technology.

This gap in the literature can be at least partially attributed to the fact that there has been limited interaction between the HCI and service design fields. Even though a major influence on service design during its early development came from the HCI community (e.g., Holmlid & Evenson, 2008; Moggridge, 2007), these disciplines progressively diverged in subsequent years. Despite this separation, HCI researchers have continued to recognize significant areas of overlap and have identified potential benefits to be gained by adopting a service perspective (e.g., Forlizzi, 2010, 2018; Zimmerman & Forlizzi, 2013). In fact, some studies have addressed emerging technologies such as crowd-sourced computing (Zimmerman et al., 2011) and social robotics (Lee & Forlizzi, 2009) in a manner that has posed novel challenges for UX designers—challenges that mirror those faced by HCI researchers as they confront rapidly advancing ML technologies. This has led to calls for greater integration of service design principles into HCI practices, an appeal that is gaining traction among researchers (e.g., Lee et al., 2022; Leinonen & Roto, 2023; Roto et al., 2021; Yoo et al., 2019). Service design, after all, is equally committed to the user experience; in addition, it can expand UX design approaches by providing direction throughout the design process. It does so by highlighting the role of stakeholders beyond mere users and revealing the sociotechnical resources implicated in the co-creation of value by all stakeholders involved.

In summary, few academic publications have thus far addressed how service design can be used to improve team collaboration in relation to human-centric AI or, more broadly, UX design for emerging technologies (Lee et al., 2022; Leinonen & Roto, 2023; Yildirim et al., 2022). The present case study seeks to explore this relatively unaddressed area of academic research; specifically, it provides insights acquired by implementing the service blueprinting method during the design of a new AI-enabled digital scribe for clinical documentation.

The remainder of the paper is structured as follows: first, we present an overview of the current literature on technology-assisted documentation in clinical settings, with a focus on the use of AI. Next, we provide an overview of the design project on which this case study is based. Following this, we discuss in greater depth how service blueprinting was applied with a particular focus on how it impacted team collaboration, especially between UX designers and ML engineers. Based on these findings, we conclude by identifying some of the limitations of our approach and providing recommendations for improving collaboration within human-centric AI development teams using the service blueprinting method.

Digital Scribes in Clinical Documentation

Clinical documentation refers to typing a text record summarizing the interaction between a patient and a medical provider during a clinical encounter (Rosenbloom et al., 2011). In most healthcare settings, such interactions consist of conversations that take up a significant portion of the allotted consultation time, often requiring clinicians to multitask while talking to their patients by either manually taking notes in a physical notebook or typing them on an electronic device.

In recent years, the healthcare sector has seen rising rates of clinician burnout due to their daily administrative burden, among other factors. In the Netherlands, where our design project was carried out, clinicians spend as much as 35% of their time handling administrative tasks (Joukes et al., 2018), time that could be better spent addressing patient needs (Dugdale et al., 1999). In the context of outpatient care, it has been estimated that for every hour that physicians spend face-to-face with patients, approximately another two hours are spent on documentation (Sinsky et al., 2016). Clinical documentation is crucial for various reasons, including ensuring patient safety; however, there is an urgent need to make this process more efficient.

In principle, automating certain note-taking and documentation tasks could save valuable time for clinicians, allowing them to re-emphasize patient care (Quiroz et al., 2019). One way in which automation is currently being implemented to assist with clinical documentation is in the form of AI-enabled digital scribes, computational systems that combine Automated Speech Recognition (ASR) and Natural Language Processing (NLP) technologies (Coiera et al., 2018). Usually, a microphone is used to record the audio of a consultation, which is then processed by ASR software to convert human speech into text (Jamal et al., 2017). The transcribed speech is further processed by NLP software to extract and summarize relevant information, which is then communicated back to the physician (Jeblee et al., 2019).

Using ASR and NLP technologies for clinical documentation presents numerous technical challenges (Jeblee et al., 2019; Kanda et al., 2019; Quiroz et al., 2019). The digital scribe must be able to record every relevant utterance, understand what is being said (and by whom), add punctuation marks appropriately, extract and classify meaningful parts from unstructured texts, summarize information from relevant sentences, and so forth. Ultimately, specialists believe the success of digital scribes will be strongly dependent on how well the interface between the clinician and the AI is designed, even though there is still little consensus about how to best model these user interactions (Coiera et al., 2018).

One of the proposed models for human-AI interaction relevant to digital scribes is known as mixed-initiative (Coiera et al., 2018). In this model, the responsibility and control over specific parts of the documentation process are distributed to either humans or AI technologies based on their distinct abilities to achieve a desired outcome. Recent research with primary care practitioners suggests that doctors favor this type of collaborative model, at least until stronger evidence emerges for the reliability and clinical safety of more fully automated documentation processes (Kocaballi et al., 2020). Specifically, doctors prefer to maintain their ability to adapt and personalize care based on particular circumstances, control for automation bias that can negatively impact patients, and retain control over core tasks while delegating less crucial ones to the technology. Generally speaking, they hope to utilize the distinct capabilities of AI in a way that can augment and benefit their own.

The flexibility offered by the mixed-initiative model led us to adopt this approach for our design project: an AI-enabled digital scribe for orthopedic clinicians. In the following section, we describe in detail the conceptual design of a new interface for the digital scribe which was guided by user experiences.

Designing the User Interface of an AI-enabled Digital Scribe

Our design project was carried out in the Netherlands in collaboration with two academic hospitals and a startup company specializing in language customization technologies for healthcare settings. At the start of the project, this young company had been focusing primarily on the technical development of their proprietary digital scribe for clinical documentation. Their development team comprised eight employees: six software developers (three specialists in ASR, two in NLP, and one in back-end development), a product manager, and a medical lead who previously practiced as an orthopedic surgeon.

The first stages of the startup’s product development roadmap focused on setting up a software infrastructure that could organize consultation recordings and generate a dataset for pre-training the system’s NLP algorithms. Because the startup lacked expertise in UX design, they hired a graduate student from a technical university with a longstanding master’s program in interaction design to create their digital scribe’s user interface. Such collaborations between industry and academia represent one of the common ways in which AI innovation advances in the Netherlands. The startup sought to validate the value proposition of their digital scribe efficiently and effectively by aligning with lean principles and methodologies. In our project, the first task we undertook was to validate the new concept with a group of primary adopters (i.e., clinicians); later, the focus would be shifted to other relevant stakeholders (e.g., patients) with additional investments made to develop the new technology and deploy it in actual healthcare systems.

The startup company decided to focus on orthopedic consultations as the initial testing ground for their technology, as such consultations tend to be short, occur in high volumes, and have well-structured anamnesis (i.e., information recorded by the doctor, which is obtained during a patient consultation and used to formulate a diagnosis). Hence, this setting appeared to offer the ideal conditions for the implementation of ASR and NLP technologies. The main function of the digital scribe was to automate a substantial portion of the doctors’ note-taking tasks while still granting them the flexibility to edit the resulting documentation. To achieve this, text entries needed to be as structured as possible, preferably by means of a template, with a curated set of questions and answers relevant to the specific type of consultation, thus limiting the range of variables resulting from free text input. It was expected that the main value of such a digital scribe for clinicians would be to reduce the volume of manual typing required to enter notes in electronic health record (EHR) systems, which is almost universally regarded by clinicians as time-consuming, repetitive, and monotonous.

Our design methodology aligned with prior studies on UX design for human-centric AI innovation in healthcare settings (e.g., Cai et al., 2019; Calisto et al., 2021; Gu et al., 2021; Yang, 2017). It covered the stages from user research to the conceptual design of the new interface and combined experience-centric methods commonly used in interaction design and service design practices, as previously demonstrated in several real-world projects (e.g., Leinonen & Roto, 2023; Yildirim et al., 2022). We planned and conducted user research through in-depth interviews and job shadowing to explore the problem space. Our findings were then processed and consolidated through the creation of user personas and journey maps to develop a list of requirements that guided the conceptual design stage. Based on this list, we conceptualized the user experience by mapping out user flows, devising the service blueprint, and designing the screen interface. These visual designs were iteratively refined and eventually evolved into an interactive mock-up that was tested by end-users (i.e., clinicians). Throughout this process, insights, ideas, and concepts were mainly derived from the work of the UX designer and were regularly discussed with professional clinicians and other members of the development team.

Below, we provide an overview of the main activities of our design project. This is followed by a detailed discussion of the service blueprinting method.

User Interviews

The UX designer interviewed a total of six orthopedic surgeons and two non-orthopedic clinicians, all of whom were recruited from collaborating hospitals. These interviews lasted from 30 to 45 minutes and covered various themes relevant to clinical documentation, including consultation styles, control over technology-assisted recordings, and the perceived value of automated transcripts. All interview sessions were conducted one-on-one, either in-person or virtually. Audio recordings from the sessions were transcribed and coded in ATLAS.ti using thematic analysis (Ritchie & Lewis, 2003) and were combined with the data collected during job shadowing, as explained below.

Analysis of the interviews revealed clear frustrations among the clinicians related to the strict time constraints affecting note-taking, which often forced them to multitask and touch-type during consultations. Moreover, the presence of a computer between them and their patients was perceived as hindering the attention they could provide and constraining the doctor-patient relationship. Over time, experienced clinicians tend to develop their own style for writing consultation notes, which is sometimes difficult for other colleagues or patients to interpret. The clinicians interviewed expressed a desire for a new intelligent system that would become self-learning; that is, a system employing adaptive learning algorithms to continuously adjust based on the details of an interaction. While clinicians were in favor of automation for repetitive tasks, they also expressed pride in their individual documentation styles. Ultimately, they emphasized the need to control the quality of content being entered into the EHR and feared quality may be compromised if they were forced to become overly reliant on an automated system.

Job Shadowing

To gain deeper knowledge about topics discussed in the interviews and to observe clinicians in a professional real-world context, the UX designer accompanied two orthopedic surgeons as they carried out their daily tasks at the two collaborating hospitals. During these job shadowing activities, the complete documentation process during clinician-patient consultations was carefully observed and described. In preparation for this activity, the designer devised a list of topics to guide observations and attempted to identify tasks that could be automated. Entire work shifts were observed, with the designer sitting unobtrusively alongside the doctor during patient consultations. At the end of a work shift, follow-up questions were raised with the doctors in an unstructured manner to provide additional insights, leading to the creation of user personas and journey maps.

User Personas

Based on an analysis of the research data collected through the activities discussed above, the UX designer synthesized two clinician profiles to create distinct user personas: the “Multitasker” and the “Balancer.” These names were inspired by the fact that, during consultations, the orthopedic surgeons were constantly forced to alternate between paying attention to documentation tasks and their interactions with patients. Multitaskers tended to complete all documentation during consultations, while Balancers strived to spend more time talking to patients, leaving some of the documentation to be completed after the consultation. It should be noted that the Multitaskers usually worked in non-academic hospitals, with responsibilities that included both orthopedic surgery and outpatient clinic consultations, while the Balancers worked in academic hospitals and were responsible for overseeing more complex cases and performing academic research. Multitaskers, who generally treated simpler cases, usually had shorter consultations with well-structured anamnesis. Importantly, this persona represented a use context in which automation was more urgent; therefore, the development team opted to prioritize this type of user as an early adopter of the new digital scribe.

As personas are representations of the target user groups, the goal was to summarize the key characteristics that could orient interface design deliberations. With their busy schedules, Multitaskers typically allow ten minutes for each consultation. These clinicians seek to deliver quality care for their patients within the allotted time, yet they expressed frustration over time constraints preventing them from providing more comprehensive assistance. After all, individual differences among patients necessitate distinct and personalized approaches to their care. As a result, some patients require more time than others, and if it is not possible for a clinician to finish documentation during the consultation, additional time and attention are required following the work shift. Furthermore, Multitaskers reported dissatisfaction with the informational architecture of the EHR system wherein medical notes are typed and saved.

Journey Maps

The results of the user research also led to the creation of two journey maps for the Multitasker, with one representing the entire workflow of an orthopedic clinician during a shift, and another depicting a typical consultation from start to end. The former allowed for easy visualization of orthopedic surgeons’ primary activities in a chronological sequence, activities which typically consist of approximately thirty consultations between 8 a.m. and 5 p.m. The second journey map, focusing on individual consultations, comprised several key steps, such as preparing, conducting, and wrapping up patient sessions, and included all associated documentation tasks. These specific steps were further illustrated with actual quotes from clinicians and their perceived or expressed emotions, drawing on data from the interviews and observations.

Given that the digital scribe’s primary function is to assist with notetaking activities, a closer examination of the consultation journey is relevant here. Typically, each orthopedic consultation starts with a general survey of the patient to determine their current state and the reason for their consultation. Next, an evaluation phase takes place, in which the orthopedic surgeon assesses whether the treatment is working (in the case of follow-up patients) and asks more specific questions. During this stage, patients express complaints and reveal any pains that they are currently experiencing. The consultation then proceeds to a physical examination, in which the surgeon assesses the patient’s range of movement and overall mobility in an attempt to find the source of the patient’s discomfort. Although this phase consumes most of the consultation time and requires detailed and accurate documentation, it is all but impossible for the clinician to take notes while simultaneously conducting the physical examination. In the final phase of the consultation, the surgeon formulates the diagnosis and addresses the patient’s questions. The sequence of these steps remained consistent throughout all consultations observed and is reflected in the structure of the surgeons’ notes.

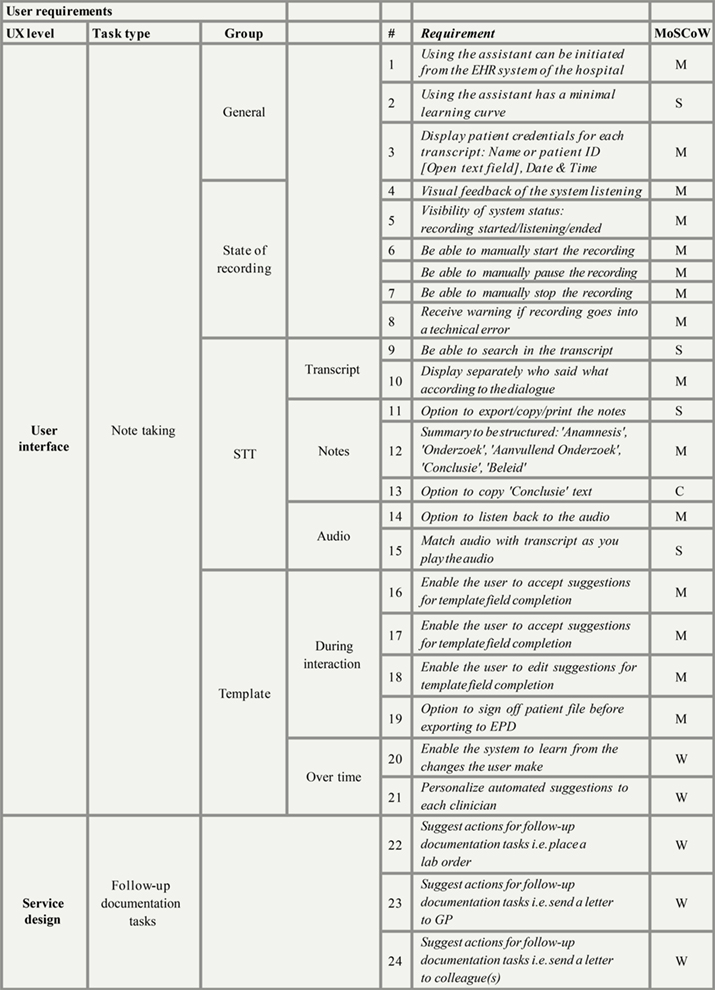

List of Requirements

To guide the conceptual design of the new user interface, a list of requirements was derived from the user research and augmented with general guidelines from recent studies on human-centric AI (e.g., Amershi et al., 2019). The development team discussed these requirements to establish mutual agreement about their relative importance and prioritize them accordingly. Additionally, the UX designer consulted with the medical lead to rate each requirement from a user perspective according to the MoSCoW method, a popular tool used in Agile software development (Davis, 2013). The method’s name is an acronym, where “M” stands for “must haves,” “S” for “should haves,” “C” for “could haves,” and “W” for “won’t haves.” Factors beyond user-related issues, such as technical limitations, business strategies, and product development roadmaps were also considered. Next, a joint session was held with the company’s product manager and technical lead, during which all requirements were rated to calculate averages. The final list of user requirements (Appendix) contained the main features and functionalities to be embodied in the digital scribe’s new interface. Importantly, all must-have requirements were clearly defined, as the initial focus was to validate the most fundamental usability-related functionalities of the new concept with clinicians.
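The averaging step in such a rating session can be illustrated with a minimal sketch. The paper does not report how MoSCoW labels were converted into numbers, so the weights below (M=3, S=2, C=1, W=0), the function names, and the example requirements and ratings are all assumptions made for illustration only.

```python
from statistics import mean

# Hypothetical numeric weights for the MoSCoW categories (not from the paper).
MOSCOW_SCORE = {"M": 3, "S": 2, "C": 1, "W": 0}


def average_priority(ratings):
    """Return the mean numeric score for one requirement's MoSCoW labels."""
    return mean(MOSCOW_SCORE[label] for label in ratings)


def rank_requirements(rated):
    """Sort requirement names from highest to lowest mean score."""
    return sorted(rated, key=lambda name: average_priority(rated[name]), reverse=True)


# Illustrative requirements and ratings, invented for this example.
example = {
    "Pause and resume recording": ["M", "M", "S"],
    "Keyword search in transcript": ["S", "C", "S"],
    "Export to PDF": ["W", "C", "W"],
}

if __name__ == "__main__":
    for name in rank_requirements(example):
        print(f"{average_priority(example[name]):.2f}  {name}")
```

A numeric mapping of this kind makes disagreements between raters visible as fractional averages, although a team could equally settle priorities through discussion alone.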

Service Blueprinting

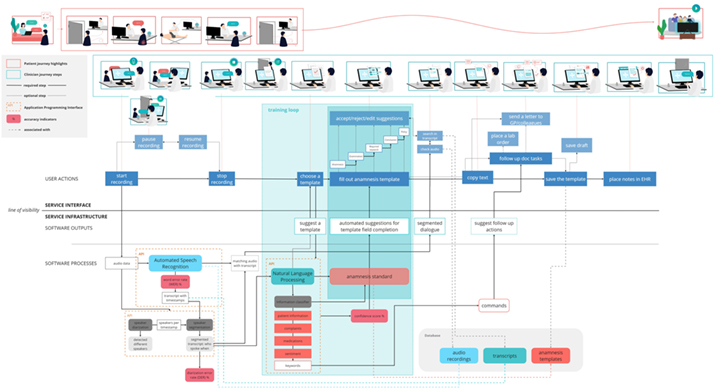

At the suggestion of the UX designer, a service blueprint was devised in collaboration with the rest of the team to visualize the new user interface in the context of clinician-patient interactions. It detailed key processes related to clinicians’ actions as well as ASR/NLP software, both of which were essential for the digital scribe to effectively complete clinical documentation. The development of the blueprint and its impact on team collaboration are discussed later in the paper.

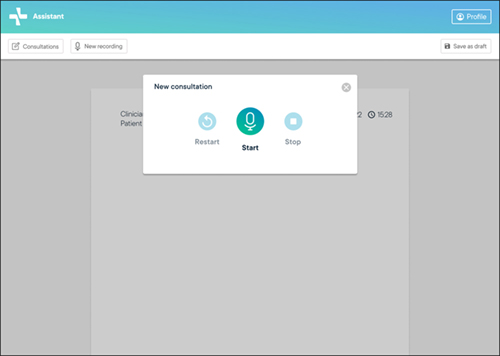

Screen Interface Design

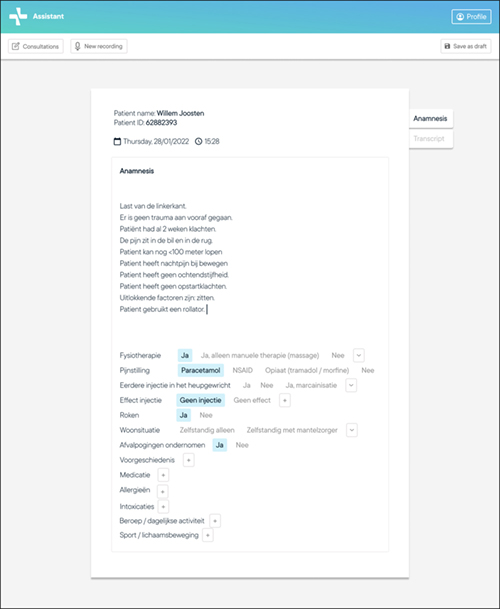

The conceptualization of the screen interface began by extracting user flows from the service blueprint. These user flows consisted of a series of steps, logically organized, that started from an initial status and ended with the completion of the task in question. Three user flows were ultimately identified, corresponding to key tasks that clinicians should complete when interacting with the digital scribe’s interface: recording the consultation, filling out the anamnesis template, and querying a patient file from the database. Based on these user flows, an interactive mock-up was devised to simulate the behavior of the AI-enabled interface for users as they performed the tasks. For instance, at the beginning of a consultation, when clinicians pressed Start, the digital scribe would record the audio of the conversation between the clinicians and their patients (Figure 1). If necessary, clinicians could pause and restart the recording. It was determined that the interface should also continuously provide visual feedback to users so they could remain aware of its current state. ASR technology would be used to convert the recorded audio into transcripts by identifying different speakers, creating segments, and adding timestamps. Meanwhile, the software would match the audio to the segmented transcript so that the integrated data could be transferred to the NLP pipeline.
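The recording flow described above (start, pause, restart, and continuous feedback about the current state) can be read as a small state machine. The following is a minimal sketch of that reading, not the startup's implementation; the state names, action names, and the `status_label` helper are hypothetical.

```python
from enum import Enum


class RecorderState(Enum):
    IDLE = "idle"
    RECORDING = "recording"
    PAUSED = "paused"
    STOPPED = "stopped"


# Allowed transitions, keyed by (current state, user action).
# The action names are assumptions made for this sketch.
TRANSITIONS = {
    (RecorderState.IDLE, "start"): RecorderState.RECORDING,
    (RecorderState.RECORDING, "pause"): RecorderState.PAUSED,
    (RecorderState.PAUSED, "resume"): RecorderState.RECORDING,
    (RecorderState.RECORDING, "stop"): RecorderState.STOPPED,
    (RecorderState.PAUSED, "stop"): RecorderState.STOPPED,
}


def next_state(state, action):
    """Return the state after an action; actions that are not allowed leave it unchanged."""
    return TRANSITIONS.get((state, action), state)


def status_label(state):
    """Text the interface could display so the clinician always sees the current state."""
    return f"Recorder: {state.value}"


if __name__ == "__main__":
    state = RecorderState.IDLE
    for action in ["start", "pause", "resume", "stop"]:
        state = next_state(state, action)
        print(action, "->", status_label(state))
```

Keeping the transitions in one table makes it straightforward to check that every state reachable by the clinician has a corresponding visual indicator.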

Figure 1. Interactive mock-up of the user interface of the AI-enabled digital scribe, displaying the panel for controlling the recording of consultations.

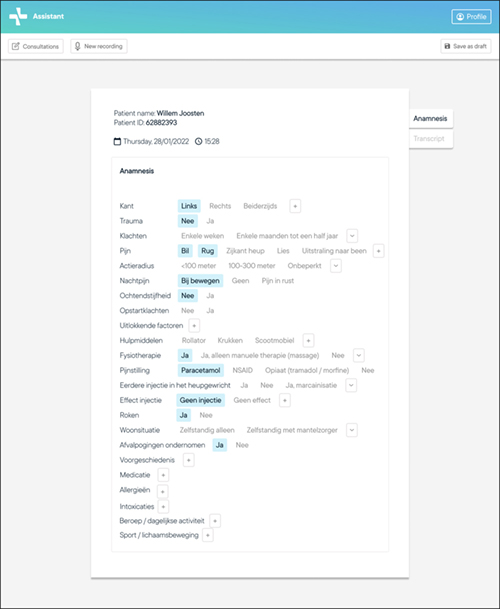

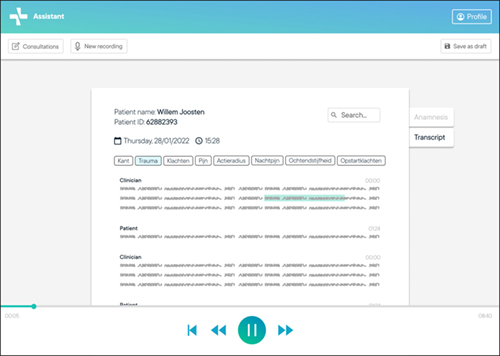

Once the recording was stopped, the user was presented with a template screen for anamnesis completion (Figure 2). This template was structured in the order that topics are addressed during a typical consultation, as identified in the job shadowing activity. As noted above, in orthopedic consultations, this order does not usually change from one patient to another. The principle for filling out the anamnesis template was based on partial automation; that is, different fields in the template were initially prefilled based on information automatically processed by the underlying AI technologies. These fields were highlighted and presented as clickable buttons, giving clinicians the ability to edit the final result, as our user research emphasized the importance of human control. Furthermore, clinicians were provided with the option to manually add notes in a free text field (Figure 3). To enhance transparency with regard to how data from the consultation transcript was used to prefill the template, users could access the transcript through a panel on the right of their screen where they could perform keyword searches (Figure 4).
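The principle of partial automation with human control can be sketched as a data structure in which each template field records whether its value came from the AI or from the clinician. This is an illustrative sketch only; the class, the function names, and the field labels are hypothetical and are not taken from the mock-up.

```python
from dataclasses import dataclass


@dataclass
class TemplateField:
    label: str
    value: str = ""
    source: str = "empty"  # one of "empty", "ai", "clinician"


def prefill(fields, suggestions):
    """Fill matching fields with AI suggestions and mark them as AI-sourced."""
    for field in fields:
        if field.label in suggestions:
            field.value = suggestions[field.label]
            field.source = "ai"
    return fields


def edit(field, new_value):
    """A clinician edit always overrides the current value, including AI suggestions."""
    field.value = new_value
    field.source = "clinician"
    return field


def highlighted(fields):
    """Labels of fields the interface would highlight as automatically prefilled."""
    return [field.label for field in fields if field.source == "ai"]


if __name__ == "__main__":
    # Hypothetical field labels and suggestions, invented for this example.
    template = [
        TemplateField("Reason for visit"),
        TemplateField("Pain location"),
        TemplateField("Additional notes"),
    ]
    prefill(template, {"Reason for visit": "knee pain follow-up", "Pain location": "left knee"})
    edit(template[1], "right knee")
    print(highlighted(template))
```

Tracking the source of each value is one way to keep automated suggestions distinguishable from clinician input, and fields left empty remain skippable.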

Figure 2. Interactive mock-up of the user interface of the AI-enabled digital scribe, displaying an automatically completed anamnesis template for the consultation (in Dutch).

Figure 3. Interactive mock-up of the user interface of the AI-enabled digital scribe, displaying the manual input by the clinician on an automatically completed anamnesis template following the consultation (in Dutch).

Figure 4. Interactive mock-up of the user interface of the AI-enabled digital scribe, displaying results from a search conducted on an automatically transcribed consultation (in Dutch; some portions redacted for confidentiality).

This design approach resulted in a screen interface that embodied the mixed-initiative model of human-AI interaction, where humans share responsibility with the technology while retaining a high degree of control over the overall documentation process and outcomes. As previously noted in our healthcare literature review, clinicians typically prefer this kind of technology-assisted documentation model (Coiera et al., 2018).

User Testing

To obtain additional feedback about the proposed concept, the interactive mock-up was also tested with four orthopedic surgeons, who took part in a hypothetical scenario where they imagined they were using the digital scribe for documenting a patient consultation. Participants were asked to talk out loud during testing to explicitly reveal their thought processes as notes were being taken. The results from the test revealed that users of the digital scribe had high expectations for obtaining a polished summary of the conversation “in one click,” highlighting the need to clearly explain the potential as well as the limitations of the AI technologies involved. As for the anamnesis template feature, it was confirmed that the prefilled information, highlighted as buttons, was considered useful for accepting or editing automated suggestions. Clinicians also appreciated the option to skip certain fields of the template when completing it.

Following the test, clinicians completed a questionnaire regarding the concept’s perceived usefulness and ease of use. The questionnaire included 12 items drawn from the Technology Acceptance Model (TAM) scale (Rahimi et al., 2018), which is a validated instrument within the healthcare domain for assessing these two constructs. The clinicians’ mean scores were above 5 on a six-point Likert scale where 6 was the positive end point. Although these results cannot be considered statistically significant or representative given the small sample size (four participants), they were nonetheless incorporated into the qualitative analysis of user interactions with the mock-up, and the information was relayed back to the startup company to guide further development of the user interface. This stage marked the end of our design project’s conceptual design process. We now turn to an in-depth discussion of the service blueprinting method and its influence on team communication during the conceptual design stage.

Service Blueprinting for Better Collaboration Between UX Designers and ML Engineers

Introduction to the Method

Blueprinting was first introduced in the marketing field in the early 1980s (Shostack, 1982), and has since gone on to become a staple of service design methodology. For several years after its introduction, while discussions about services were still rare in design research, blueprinting was often the only design method featured in the primary service marketing textbooks (e.g., Zeithaml et al., 2008). However, around the early 2000s, the method began to gain traction among the early advocates of service design, many of whom had backgrounds in design disciplines (e.g., Moggridge, 2007; Morelli, 2003).

Broadly speaking, the service blueprint can be defined as a flowcharting technique centered on users or any other end-beneficiaries of services (Sampson, 2012). Its most defining and enduring feature, evident in the various formats proposed over the years (e.g., Bitner et al., 2008; Fliess & Kleinaltenkamp, 2004; Kingman-Brundage et al., 1995; Shostack, 1984; Stacey & Tether, 2015), is the separation of two domains of service production by a so-called “line of visibility.” The first domain is the frontstage, or service interface, which consists of processes that are directly experienced by users. The second is the backstage, or service infrastructure, which comprises processes that support the user-facing ones and are typically controlled by service providers but not directly experienced by users (Secomandi & Snelders, 2011).

When applying the service blueprint to our case, we adopted this basic structure while adding other elements (e.g., storyboards) and modified the original blueprint to suit our particular context and objectives. This is a common approach within the specialized service design discipline (e.g., Stickdorn et al., 2018) and has also been observed in previous implementations of service blueprinting by HCI researchers (Lee & Forlizzi, 2009; Yildirim et al., 2022; Yoo et al., 2019). Since we aimed to validate the digital scribe’s value proposition for clinicians, the blueprint relied on data from user research with this specific target group. Consequently, it primarily elaborated on the clinician perspective within broader healthcare systems, focusing on their knowledge of and interaction with patients, existing technologies (e.g., EHR systems), and other colleagues.

Blueprinting the AI-enabled Consultation Service

As suggested in the overview of the design process, service blueprinting initiated the conceptual design phase and served as a bridge between the establishment of design guidelines at the end of the user research phase and the activities leading to the materialization of the new design concept (in the form of a screen-based user interface). As such, it aligned with the framework recently proposed by Leinonen and Roto (2023) based on best practices for knowledge transfer between service designers and software developers.

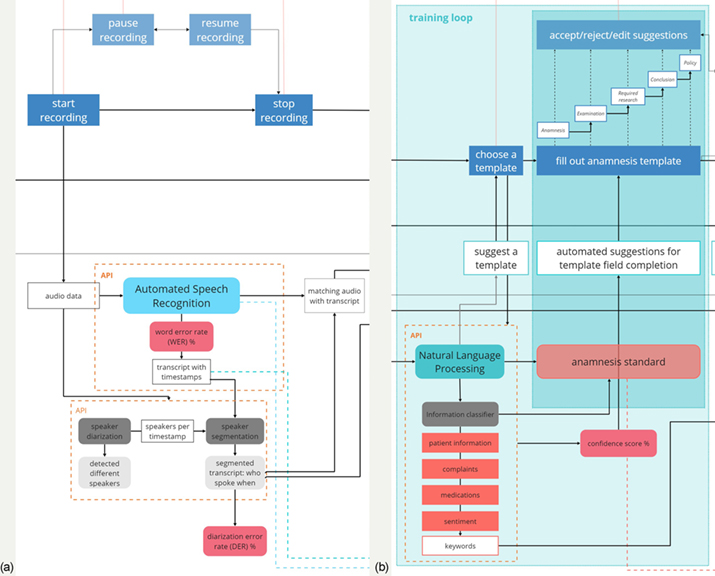

Initially, the blueprinting method was unfamiliar to the other team members, who required a detailed explanation from the UX designer. The blueprint creation started with a broad identification of the main steps of the consultation journey: recording the consultation, interpreting the text, and generating the report. These phases served as the starting point for mapping out the underlying technological processes and guided descriptions of the flow of data at various stages, including capturing audio with ASR and automating the results using NLP. Early in the process, the tech lead provided a diagram of the software architecture which outlined software processes in the form of large building blocks. While this visualization helped the UX designer gain a general understanding of the technology, it lacked pertinent details about subprocesses and did not include any information about user interaction with the technologies beyond vague references to “inputs” and “outputs.”

To refine this original diagram, the UX designer collaborated with other members of the development team, gradually adding details to the building blocks such as associated technologies, data formats, subprocess chains, and (sub)results. In addition, connecting arrows were added between (sub)process blocks, transforming the initial diagram into a comprehensive flowchart. Regular meetings with the tech lead were held to ensure accuracy and consistency in the representation of the underlying AI technologies.

The next step in blueprint creation was to integrate the results of the previous user research activities—especially clinician and patient actions before, during, and after consultations. These actions were illustrated in the blueprint using storyboards that depicted key moments in the journeys of clinicians and patients.

Figure 5 displays the final version of the service blueprint. Along the top, the red boxes represent a patient’s journey. The storyboard starts with their arrival at the front desk of the orthopedic department and ends after the consultation has taken place. The patient is offered the opportunity to listen to the recording of their interaction with the clinician following the consultation, perhaps even after returning home. The next row of boxes (outlined in blue) depicts the clinician’s journey, starting from when a patient first enters the consultation room and ending with the entry of the anamnesis into the electronic health record system after the patient has left. Within the service interface domain, several actions taken by clinicians using the new screen interface (“user actions”) are indicated above the line of visibility. These actions include starting/pausing/stopping audio recordings, choosing an anamnesis template, accepting/rejecting/editing automated suggestions, copying text for inclusion in a referral letter, and saving notes. Below the line of visibility, denoting the service infrastructure domain, processes associated with ASR and NLP software are presented, which also relate to user actions. For instance, the consolidation of data that feeds the ASR algorithm depends on the action of starting the recording (Figure 6a). Later, the user will depend on the output from NLP-related processes that have generated automated suggestions for specific fields to complete the anamnesis template (Figure 6b).

Figure 5. Service blueprint of an AI-enabled digital scribe for orthopedic consultations.


Figure 6. Detailed interfacing and infrastructural service processes (a) related to ASR and (b) related to NLP.
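The two-domain structure described above can be sketched as a small data model. The following is a minimal illustration only, not the representation used in the project: the class names (`Step`, `Blueprint`), the intermediate step names, and the `crossings` helper are all hypothetical. It shows how user actions above the line of visibility could be linked to ASR and NLP processes below it, so that the links crossing the line can be listed.

```python
from dataclasses import dataclass, field
from enum import Enum


class Domain(Enum):
    INTERFACE = "frontstage"        # above the line of visibility
    INFRASTRUCTURE = "backstage"    # below the line of visibility


@dataclass(frozen=True)
class Step:
    name: str
    lane: str          # e.g. "user actions", "ASR", "NLP"
    domain: Domain


@dataclass
class Blueprint:
    steps: dict = field(default_factory=dict)   # step name -> Step
    links: list = field(default_factory=list)   # (source, target) name pairs

    def add(self, step):
        self.steps[step.name] = step

    def link(self, source, target):
        # Only allow arrows between steps that are already on the blueprint.
        if source not in self.steps or target not in self.steps:
            raise KeyError("both steps must be added before linking")
        self.links.append((source, target))

    def crossings(self):
        """Return the links that cross the line of visibility."""
        return [(s, t) for s, t in self.links
                if self.steps[s].domain != self.steps[t].domain]


def build_example():
    # Step names are illustrative assumptions loosely based on Figure 6.
    bp = Blueprint()
    bp.add(Step("start recording", "user actions", Domain.INTERFACE))
    bp.add(Step("consolidate audio data", "ASR", Domain.INFRASTRUCTURE))
    bp.add(Step("transcribe audio", "ASR", Domain.INFRASTRUCTURE))
    bp.add(Step("generate field suggestions", "NLP", Domain.INFRASTRUCTURE))
    bp.add(Step("accept/reject/edit suggestion", "user actions", Domain.INTERFACE))
    bp.link("start recording", "consolidate audio data")
    bp.link("consolidate audio data", "transcribe audio")
    bp.link("transcribe audio", "generate field suggestions")
    bp.link("generate field suggestions", "accept/reject/edit suggestion")
    return bp
```

Calling `build_example().crossings()` returns the two links where a user action and a software process meet, which are the points where interface design and ML engineering decisions depend on each other.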

Benefits of Service Blueprinting for Team Collaboration

This subsection explores the primary ways in which the service blueprinting method can enhance team collaboration. These conclusions are exploratory and resulted from analyses of the intermediary materials produced during blueprint generation (e.g., meeting notes, visualizations, etc.), as well as interviews conducted with two ML engineers (one of whom specializes in computational linguistics), which provide unique perspectives on the use of this method. Following the conclusion of these interviews, this paper’s authors discussed preliminary interpretations of the analyzed data and collected feedback from members of the development team to clarify and better understand the results, which are explored below.

Shared Understanding About Joint Design Challenges

Because the service blueprint was built on the well-known technique of flowcharting, all members of the development team readily grasped its basic concept. UX designers commonly use flowcharts to describe user flows; ML engineers use them to map out software processes and data models. As previously mentioned, the designer utilized a diagram of the software architecture created by the tech lead that explained information flows and outcomes pertaining to ASR and NLP processes. The user in this diagram was merely represented as a “black box,” with inputs and outputs connected to ASR/NLP processes.

The UX designer translated this diagram into the service blueprint, providing detailed insights into both the clinician interactions with the digital scribe and the contextual framework surrounding those interactions. Essentially, the blueprint gave the designer an opportunity to “unpack users” for the ML engineers by explaining, with visual support, how insights from user research related to the underlying software processes that would power the digital scribe. For the engineers, this offered a window into how specific processes they devised would ultimately impact user actions in a specific way, i.e., in the context of users’ working environment and daily life experiences. These actions were made even more tangible through the storyboards’ iconographic and representational style.

For the subsequent phase of our design project, the decision was made to focus on the completion of the anamnesis template, as this segment of the blueprint was anticipated to be of the greatest value to orthopedic clinicians (see darker blue selection in Figure 6b). Starting from the blueprint, the UX designer was then able to devise user flows and screen interfaces, precisely aware of how the main design challenge—prescribing the interface for users—was related to the inputs and outputs of software processes developed by the ML engineers. Moreover, this arrangement meant that these latter specialists could better discern whether anticipated user actions were already addressed by existing ASR/NLP processes or whether further innovation was needed to deliver value for users.

In summary, the blueprint encompassed both the interface and the underlying infrastructure of the service processes in a single visualization, facilitating a shared understanding within the entire team regarding the integrated solution being designed. Both the UX designer and ML engineers understood how user experiences related to their roles and responsibilities throughout the design process. This outcome reveals how the collaborative nature of the process was essential to its success, turning screen designs and software code into collective challenges that involved the entire development team.

Enriched Data with User Insights that Drive AI Innovation

By linking the underlying software processes to the digital scribe’s user interface, the service blueprint helped the ML engineers understand how ASR/NLP input data was generated by user actions. Moreover, because the service blueprint represented user actions in a contextualized manner, ML engineers’ perceptions of these data and their implications for software design were further enhanced.

In our project, the ramifications of enriched user data became evident in relation to the completion of the anamnesis template (Figure 6b). Initially, ML engineers had created NLP processes that generated automated text suggestions for predefined fields in the template. These suggestions were to be accepted, rejected, or edited by clinicians, giving them greater control over the final details. However, user research made it clear that clinicians did not merely want to decide between automated suggestions; they wanted to receive suggestions that improved over time. Such auto-learning would more closely align with clinicians’ expectations of artificial intelligence while simultaneously rewarding the extra effort required to correct the digital scribe, ultimately leading to productivity gains.

In theory, the development team could have improved their understanding of AI data based on user research alone. However, the service blueprint made it possible to immediately realize how these data were connected to the underlying software processes that generated automated suggestions for the anamnesis template. This allowed the ML engineers to identify the additional steps needed to create an improved system that could integrate “learning-on-the-go” behavior as requested by users. Following this, a broader region of the blueprint was emphasized for future software development efforts (see lighter blue selection in Figure 6b). Despite the startup company ultimately deciding to defer this design challenge to a later stage, this example illustrates how enriched user data, as visualized in the service blueprint, helped the development team to identify new opportunities for human-centric AI innovation.
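To make the idea of “learning-on-the-go” more concrete, the sketch below logs clinician responses to automated suggestions so that corrections could, in principle, be reused later. This is a hypothetical illustration and not the startup's implementation, which was deferred to a later stage: the names `Feedback` and `FeedbackLog`, the field names, and the example texts are all assumptions, and no actual model retraining is shown.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Feedback:
    field_name: str                    # anamnesis template field (hypothetical names)
    suggestion: str                    # text proposed by the NLP process
    action: str                        # "accept", "reject", or "edit"
    final_text: Optional[str] = None   # clinician's text after an edit


class FeedbackLog:
    VALID = {"accept", "reject", "edit"}

    def __init__(self):
        self._by_field = defaultdict(list)

    def record(self, fb):
        if fb.action not in self.VALID:
            raise ValueError(f"unknown action: {fb.action}")
        if fb.action == "edit" and fb.final_text is None:
            raise ValueError("an edit needs the clinician's final text")
        self._by_field[fb.field_name].append(fb)

    def acceptance_rate(self, field_name):
        """Share of suggestions accepted unchanged, or None if nothing was logged."""
        items = self._by_field.get(field_name, [])
        if not items:
            return None
        accepted = sum(1 for fb in items if fb.action == "accept")
        return accepted / len(items)

    def corrections(self, field_name):
        """(suggestion, final_text) pairs that could later serve as training examples."""
        return [(fb.suggestion, fb.final_text)
                for fb in self._by_field.get(field_name, [])
                if fb.action == "edit"]
```

A log of this kind would connect the user actions above the line of visibility (accepting, rejecting, editing) to a possible new backstage process that consumes the corrections, which is the region the team marked for future development.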

AI Design as an Organizational Resource for Value Co-Creation

As previously noted, the blueprint described ASR/NLP software processes as part of the service infrastructure. Their inclusion in this domain of the consultation service ensured that AI was framed as an organizational resource controlled by the digital scribe’s provider (i.e., the startup company), which was indirectly accessed by users (i.e., clinicians) through a screen-based interface. Approaching the digital scribe in this manner provided the development team with the opportunity to consider other types of organizational resources besides AI that clinicians could leverage when co-creating valuable outcomes with other stakeholders, such as the startup company and patients. Furthermore, this perspective had important implications for how the UX designer and ML engineers perceived and engaged in their collaboration.

Specifically, framing AI technologies as an organizational resource for value co-creation implied a two-fold transformation for the development team. Firstly, AI resources became relational, meaning they were not simply controlled by the digital scribe’s provider but were dependent on interactions among various stakeholders who controlled their own sociotechnical resources. In this case, the principal stakeholders besides the provider (the startup company) were the orthopedic clinicians. As users of the digital scribe, these clinicians utilized their own smartphones, computers, knowledge, and skills, which, together with provider-related resources, were indispensable for the realization of AI-enabled clinical documentation.

Secondly, by being framed as an organizational resource, AI also became part of a journey consisting of key moments between clinicians and the digital scribe during and immediately after patient consultations. However, the blueprint also identified other important moments and stakeholders; most notably among these were the patients themselves and other individuals they engaged with, including their families and the hospital staff. By extension, the journey involved additional medical practitioners to whom clinicians would send extracts from the completed anamnesis and, more distantly, the UX designer and ML engineers responsible for designing the digital scribe. Although these members of the development team were not explicitly portrayed in the blueprint, they were nonetheless alluded to by the interface- and infrastructure-related processes laid out for the implementation of the new service.

Throughout this project, the basis for design decisions remained focused on clinician interactions with the digital scribe inside the consultation room. However, this is not to suggest that other journey moments or stakeholders, such as those identified above, were neglected during the design process. For instance, on one occasion the development team imagined a scenario in which a piece of hardware owned by clinicians (e.g., their smartphone’s microphone) malfunctioned. Beyond envisioning new software processes and screens that would allow users to recover from this error, the team explored the idea of establishing a “support channel” for clinicians to receive direct assistance from the provider of the digital scribe service. This consideration prompted the development team to reflect on the startup company’s existing competencies. In addition to mastering the design of AI technologies, they realized they might need to expand into customer relationship management as well, a move that would entail developing and controlling a range of novel sociotechnical resources. Alternatively, they could collaborate with a partner who specializes in that area. The discussion rapidly shifted to the value the startup was expected to provide, either within the current stakeholder network or within a new network that could be assembled to deliver an improved digital scribe service with the support channel option. As the issue demanded extensive time and strategic considerations outside of the scope of the UX design project, it was set aside for the time being.

This example illustrates how the blueprinting method framed AI as one organizational resource among many that could be intertwined in the co-creation of value among multiple service stakeholders. This framing allowed UX designers and ML engineers to reflect on their roles and skills, not just in relation to one another, but also with regard to other areas of expertise. Besides designing ML models and user interfaces, they recognized the additional resources needed to deliver an improved AI-enabled service. Moreover, they became more cognizant of the strategic value of their collective design competencies as these related to the startup company and its prospective customers (i.e., clinicians).

Discussion

Designing digital scribes for clinical consultations represents just one of many intriguing opportunities for implementing human-centric AI technologies in healthcare settings. In the case presented here, we developed a user interface for an AI-enabled digital scribe that integrates both ASR and NLP technologies. The resulting concept presents an original solution in which an anamnesis template allows for the partial automation of documentation while simultaneously accommodating clinician preferences for maintaining individual documentation styles and control over the final documentation details.

As this design project concluded following the conceptualization stage, any projections about the eventual successes or failures of the digital scribe in a real-world context must be made with due caution. Nonetheless, valuable insights can be drawn from the practical implementation of service blueprinting during the conceptual design stage. These are summarized below and provided as suggestions for improving team communication using this method.

Firstly, use the blueprinting method during the conceptual design stage to consolidate insights from user experience research and connect these to the underlying processes of related AI technologies. This can help create a common understanding about the design challenges facing the development team and refer specific challenges to the team members with the appropriate expertise. For example, interfacing service processes can be directed to UX designers while infrastructure issues can be handled by ML engineers, all while facilitating an integrated and collective approach. In addition, consider how knowledge about user actions in a given context (which is often uncovered by research carried out by UX designers) can enhance an understanding of the data feeding AI algorithms. Doing so can lay a foundation for improving existing AI systems in ways that had not been previously considered by ML engineers. Yildirim et al. (2022) discussed a similar case in which this method was used to represent data flows in human-centric AI design.

Secondly, aim to expand the representation of user journeys to address more than just interactions with single AI interfaces. Blueprinting provides an opportunity to identify and represent multiple stakeholders and a host of sociotechnical resources under their control that may be implicated in the co-creation of a new service. This is true even when a human-centric AI project does not initially appear to have an expansive network of organizations or complex user journeys across multiple service interfaces. By carefully reflecting on the categories of user and provider, it might be possible to better differentiate between stakeholder groups and the actual or potential roles they could play in the new service envisioned. Moreover, by considering actions beyond those immediately surrounding a single user interface, additional stakeholders and sociotechnical resources begin to emerge within an expanded co-creation network that can extend even to the designers themselves. Though it did not specifically occur in an AI context, Yoo et al. (2019) reported a similar case where service blueprinting was used to acknowledge designers as stakeholders who bring unique value to an innovation project, impacting outcomes.

Finally, our analysis sheds light on the potential use of the service blueprinting method for strategizing about AI innovation. Notably, this method can facilitate discussions among the development team and other stakeholders about topics such as organizational capabilities, collaboration networks, and the business value of AI expertise, among others, which may extend beyond the traditional scope of HCI-driven AI projects. Though it was not feasible to further explore this aspect in the present case study due to time constraints and other objectives, there are clear indications that a service perspective can expand current user- and experience-centered approaches to human-centric AI design.

UX designers are increasingly engaging with AI technologies, advancing their knowledge of this field, and adopting user-centered methods for AI innovation (Dove et al., 2017; Holmquist, 2017; Yang et al., 2018; Yildirim et al., 2022). Meanwhile, calls are ongoing for greater integration of service design and UX design approaches (Lee et al., 2022; Roto et al., 2021; Yoo et al., 2019; Zimmerman & Forlizzi, 2013) as service designers continue to find themselves involved in software- and AI-related projects (Leinonen & Roto, 2023; Yildirim et al., 2022). Our case study is perhaps the first to focus on and connect these streams of research within HCI by revealing how service blueprinting can complement UX designers’ current repertoire and enhance their collaborations with ML engineers.

Due to the exploratory nature of our research and the specific context of our design project (a startup company collaborating with a graduate student in the Dutch healthcare system), any generalizations from this case study should be made with care. We therefore invite researchers to further expand on this study’s findings. For instance, we welcome more rigorous development, implementation, and evaluation of service blueprinting across various human-centric AI innovation contexts. Such efforts could contribute to bringing the disciplines of HCI and service design closer together, furnishing UX designers with more sophisticated and validated methodologies for human-centric AI than those currently available.

Acknowledgments

We are grateful to all those involved in developing and supervising this design project and for the helpful feedback of Dirk Snelders, Hosana Morales Ornelas, Judith Rietjens, Jung-Joo Lee, Paula Melo, plus three anonymous reviewers, on earlier drafts of this paper.

References

- Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., Suh, J., Iqbal, S., Bennett, P. N., Inkpen, K., Teevan, J., Kikin-Gil, R., & Horvitz, E. (2019). Guidelines for human-AI interaction. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 3). ACM. https://doi.org/10.1145/3290605.3300233

- Bitner, M. J., Ostrom, A. L., & Morgan, F. N. (2008). Service blueprinting: A practical technique for service innovation. California Management Review, 50(3), 66-94. https://doi.org/10.2307/41166446

- Cai, C. J., Reif, E., Hegde, N., Hipp, J., Kim, B., Smilkov, D., Wattenberg, M., Viegas, F., Corrado, G. S., Stumpe, M. C., & Terry, M. (2019). Human-centered tools for coping with imperfect algorithms during medical decision-making. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 4). ACM. https://doi.org/10.1145/3290605.3300234

- Calisto, F. M., Santiago, C., Nunes, N., & Nascimento, J. C. (2021). Introduction of human-centric AI assistant to aid radiologists for multimodal breast image classification. International Journal of Human-Computer Studies, 150, Article 102607. https://doi.org/10.1016/j.ijhcs.2021.102607

- Coiera, E., Kocaballi, B., Halamka, J., & Laranjo, L. (2018). The digital scribe. Npj Digital Medicine, 1(1), Article 1. https://doi.org/10.1038/s41746-018-0066-9

- Davis, B. (2013). Agile practices for waterfall projects: Shifting processes for competitive advantage. J. Ross Publishing.

- Dove, G., Halskov, K., Forlizzi, J., & Zimmerman, J. (2017). UX design innovation: Challenges for working with machine learning as a design material. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 278-288). ACM. https://doi.org/10.1145/3025453.3025739

- Dugdale, D. C., Epstein, R., & Pantilat, S. Z. (1999). Time and the patient-physician relationship. Journal of General Internal Medicine, 14(1), S34-S40. https://doi.org/10.1046/j.1525-1497.1999.00263.x

- Fliess, S., & Kleinaltenkamp, M. (2004). Blueprinting the service company: Managing service processes efficiently. Journal of Business Research, 57(4), 392-404. https://doi.org/10.1016/S0148-2963(02)00273-4

- Forlizzi, J. (2010). All look same? A comparison of experience design and service design. Interactions, 17(5), 60-62. https://doi.org/10.1145/1836216.1836232

- Forlizzi, J. (2018). Moving beyond user-centered design. Interactions, 25(5), 22-23. https://doi.org/10.1145/3239558

- Gu, H., Huang, J., Hung, L., & Chen, X. “Anthony.” (2021). Lessons learned from designing an AI-enabled diagnosis tool for pathologists. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW1), Article 10. https://doi.org/10.1145/3449084

- Holmlid, S., & Evenson, S. (2008). Bringing service design to service sciences, management and engineering. In B. Hefley & W. Murphy (Eds.), Service science, management and engineering: Education for the 21st century (pp. 341-345). Springer Science+Business Media.

- Holmquist, L. E. (2017). Intelligence on tap: Artificial intelligence as a new design material. Interactions, 24(4), 28-33. https://doi.org/10.1145/3085571

- Jamal, N., Shanta, S., Mahmud, F., & Sha’abani, M. (2017). Automatic speech recognition (ASR) based approach for speech therapy of aphasic patients: A review. AIP Conference Proceedings, 1883(1), Article 020028. https://doi.org/10.1063/1.5002046

- Jeblee, S., Khan Khattak, F., Crampton, N., Mamdani, M., & Rudzicz, F. (2019). Extracting relevant information from physician-patient dialogues for automated clinical note taking. In Proceedings of the 10th international workshop on health text mining and information analysis (pp. 65-74). Association for Computational Linguistics. https://doi.org/10.18653/v1/D19-6209

- Joukes, E., Abu-Hanna, A., Cornet, R., & de Keizer, N. F. (2018). Time spent on dedicated patient care and documentation tasks before and after the introduction of a structured and standardized electronic health record. Applied Clinical Informatics, 9(1), 46-53. https://doi.org/10.1055/s-0037-1615747

- Kanda, N., Horiguchi, S., Fujita, Y., Xue, Y., Nagamatsu, K., & Watanabe, S. (2019). Simultaneous speech recognition and speaker diarization for monaural dialogue recordings with target-speaker acoustic models. In Proceedings of the IEEE automatic speech recognition and understanding workshop (Article No. 19380614). IEEE. https://doi.org/10.1109/ASRU46091.2019.9004009

- Kingman-Brundage, J., George, W. R., & Bowen, D. E. (1995). “Service logic”: Achieving service system integration. International Journal of Service Industry Management, 6(4), 20-39. https://doi.org/10.1108/09564239510096885

- Kocaballi, A. B., Ijaz, K., Laranjo, L., Quiroz, J. C., Rezazadegan, D., Tong, H. L., Willcock, S., Berkovsky, S., & Coiera, E. (2020). Envisioning an artificial intelligence documentation assistant for future primary care consultations: A co-design study with general practitioners. Journal of the American Medical Informatics Association, 27(11), 1695-1704. https://doi.org/10.1093/jamia/ocaa131

- Lee, J.-J., Yap, C. E. L., & Roto, V. (2022). How HCI adopts service design: Unpacking current perceptions and scopes of service design in HCI and identifying future opportunities. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 530). ACM. https://doi.org/10.1145/3491102.3502128

- Lee, M. K., & Forlizzi, J. (2009). Designing adaptive robotic services. https://minlee.net/materials/Publication/2009-IASDR-adaptive_robot_service.pdf

- Leinonen, A., & Roto, V. (2023). Service design handover to user experience design–A systematic literature review. Information and Software Technology, 154, Article 107087. https://doi.org/10.1016/j.infsof.2022.107087

- Lepri, B., Oliver, N., & Pentland, A. (2021). Ethical machines: The human-centric use of artificial intelligence. IScience, 24(3), Article 102249. https://doi.org/10.1016/j.isci.2021.102249

- Moggridge, B. (2007). Designing interactions. MIT Press.

- Morelli, N. (2003). Product-service systems, a perspective shift for designers: A case study: The design of a telecentre. Design Studies, 24(1), 73-99. https://doi.org/10.1016/S0142-694X(02)00029-7

- Quiroz, J. C., Laranjo, L., Kocaballi, A. B., Berkovsky, S., Rezazadegan, D., & Coiera, E. (2019). Challenges of developing a digital scribe to reduce clinical documentation burden. Npj Digital Medicine, 2(1), Article 1. https://doi.org/10.1038/s41746-019-0190-1

- Rahimi, B., Nadri, H., Lotfnezhad Afshar, H., & Timpka, T. (2018). A systematic review of the technology acceptance model in health informatics. Applied Clinical Informatics, 9(3), 604-634. https://doi.org/10.1055/s-0038-1668091

- Ritchie, J., & Lewis, J. (2003). Qualitative research practice: A guide for social science students and researchers. Sage.

- Rosenbloom, S. T., Denny, J. C., Xu, H., Lorenzi, N., Stead, W. W., & Johnson, K. B. (2011). Data from clinical notes: A perspective on the tension between structure and flexible documentation. Journal of the American Medical Informatics Association, 18(2), 181-186. https://doi.org/10.1136/jamia.2010.007237

- Roto, V., Lee, J.-J., Lai-Chong Law, E., & Zimmerman, J. (2021). The overlaps and boundaries between service design and user experience design. In Proceedings of the conference on designing interactive systems (pp. 1915-1926). ACM. https://doi.org/10.1145/3461778.3462058

- Sampson, S. E. (2012). Visualizing service operations. Journal of Service Research, 15(2), 182-198. https://doi.org/10.1177/1094670511435541

- Secomandi, F., & Snelders, D. (2011). The object of service design. Design Issues, 27(3), 20-34. https://doi.org/10.1162/DESI_a_00088

- Shostack, G. L. (1982). How to design a service. European Journal of Marketing, 16(1), 49-63. https://doi.org/10.1108/EUM0000000004799

- Shostack, G. L. (1984, January). Designing services that deliver. Harvard Business Review. https://hbr.org/1984/01/designing-services-that-deliver

- Sinsky, C., Colligan, L., Li, L., Prgomet, M., Reynolds, S., Goeders, L., Westbrook, J., Tutty, M., & Blike, G. (2016). Allocation of physician time in ambulatory practice: A time and motion study in 4 specialties. Annals of Internal Medicine, 165(11), 753-760. https://doi.org/10.7326/M16-0961

- Stacey, P. K., & Tether, B. S. (2015). Designing emotion-centred product service systems: The case of a cancer care facility. Design Studies, 40, 85-118. https://doi.org/10.1016/j.destud.2015.06.001

- Stickdorn, M., Hormess, M. E., Lawrence, A., & Schneider, J. (2018). This is service design doing: Applying service design thinking in the real world. O’Reilly Media.

- Yang, Q. (2017). The role of design in creating machine-learning-enhanced user experience. https://cdn.aaai.org/ocs/15363/15363-68257-1-PB.pdf

- Yang, Q., Banovic, N., & Zimmerman, J. (2018). Mapping machine learning advances from HCI research to reveal starting places for design innovation. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 130). ACM. https://doi.org/10.1145/3173574.3173704

- Yildirim, N., Kass, A., Tung, T., Upton, C., Costello, D., Giusti, R., Lacin, S., Lovic, S., O’Neill, J. M., Meehan, R. O., Ó Loideáin, E., Pini, A., Corcoran, M., Hayes, J., Cahalane, D. J., Shivhare, G., Castoro, L., Caruso, G., Oh, C., … Zimmerman, J. (2022). How experienced designers of enterprise applications engage AI as a design material. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 483). ACM. https://doi.org/10.1145/3491102.3517491

- Yoo, D., Ernest, A., Serholt, S., Eriksson, E., & Dalsgaard, P. (2019). Service design in HCI research: The extended value co-creation model. In Proceedings of the symposium on halfway to the future (Article No. 17). ACM. https://doi.org/10.1145/3363384.3363401

- Zeithaml, V. A., Bitner, M. J., & Gremler, D. D. (2008). Services marketing: Integrating customer focus across the firm. McGraw Hill.

- Zimmerman, J., & Forlizzi, J. (2013). Promoting service design as a core practice in interaction design. http://design-cu.jp/iasdr2013/papers/1202-1b.pdf

- Zimmerman, J., Tomasic, A., Garrod, C., Yoo, D., Hiruncharoenvate, C., Aziz, R., Thiruvengadam, N. R., Huang, Y., & Steinfeld, A. (2011). Field trial of Tiramisu: Crowd-sourcing bus arrival times to spur co-design. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1677-1686). ACM. https://doi.org/10.1145/1978942.1979187

Appendix