JourneyBot: Designing a Chatbot-driven Interactive Visualization Tool for Design Research

Soojin Hwang 1 and Dongwhan Kim 2,*

1 Technology Innovation, University of Washington, Seattle, WA, USA

2 Graduate School of Communication and Arts, Yonsei University, Seoul, Republic of Korea

Recent trends underscore the growing adoption of chatbots in user research due to their ability to connect with multiple users simultaneously, bypassing physical and time constraints. In this research, we introduce JourneyBot, a novel chatbot-driven interactive visualization tool that captures users’ experiences from medical clinic visits and displays their journey on a flexible and interactive platform. Particularly beneficial during periods of social distancing, JourneyBot also tackles broader challenges in user experience research, such as overcoming geographical barriers and ensuring inclusivity for participants confined to their homes. Our approach was two-fold: Initially, we crafted a rule-based chatbot to gather genuine feedback from users across various stages of their medical visits, subsequently portraying this on individual treatment journey maps. We then merged these individual maps to create a comprehensive and holistic interactive visualization, assisting user experience designers in promptly pinpointing user challenges and emotional shifts during their clinic experiences. Our findings highlight JourneyBot’s ability to improve research techniques and its considerable potential for the UX community.

Keywords – Chatbot, Journey Map, Interactive Visualization, Design Tool, User Experience Design.

Relevance to Design Practice – The introduction of JourneyBot, a chatbot-driven tool capturing user experiences during medical clinic visits, offers design professionals a novel and streamlined methodology. This solution enables rapid, remote, and simultaneous data collection and visualization, assisting in highlighting user obstacles and emotional changes, ultimately enriching the design process.

Citation: Hwang, S., & Kim, D. (2023). JourneyBot: Designing a chatbot-driven interactive visualization tool for design research. International Journal of Design, 17(3), 95-110. https://doi.org/10.57698/v17i3.06

Received March 24, 2022; Accepted October 2, 2023; Published December 31, 2023.

Copyright: © 2023 Hwang & Kim. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content is open-accessed and allowed to be shared and adapted in accordance with the Creative Commons Attribution 4.0 International (CC BY 4.0) License.

*Corresponding Author: dongwhan@yonsei.ac.kr

Soojin Hwang is a technical product designer currently shaping generative AI-driven products at T-Mobile digital innovation lab. With a foundation in visual design and UX, and holding Master’s degrees in Technology Innovation from the University of Washington and Design Intelligence from Yonsei University, Soojin combines emerging technologies such as artificial intelligence, AR, and VR with user experience design to craft impactful digital solutions. She is deeply involved in innovative design projects and consistently advocates for user-centered approaches across various digital platforms.

Dongwhan Kim is an associate professor at the Graduate School of Communication and Arts, Yonsei University. He earned a M.S. in Human-Computer Interaction from Carnegie Mellon University and a Ph.D. in Communication from Seoul National University. His distinct blend of studies in computers, information design, and human behavior has led him to specialize in areas such as HCI, social computing, computational journalism, user experience and interaction design, and information visualization. He directs the Design & Intelligence Lab at Yonsei, emphasizing the integration of design principles with computational techniques to spur innovation in diverse research fields.

Introduction

Designers use various methodologies to conduct design research with potential and existing users, aiming to develop new services or improve existing practices. Understanding users’ behavioral patterns, experiences, and perceptions is crucial to pinpointing and addressing the right problems (Garrett, 2010; Goodman et al., 2013; Goodwin, 2011; Krüger et al., 2017). Despite the rich methodological opportunities, executing user research in real-world settings can be challenging. Often, user research demands substantial time and cost (Abras et al., 2004; Cooper et al., 2014). Because of these costs and the unpredictability of successful design outcomes, user research is occasionally streamlined or bypassed in practice (Goodwin, 2011).

However, technological advancement has increasingly made chatbots an efficient tool for data collection and analysis (Adiwardana et al., 2020; Gupta et al., 2019; Kim et al., 2019; Lee et al., 2019; Li et al., 2017; Luger & Sellen, 2016; Tallyn et al., 2018; Xiao, Zhou, Chen et al., 2020). Kim et al. (2019) demonstrated that chatbots can gather high-quality data by stimulating more active user participation. Additionally, a study by Vaccaro et al. (2018) depicted a chatbot taking over the designer’s user research process through automated analysis of conversation syntax structures. Such studies indicate chatbots’ potential in saving the time and resources of design professionals by automating user feedback collection.

In our study, we crafted JourneyBot, a chatbot aimed at aiding designers in identifying and addressing core issues through actual user conversations. JourneyBot is tailored to carry out user interviews and distill these dialogues into actionable insights. While many studies have delved into the technology-driven potentials of research tools, our initiative stands out by offering a tool that amalgamates user journeys into an interactive, tailored visualization. Crafting such visualizations is often labor-intensive (Thomas & Cook, 2006), yet interactive visual aids are known to assist designers in gleaning crucial takeaways, even from massive datasets (Keim et al., 2008; Keim et al., 2010). In design research, the volume of user data grows rapidly with the number of participants, which often leads researchers to involve fewer participants in studies. JourneyBot’s capability to consolidate multiple journey maps into one cohesive interactive visualization promises a more efficient exploration of extensive user data, thus enhancing the extraction of insights.

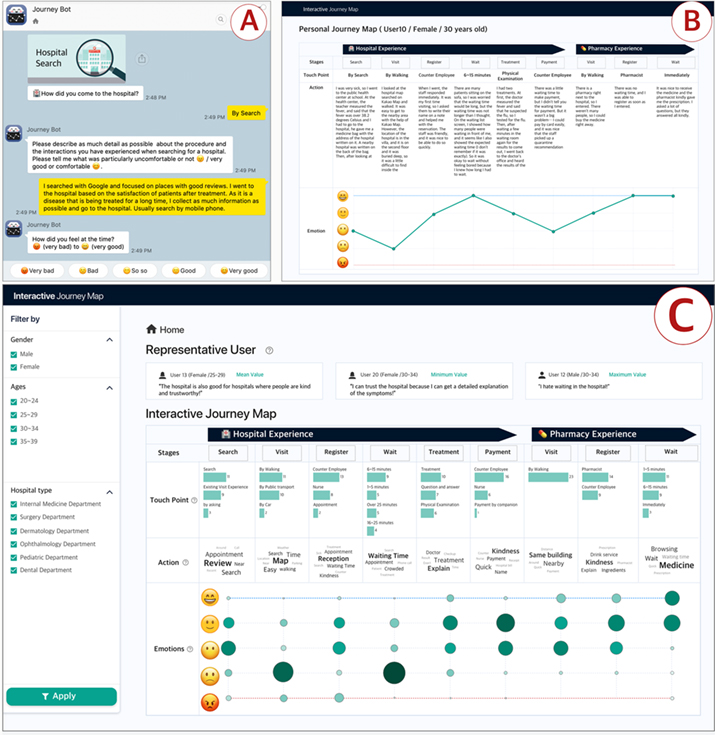

This study is structured in two phases. Initially, we designed JourneyBot to record users’ behaviors and emotions during medical clinic visits and treatments via chatbot interactions (Figure 1A). The medical visit process mirrors the general healthcare routine in Korea. In South Korea, where our research took place, the consistency in clinic visits can be largely attributed to comprehensive health insurance coverage (Song, 2009). After gathering the conversational data, JourneyBot rendered a visual representation of each participant’s journey from their most recent clinic visit (Figure 1B). These visualizations were then evaluated for accurate interpretation and depiction of chatbot conversations. Subsequently, we combined all the individual user journeys into an interactive map, allowing designers a multifaceted data exploration (Figure 1C).

Figure 1. The three-step research process with JourneyBot. (A) Gathering user feedback via conversations with JourneyBot, (B) Transforming text data into an individual user’s journey map, (C) Merging all users’ journeys into an interactive visualization to uncover design insights.

Related Works

Discovering and Defining Problems

The British Design Council’s double diamond model is a cornerstone in the design process, with widespread recognition for its utility to designers (Bicheno & Holweg, 2000; Tschimmel, 2012). Its first two phases, discover and define, actively engage designers in probing users’ challenges and subsequently refining these into clear design problems to address (Design Council, 2015). The primary objective of this initial segment is to equip designers to deeply understand specific user group needs and formulate the optimal solutions in the following phases (Gibbons, 2019).

Designers employ a variety of methods, such as contextual interviews and focus groups, to deeply understand users’ needs. Contextual interviews involve designers engaging with users in their own work settings, ensuring a genuine understanding of their context-specific needs (Beyer & Holtzblatt, 1999; Stickdorn & Schneider, 2011). In contrast, focus groups act as platforms to gather initial feedback, validating service concepts within more contrived or temporary settings (Litosseliti, 2003; Morgan, 1996). While these techniques excel in capturing genuine user responses (Fern, 2001), they sometimes miss a holistic perspective on potential service usage (Goodwin, 2011; Nyumba et al., 2018).

Identifying critical problem areas often relies on insights gathered through methodologies such as personas and journey maps. A persona is a composite portrayal of a user archetype, crafted from the observed behaviors and intentions of potential and actual users (Cooper et al., 2014; Pruitt & Adlin, 2010). Though this method plays a pivotal role in guiding a goal-directed design process, recent critiques challenge its effectiveness, highlighting concerns such as perpetuating stereotypes (Marsden & Haag, 2016) and not always capturing the depth of real user behaviors (Chapman & Milham, 2006). Moreover, in our data-centric era, its continued relevance is a topic of debate (Salminen et al., 2018). Meanwhile, a journey map provides a visual representation of a user’s interaction with a service. It breaks down the user experience into discrete stages, meticulously documenting each interaction, touchpoint, and emotion encountered throughout (Dove et al., 2016).

While methodologies ranging from interviews to journey maps offer deep insights into the factors influencing user experiences, challenges such as cumbersome data collection, cost constraints, and the intensive labor of production often inhibit their full utilization in real work settings (Goodwin, 2011; Gould et al., 1991; Zimmerman et al., 2007). However, recent advancements in computational technologies are introducing a suite of approaches with the potential to enhance or even replace traditional user research techniques.

Computational Approaches to User Research

Recently, text-based chatbots have gained attention in design research as a valuable data collection tool (Kim et al., 2019; Xiao, Zhou, Liao et al., 2020). Tallyn et al. (2018) utilized such a chatbot to gather ethnographic data from users. While chatbots have found applications across diverse domains, their aptitude in eliciting information is particularly noteworthy (Xiao, Zhou, Chen et al., 2020; Xiao et al., 2019; Zhou et al., 2019). For example, Vaccaro et al. (2018) employed a machine learning-equipped chatbot to discern consumer preferences and subsequently align them with online fashion stylists. By seamlessly capturing fashion nuances, the chatbot streamlined data for stylists, ensuring prompt and informed decision-making. Moreover, Kim et al. (2019) compared the effectiveness of chatbots against web surveys, advocating for the former’s superior capacity in extracting quantitative data.

While many studies have introduced innovative techniques to enhance efficiency and overcome time and cost barriers inherent in traditional methods, it is still designers’ responsibility to conduct thorough analyses derived from the amassed research data. Meanwhile, the surge in web services tailored to assist in user research is evident. For instance, Beusable offers insights into user behaviors via clickstreams and browser logs. UXPressia and Smaply, on the other hand, have developed digital tools to craft customer journey maps that are shaped by collaborative feedback from users. Yet, a gap persists: these tools often stumble when integrating intricate visualization with detailed interview and survey data analysis—a process that is particularly resource-demanding. To address this gap, this study proposes a tool designed to streamline the design process by autonomously collecting, analyzing, and rendering user data into an interactive visualization. Central to our strategy are two integral components:

- The creation of an autonomous agent capable of sourcing user quotes through natural conversations and transforming individual textual inputs into illustrative journey maps.

- The design and assessment of an interactive visualization platform that consolidates all user journeys into an interactive map, empowering designers with actionable insights.

Phase 1: Developing an Autonomous Agent for Data Collection and Visualization

Designing JourneyBot

In the first phase, we developed JourneyBot, an autonomous agent designed to play the role of a UX researcher. Its primary mission was to engage participants in conversations about their clinical experiences through intuitive, text-based dialogues. We meticulously designed JourneyBot’s conversational architecture by taking into account the idiosyncrasies of the Korean healthcare system. In Korea, universal and consistent health insurance coverage grants patients freedom in choosing their healthcare providers (Song, 2009). This freedom laid the foundation for our delineation of standardized phases associated with clinical visits.

We commenced our approach by administering a survey, the aim of which was to spotlight recurring trends during medical clinic visits. With insights drawn from this survey, we calibrated JourneyBot’s conversational structure to mirror these patterns. We then segmented a typical clinic visit into nine sequential stages: (1) Searching for a clinic, (2) Visiting the clinic, (3) Registering at the reception area, (4) Waiting to see the doctor, (5) Receiving treatment, (6) Paying the bill, (7) Going to a nearby pharmacy, (8) Dropping off the prescription, and (9) Taking the medicine.

JourneyBot is equipped to gather data on touchpoints within each stage, capturing users’ interactions with the people or objects they encounter, their actions, and their emotional states. It then probes with follow-up questions, seeking a deeper understanding and more detailed insights. Through this detailed and structured dialogue, JourneyBot aims to identify service pain points, bridge service gaps, and highlight opportunities to refine the healthcare experience. The essence of JourneyBot can be distilled into five core elements:

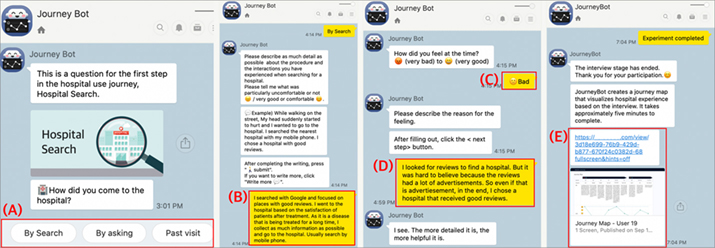

- (A) Touchpoint: These are pivotal interactions with entities or processes throughout the user’s journey. JourneyBot assimilates these experiences either through multiple-choice selections or open-ended user narrations (Figure 2A).

- (B) Action: This refers to the activities users undertake at each step of their clinic visit. JourneyBot encourages comprehensive descriptions by posing scenario-based questions and providing sample answers for guidance (Figure 2B).

- (C) Emotional state: This gauges the emotional resonance tied to every phase. Users quantify their sentiments on a five-point Likert scale, mapping emotions from the most negative to the most positive for each corresponding stage (Figure 2C).

- (D) Emotional statement: Beyond gauging sentiment, JourneyBot nudges users to articulate a specific rationale for their emotional rating at any given stage (Figure 2D). These articulations are then showcased as pop-ups within the journey map visuals.

- (E) Link to journey map: Post-dialogue, JourneyBot visualizes the user’s narrative into a journey map, sending a verification link to users for validating the depicted journey (Figure 2E).

Figure 2. JourneyBot’s process in nine stages. (A) Touchpoint, (B) Action, (C) Emotional state, (D) Emotional statement, (E) Link to journey map.
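The nine stages and the elements collected at each stage suggest a simple per-stage record. The following is a minimal sketch of such a record, not the authors' actual schema: the class names `StageResponse` and `Journey`, the field names, and the coding of the five-point emotional rating as integers 1 (most negative) to 5 (most positive) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# The nine stages of a clinic visit, in the order given in the text.
STAGES = [
    "Searching for a clinic",
    "Visiting the clinic",
    "Registering at the reception area",
    "Waiting to see the doctor",
    "Receiving treatment",
    "Paying the bill",
    "Going to a nearby pharmacy",
    "Dropping off the prescription",
    "Taking the medicine",
]


@dataclass
class StageResponse:
    """One participant's answers for one stage (hypothetical schema)."""
    stage: str
    touchpoints: List[str]   # (A) multiple-choice or free-text touchpoints
    action: str              # (B) free-text description of what the user did
    emotion: int             # (C) assumed coding: 1 = most negative, 5 = most positive
    emotion_statement: str   # (D) stated reason for the rating

    def __post_init__(self) -> None:
        if self.stage not in STAGES:
            raise ValueError(f"unknown stage: {self.stage!r}")
        if not 1 <= self.emotion <= 5:
            raise ValueError("emotion must be between 1 and 5")


@dataclass
class Journey:
    """All stage responses of a single participant."""
    participant_id: str
    responses: List[StageResponse] = field(default_factory=list)

    def emotion_curve(self) -> List[Optional[int]]:
        """Emotion rating per stage in journey order; None where unanswered."""
        by_stage = {r.stage: r.emotion for r in self.responses}
        return [by_stage.get(stage) for stage in STAGES]
```

A record of this shape would be enough to draw the emotion line of an individual journey map and to show the emotional statement as a pop-up at each stage.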

How JourneyBot Works

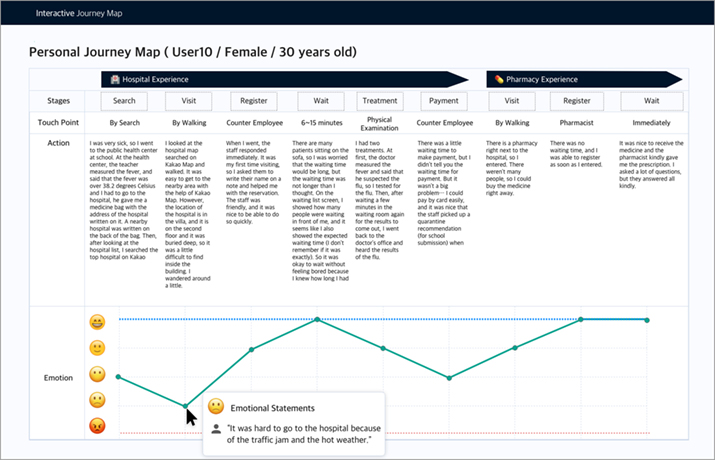

JourneyBot was built on the Kakao Open Builder platform and is integrated with KakaoTalk, the leading messaging service in Korea. Once registered with the KakaoTalk Administration Center, the chatbot can record conversations with users. Using this conversational data, JourneyBot fills in the necessary details on a journey map. The conversation data, stored in the Kakao database, is subsequently converted into a web-based interactive visualization (Figure 3). After the conversation concludes, users receive a link guiding them to the map. This allows them to confirm whether the chatbot accurately captured their text inputs during the dialogue.

Figure 3. A sample journey map created after a user participated in a conversation session with JourneyBot.

Method

Participants

Participants were recruited through announcements on social media platforms and academic community websites. The eligibility criteria included individuals aged 20 years and above who had received clinical treatment and visited pharmacies in the past month. Familiarity with the KakaoTalk messenger was a prerequisite, given its role in the study’s interaction with the chatbot. From this process, we recruited 24 participants, comprising 9 males and 15 females. Their ages ranged from 24 to 38 years, with a mean age of 31 years. The participants came from a variety of professional backgrounds, encompassing fields such as development, biosciences, business, and academia. While some possessed familiarity with design thinking and journey mapping, others were being introduced to these concepts for the first time. All interviews were recorded and transcribed upon receiving participants’ consent. After the experiment, each participant was compensated with $10 in cash for their participation.

Procedure

Before the experiment commenced, a preliminary survey was administered to gather demographic data and gauge participants’ prior experience with chatbots. Participants were informed about their rights, the experimental procedures, and their option to withdraw at any stage. The study was conducted remotely via web conferencing tools like Zoom and Google Meet. Participants initiated conversations with JourneyBot using the KakaoTalk messenger on their personal computers while concurrently sharing their chat screen with the researcher. After the chat session with JourneyBot, participants were asked to examine and navigate the journey map generated from their chat data. At the conclusion of the experimental procedure, participants were interviewed about their experiences with JourneyBot and then asked to fill out questionnaires evaluating the system’s perceived usability.

Measures

To assess participants’ engagement with JourneyBot, we employed both quantitative and qualitative methods. Quantitatively, we gauged the user experience of the journey map using the System Usability Scale (SUS). This widely recognized measure is known for yielding reliable outcomes with minimal question misinterpretation (Sauro & Lewis, 2011). The SUS questionnaire comprises ten items rated on a five-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).
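The text does not spell out how the ten item ratings become a single score. As a sketch, the standard SUS scoring procedure can be written as follows: odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a value between 0 and 100. The function name `sus_score` is chosen here for illustration.

```python
def sus_score(ratings):
    """Compute one participant's SUS score from ten ratings (1 to 5).

    Items are taken in questionnaire order: positions 1, 3, 5, 7, 9 are the
    positively worded items, positions 2, 4, 6, 8, 10 the negatively worded ones.
    """
    if len(ratings) != 10:
        raise ValueError("SUS requires exactly ten item ratings")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("each rating must be between 1 and 5")

    total = 0
    for position, rating in enumerate(ratings, start=1):
        if position % 2 == 1:
            total += rating - 1   # positively worded item
        else:
            total += 5 - rating   # negatively worded item
    return total * 2.5


# Example: a participant who is neutral on every item.
print(sus_score([3] * 10))
```

The study-level figure is then the mean of the per-participant scores.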

Qualitatively, we conducted 30-minute interviews with participants, delving into their interactions and experiences with JourneyBot. While the precise interview questions were tailored to individual experiences, they consistently centered on users’ interactions with the chatbot, their overall user experience, and their perceptions of the resulting journey map (see Table 1 for sample questions).

Table 1. Sample questions aimed at evaluating user experience and expert assessment of JourneyBot interactions and visualization.

| For the actual users (recent clinic visitors) | Additional questions for experts |
| --- | --- |

After the interviews, we initiated a bottom-up thematic analysis to extract insights from participants’ feedback. Thematic analysis entailed detailed coding of the transcripts, pinpointing dominant themes and patterns. This qualitative exploration complemented the SUS’s quantitative data, bringing to light subtle facets of user experience that the questionnaire might have overlooked. This approach established a robust foundation for a thorough grasp of user engagements with JourneyBot and the utility of the produced journey maps.

Results and Discussion

SUS Score

The usability test results for the chatbot-based interview suggest that JourneyBot is an acceptable system, garnering an average SUS score of 78.3 (SD = 15.4). As per Bangor et al. (2009), scores below 64 are deemed unacceptable, those between 65 and 84 are acceptable, and those 85 and above are excellent. JourneyBot’s score situates it comfortably in the acceptable bracket, showcasing its commendable usability.

Participants particularly appreciated JourneyBot’s consistency and intuitive nature, noting they could employ it without any additional learning. This feedback aligns with interview outcomes, which highlighted JourneyBot’s potential as a dependable and uncomplicated research tool. Importantly, the findings spotlight JourneyBot’s capacity to streamline the discovery and definition phases of design. Its ability to concurrently and remotely collect user data provides a swift and efficient method to amass user experiences, thereby condensing these foundational design stages.

JourneyBot’s capability to swiftly visualize user journeys emphasizes its merit in design research, bolstering its relevance in the domain. The affirmative SUS scores and patterns observed in our research convey that JourneyBot not only meets usability standards but also offers substantial advantages in remote and simultaneous user data acquisition.

Interview Findings

The following implications were derived from the interviews with participants.

Facilitates Scalable Participant Recruitment without Time or Geographical Limitations

Many participants noted that their interactions via JourneyBot were remarkably accessible and free from time and location restrictions. Significantly, JourneyBot can be invaluable during situations like pandemic outbreaks (such as COVID-19), when traditional in-person user research becomes challenging.

It is highly convenient that I can participate regardless of the location and without seeing anyone in person, especially during a (pandemic) crisis like COVID-19. (U2)

Therefore, this research methodology offers scalability and adaptability, especially when engaging with geographically dispersed participants.

It seems to be a device that can supplement (traditional) surveys and interviews for those who have difficulty with speaking. If I use JourneyBot for an interview, I can do it at any time, whenever I feel convenient, whether at night or in the morning. (U22)

Affords Participants Enough Time for Reflection and Response Organization

One of the standout benefits of JourneyBot is that it provides participants the freedom to answer thoughtfully, allowing them sufficient time to contemplate and articulate.

It was nice that I could write down the things I would talk about while thinking, edit my answers if needed, and have an interview at my own pace. (U19)

While protracted silences can be uncomfortable in person-to-person interactions, this is not an issue with a chatbot. This ability for research participants to deeply reflect and systematically structure their thoughts can be particularly beneficial in chatbot-led research.

When I am in front of a person, I often feel like I need to say something quickly, but with a chatbot, I can recount my experiences without feeling hurried. (U13)

Encourages Candid Responses Compared to Interactions with Human Researchers

Several participants noted their increased comfort in expressing honest feelings when conversing with a chatbot rather than a human interviewer. The inherent pressure to be mindful of another person’s feelings can sometimes curb complete honesty. However, this apprehension is mitigated with a chatbot, given that there is no emotional response or reaction to consider.

During interviews, I tend not to speak too honestly because I care about the person who is listening. However, since it is a chatbot that does not have emotions, I was able to talk more honestly. (U9)

To a chatbot, I was able to talk about my inner feelings and all the negative comments I had. (U21)

Many participants highlighted the ease of engaging in regular conversations with the chatbot as a distinct advantage. They emphasized that conversing with unfamiliar individuals can occasionally induce stress. As one participant articulated, “Interacting with strangers often makes me uneasy, but with the chatbot, there is no in-person pressure. It feels like an everyday chat” (U21). This sentiment mirrors the findings of Lee et al. (2020), who observed that dialogues with non-human entities tend to lower conversational barriers.

Requires Improved Convenience for Extended Responses

When providing extensive narrative answers to the chatbot’s questions, participants voiced a desire for more user-friendly response methods. They found the task of reading the chatbot’s textual questions and subsequently typing out lengthy responses to be somewhat cumbersome.

If I have participated via a mobile phone, it might have been difficult to write long. And especially for the elderly. If I talk in person, I would just talk excitedly, but with the chatbot, I have to type myself. (U10)

If a person is familiar with chatbots, it will not be too difficult to use. But many clinic users are older, and it is making chatbot interaction less intuitive. (U22)

Moreover, participants shared potential solutions to enhance the chatbot’s interactive experience. One participant mentioned, “I think it would be nice if it can read them out when asking questions” (U5). Another added, “It would be convenient if it can help write words with automatic word completion function” (U3), suggesting the need for a method that could decrease participants’ fatigue in having to type everything themselves. A different participant highlighted, “Because the chatbot does not have any response to my answers, it is somewhat comfortable. On the other hand, I wondered if I was doing well, and I felt quite bored” (U19). Such feedback underscores the potential benefit of incorporating features that emulate a more dynamic, human-like conversation.

Visualization Bolsters Trust in Objective Data Analysis

One of JourneyBot’s distinguishing features, as highlighted by participants, is its ability to ensure that their input is not skewed by the subjective biases of the researcher. Participants felt reassured that their statements and intentions were captured authentically. The direct presentation of their responses as data in the form of a journey map immediately post-interview further reinforced this trust.

It seemed that the chatbot was trying to ask as objectively as possible by dividing emotion and mood into five levels. I was always worried that I would not explain my feelings correctly when interviewing in person, but I do not have that concern with this chatbot. (U17)

Furthermore, participants appreciated the journey map’s ability to demystify and structure their abstract emotions. They regarded the chance to look back on their own experiences and organize those emotions as a distinct advantage of the journey map visualization.

In the past, I did not know the standards of a good clinic, but through this (journey) map, I learned what elements I consider important for choosing a clinic. (U9)

Suppose these kinds of journey maps are accumulated. In that case, I guess this can analyze my tendencies and recommend new services to me, referring to my journeys. (U21)

Phase 2: Designing an Interactive Journey Map as a Tool for Design Research

Designing an Interactive Journey Map

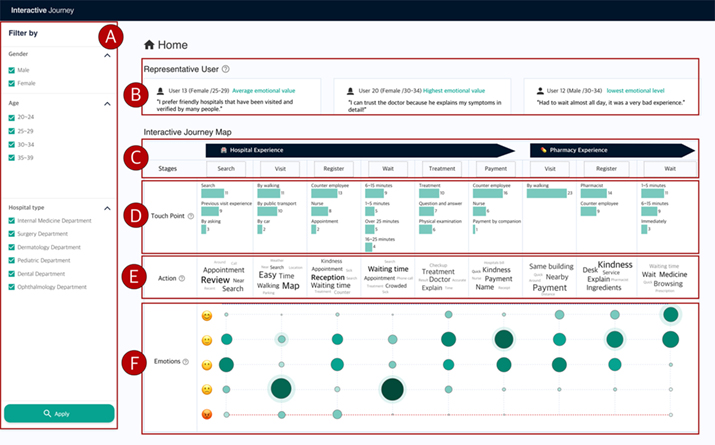

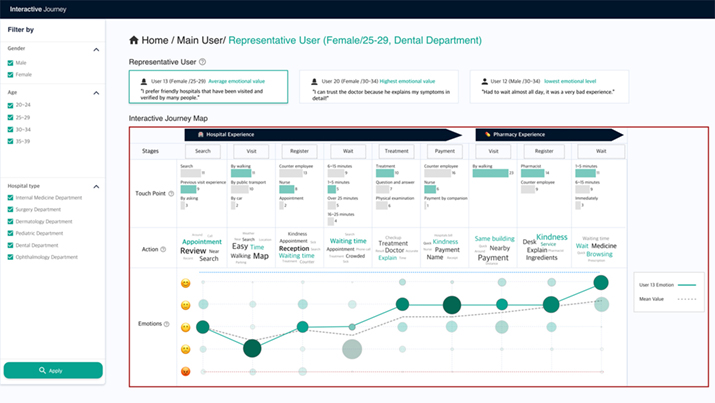

In the initial phase of our study, JourneyBot gathered conversational data from participants and transformed this textual data into individualized journey maps. In the second phase, we enhanced JourneyBot’s capabilities to amalgamate all these individual journeys into a unified interactive map (Figure 4). This comprehensive map presents the research outcomes through diverse visualization techniques, including bar graphs, word clouds, and bubble charts. A distinct feature of this map is its ability to delve deeper into specific segments of the user journey through a mouse-hover interaction. For example, the size of a bubble within the ‘emotion’ segment indicates the number of users who selected a specific emotional rating. Hovering over this bubble reveals a dialog box containing granular data, showing which participants opted for that emotional score and the corresponding statements they provided for that particular journey phase. In essence, this enhanced tool equips researchers with the means to not only gain a holistic view of the research outcomes but also to scrutinize individual participant data. Its overarching goal is to pinpoint and articulate the critical challenges that user research needs to address.

(A) Filtering: The tool offers a dynamic filtering mechanism, allowing for granular data examination. Filters can categorize the complete dataset based on demographics like gender, age, or specific clinics frequented. Activating a filter reshapes the visualization of touchpoints, key action terms, and emotional states, tailoring them to the subset of participants selected. Correspondingly, the depiction of the “representative user” adjusts in line with the filtered data (Figure 4A).

Figure 4. The main page of the interactive journey map. (A) Filtering options, (B) Representative users, (C) Stages of the medical treatment journey, (D) Touchpoints as bar graphs, (E) Actions that are collections of high-frequency words, and (F) Emotional states at each stage using bubble visualization.

(B) Representative User: The platform identifies ‘representative’ participants by examining patterns in the first study’s data (Figure 4B). There are three types of representative users. The first is a user whose emotional scores align closely with the group’s average across the journey map stages (User 13 in Figure 4B). The fluctuations in this user’s emotions provide a representative snapshot of the typical patient experience throughout the care process. The second is the participant who registered the highest emotional scores (User 20 in Figure 4B). Conversely, the third is the user who consistently gave the lowest emotional scores across the stages of the clinic visit (User 12 in Figure 4B). Together, these users capture the full spectrum of patient satisfaction during their most recent clinic visit, reflecting the range of experiences one might encounter. Clicking on any of these users highlights their responses and emotional ratings on the interactive map (Figure 5). Such insights offer a closer look at individual users’ medical journeys.
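The selection of the three representative users can be illustrated with a short sketch. The text does not state the exact criteria, so the measures used here are assumptions: closeness to the group average is taken as the sum of absolute differences from the per-stage mean, and the highest and lowest users are taken by total score. The function name `representative_users` and the input layout are likewise hypothetical.

```python
from statistics import mean


def representative_users(scores):
    """Pick three representative participants from per-stage emotion scores.

    `scores` maps a participant ID to a list of emotion ratings, one per stage,
    with every list the same length. Ties go to the first participant in
    dictionary order.
    """
    if not scores:
        raise ValueError("no participants given")
    n_stages = len(next(iter(scores.values())))
    if any(len(ratings) != n_stages for ratings in scores.values()):
        raise ValueError("all participants need one rating per stage")

    # Group average at each stage.
    stage_means = [mean(ratings[i] for ratings in scores.values())
                   for i in range(n_stages)]

    def distance_to_average(pid):
        # Assumed closeness measure: sum of absolute per-stage deviations.
        return sum(abs(r - m) for r, m in zip(scores[pid], stage_means))

    return {
        "average": min(scores, key=distance_to_average),
        "highest": max(scores, key=lambda pid: sum(scores[pid])),
        "lowest": min(scores, key=lambda pid: sum(scores[pid])),
    }


# Toy data with three stages instead of nine.
example = {"U1": [3, 3, 3], "U2": [5, 5, 4], "U3": [1, 2, 1]}
print(representative_users(example))
```

When a filter is active, the same selection would simply be rerun on the filtered subset of participants, which matches the behavior described for the filtering option.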

(C) Stage: Conversations facilitated by JourneyBot help segment the entire patient journey into nine distinct phases (Figure 4C). The initial six segments revolve around clinic visits and treatments, while the succeeding trio pertains to prescription receipt and medication retrieval from drugstores. These segments echo the conventional care-receiving trajectory observed in local Korean clinics, insights gleaned from a pilot survey preceding the main study. Nevertheless, the tool’s flexibility ensures designers can recalibrate the phase count to better resonate with their specific user research milieu.

Figure 5. The selected representative user’s data is highlighted on the interactive map.

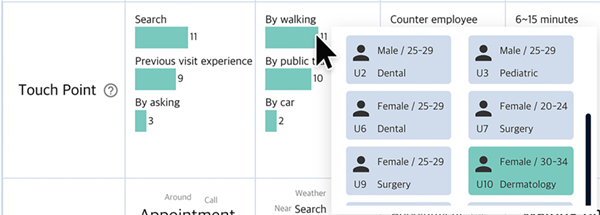

(D) Touchpoint: The visualization summarizes the touchpoint experiences that JourneyBot collected from participants during the first study. The bar graph representation (Figure 4D) shows the frequency of these experiences at each touchpoint. Length was chosen as the visual encoding because it yields consistently low error rates in quantitative comparisons (Cleveland & McGill, 1986). This choice was also grounded in the study of Heer and Bostock (2010), which underscores its efficacy for conveying quantitative information. To support closer inspection, an interactive hover-over feature has been embedded. As illustrated in Figure 6, hovering over any bar brings up a pop-up showing the individual participant data behind that specific bar, facilitating an in-depth yet uncluttered data exploration (Van Wijk, 2013).

Figure 6. Mouse hovering on a bar shows the collection of individual participants who selected each particular touchpoint during conversation with JourneyBot.

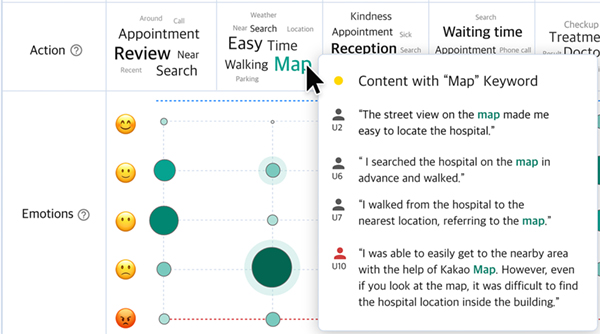
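The data behind the touchpoint bars and their hover pop-ups can be thought of as a simple index from each touchpoint to the participants who selected it. The sketch below illustrates that idea only; the function name `touchpoint_index`, the touchpoint labels, and the sample selections are hypothetical and not taken from the study data.

```python
from collections import defaultdict


def touchpoint_index(selections):
    """Group participants by the touchpoint they selected.

    selections: iterable of (participant_id, touchpoint) pairs.
    Each participant is listed at most once per touchpoint.
    """
    by_touchpoint = defaultdict(list)
    for participant, touchpoint in selections:
        if participant not in by_touchpoint[touchpoint]:
            by_touchpoint[touchpoint].append(participant)
    return dict(by_touchpoint)


# Hypothetical selections gathered during chatbot conversations.
selections = [
    ("U1", "reception desk"),
    ("U2", "reception desk"),
    ("U1", "kiosk"),
    ("U2", "reception desk"),  # repeated mention, counted once
]
index = touchpoint_index(selections)

# Bar length encodes how many participants selected each touchpoint;
# the list behind each bar is what a hover pop-up would display.
bar_lengths = {touchpoint: len(users) for touchpoint, users in index.items()}
print(bar_lengths)
```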

(E) Action: This visualization extracts key insights from the participants’ feedback through morpheme analysis and presents them as a word cloud (Figure 4E). Word clouds are recognized for highlighting prevalent terms (Heimerl et al., 2014): the size of each term reflects its frequency in dialogues with JourneyBot, facilitating instant recognition of predominant themes. The design combines user-centric design principles with established data representation strategies (Van Wijk, 2013; Cleveland & McGill, 1986). The word cloud is also interactive: hovering over any term brings up the associated dialogue and the participant who wrote it (Figure 7). This feature keeps the visualization uncluttered while providing deeper context for each keyword.

Figure 7. By hovering the cursor on the word cloud, designers can check the conversational data containing each keyword.

A layer of sentiment analysis augments this interactive design. Negative sentiments are visually earmarked with a distinct red icon, exemplified by the feedback from participant U10 in Figure 7. Furthermore, clicking on the user icon or text will open the participant’s individual journey map, allowing for a more in-depth exploration of individual user experiences (Munzner, 2009). The decision to use circular layouts in our word clouds draws on research findings demonstrating their efficacy in displaying high-frequency terms (Heimerl et al., 2014).

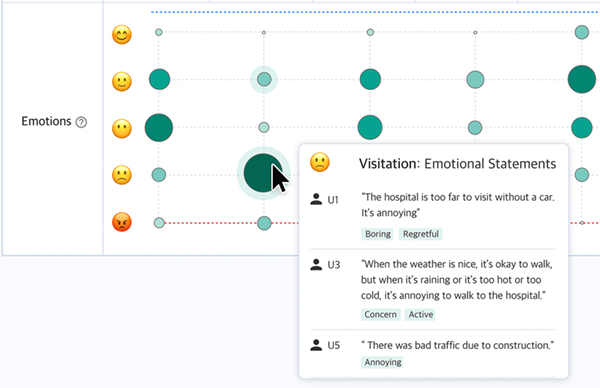
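The frequency-to-size mapping and the keyword-to-dialogue lookup described for the Action word cloud can be sketched as follows. This is an illustrative approximation: a simple lowercase word split stands in for the morpheme analysis used in the study, and the names `build_keyword_index` and `font_size`, the linear size scaling, the point sizes, and the sample utterances are all assumptions.

```python
import re
from collections import Counter, defaultdict


def build_keyword_index(utterances, stopwords=frozenset()):
    """Count keywords and remember which utterances contain them.

    utterances: list of (participant_id, text) pairs.
    stopwords: set of lowercase words to ignore.
    Returns (counts, contexts), where contexts maps a keyword to the
    (participant_id, text) pairs shown when hovering over that keyword.
    """
    counts = Counter()
    contexts = defaultdict(list)
    for participant, text in utterances:
        # Stand-in tokenizer; the study used morpheme analysis instead.
        tokens = [t for t in re.findall(r"[a-z']+", text.lower()) if t not in stopwords]
        counts.update(tokens)
        for token in set(tokens):
            contexts[token].append((participant, text))
    return counts, dict(contexts)


def font_size(count, max_count, min_pt=12, max_pt=48):
    """Scale a keyword's frequency linearly to a font size in points."""
    if max_count <= 1:
        return max_pt
    return min_pt + (max_pt - min_pt) * (count - 1) / (max_count - 1)


# Hypothetical utterances.
utterances = [
    ("U10", "The map app showed the wrong clinic"),
    ("U4", "I used a map to find the pharmacy"),
]
counts, contexts = build_keyword_index(utterances, stopwords={"the", "i", "a", "to"})
largest = max(counts.values())
sizes = {word: font_size(n, largest) for word, n in counts.items()}
print(sizes["map"], contexts["map"])
```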

(F) Emotion Evaluation: This visualization captures participants’ emotional assessments across different stages, scaled from very bad to very good (Figure 4F). Emotions and their frequencies manifest as a matrix bubble chart, with the bubble’s size and shade intensifying with an increasing number of participants echoing a particular sentiment. Hovering over a bubble unveils a pop-up window, showcasing the participant ID and their detailed feedback, complemented with emotion labels extracted from the stage-specific emotional survey data (Figure 8).

Figure 8. By hovering over the matrix bubble chart, designers can determine how participants rated their experiences at each stage using emotional statements.

Bubble charts were chosen for their ability to distill complex volume data into an easy-to-digest visual format (Heer & Bostock, 2010). Although exact quantitative comparisons are inherently harder to make using areas (Cleveland & McGill, 1986), the bubble chart conveys volume and variance well, a decision supported by both empirical research and established visualization guidelines (Lam et al., 2011). As described above, hovering over a bubble brings up a pop-up that reveals the participant’s ID and their feedback (Figure 8). This interactive feature, grounded in user-centric design principles, provides straightforward access to granular data without overcomplicating the visualization (Van Wijk, 2013).
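To make the encoding concrete, the following sketch counts ratings per stage and emotion level and derives a bubble radius and shade from each count. It is an assumption-laden illustration rather than the prototype’s logic: the function name `bubble_matrix`, the stage label, the square-root scaling (which makes bubble area, not radius, proportional to the count), and the opacity rule are hypothetical choices.

```python
import math
from collections import Counter


def bubble_matrix(ratings, max_radius=30.0):
    """Aggregate emotion ratings into bubble sizes and shades.

    ratings: iterable of (participant_id, stage, emotion_level) tuples.
    Returns a dict keyed by (stage, emotion_level).
    """
    counts = Counter((stage, level) for _, stage, level in ratings)
    largest = max(counts.values())
    return {
        cell: {
            "count": n,
            # Square-root scaling keeps bubble AREA proportional to the count.
            "radius": max_radius * math.sqrt(n / largest),
            # Darker shade for cells that more participants selected.
            "opacity": n / largest,
        }
        for cell, n in counts.items()
    }


# Hypothetical ratings for a single stage.
ratings = [
    ("U1", "Reception", "bad"),
    ("U2", "Reception", "bad"),
    ("U3", "Reception", "bad"),
    ("U4", "Reception", "bad"),
    ("U5", "Reception", "good"),
]
bubbles = bubble_matrix(ratings)
print(bubbles[("Reception", "good")])
```

Scaling the radius directly by the count would exaggerate differences, since perceived size follows area; this is one common way to mitigate the area-comparison difficulty noted above.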

Method

Participants

For the second study phase, we recruited designers who had worked in or were currently working in UX-related professions. The inclusion criteria were being over the age of 20 and having experience using a journey map as a user research tool. A total of 11 interviewees (1 male, 10 female) were recruited. Their ages ranged from 25 to 31 years (M = 28). All interviews were documented and transcribed after obtaining participants’ consent. After the interview, each participant received a 10-dollar cash incentive.

Procedure

The interactive journey map was developed as a prototype using Adobe XD, drawing upon the comprehensive conversational data garnered from the first study phase. Each graph’s representation was proportionally constructed in line with the genuine responses received from participants. For example, the bubble dimensions in the Emotions segment precisely depict the volume of participants resonating with a particular emotion during a specific stage. Likewise, the word cloud in the Action section has been devised utilizing genuine dialogue data accumulated from the study’s first batch of participants.

Prior to the study, participants completed a preliminary questionnaire collecting basic demographic details, including age, gender, and their familiarity with drawing a journey map. Participants were given explanations of the experimental procedures and were informed of their right to discontinue the experiment at any time. This was followed by individual, semi-structured qualitative interviews with UX professionals experienced in both using and creating journey maps. The interviews were conducted remotely using platforms such as Zoom and Google Meet. Participants interacted with the prototype on their PCs while sharing their screens with the investigator. As they navigated through the interactive map, they were asked to think aloud, articulating their thoughts, feelings, and feedback spontaneously. This provided a direct and transparent account of their engagement with the tool. After completing the assigned tasks, participants shared their reflections through an interview and then gave their feedback on the tool’s usability via a questionnaire.

Participants were tasked with navigating the interactive map’s main page, engaging directly with three distinct sections: Representative Users, Touchpoint, and Action Keywords.

- Representative users: Participants were instructed to examine three distinct categories of representative users and juxtapose their characteristics with the comprehensive data showcased on the main page.

- Touchpoint: Participants were asked to delve into the quantitative bar graph in the Touchpoints section. They were instructed to hover over the bar graph to unveil demographic details of the encapsulated users, such as their gender and age. Moreover, they were prompted to select a user to transition to that specific user’s personal journey map.

- Action Keywords: Participants were directed to find the keyword “Map,” hover over it, and review the list of interview scripts containing this keyword. In addition, they were guided to identify the emotional tone of a statement by observing the color of the user icon (with red indicating negative feedback and green indicating positive feedback).

Measures

We evaluated the interactive visualization map for design research using both quantitative and qualitative methods. For the quantitative assessment, we used the positive version of the System Usability Scale (SUS; Sauro & Lewis, 2011), an established instrument for measuring perceived usability. The SUS questionnaire comprises 10 items rated on a five-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree).
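For readers unfamiliar with SUS scoring, the sketch below shows how a single respondent’s answers are converted to a 0-100 score under the all-positive wording, where every item contributes its response minus one and the sum is multiplied by 2.5. The function name `positive_sus_score` and the sample responses are hypothetical and do not reproduce any participant’s data.

```python
def positive_sus_score(responses):
    """Convert ten 1-5 responses on the all-positive SUS to a 0-100 score.

    Because every item is positively worded, each item contributes
    (response - 1); the sum (0-40) is multiplied by 2.5.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("Expected ten responses, each between 1 and 5.")
    return sum(r - 1 for r in responses) * 2.5


# Hypothetical respondent.
sample = [4, 5, 4, 4, 3, 4, 5, 4, 4, 3]
print(positive_sus_score(sample))
```

In the standard SUS, which alternates positive and negative wording, even-numbered items are instead scored as five minus the response.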

After the survey, participants were interviewed for approximately 30 minutes to explore their overall user experience in more depth. To analyze their open-ended responses systematically, we adopted a bottom-up thematic analysis: we first coded the interview transcripts to identify recurrent patterns, from which salient themes then emerged. The qualitative insights complemented the data from the quantitative SUS survey and highlighted finer aspects of the participants’ interactions with the tool that might have been missed or understated in the structured questionnaire. Combining both methods gave us a comprehensive view of the user experience with the interactive visualization map.

Table 1. Sample questions aimed at evaluating user experience and expert assessment of JourneyBot interactions and visualization.

| Interview questions for UX-professionals |

Results

SUS Score

The user testing results for the interactive map yielded an average SUS score of M = 87.5 (SD = 8.6). According to the framework provided by Bangor et al. (2009), a score above 85 reflects excellent usability. This suggests that the interactive visualization map has strong potential as a design research tool. Although JourneyBot’s autonomous interview and visualization features were seen as usable, a system that interactively integrates individual research outcomes into a unified view was deemed even more valuable.

The feedback from study participants underscored two salient features: the tool’s intuitive design and its immediate applicability. The majority of participants acknowledged the ease with which they could familiarize themselves with the tool. Through the questionnaire, many expressed a keen interest in weaving this tool seamlessly into their routine design research practices. From a design perspective, the commendable SUS score testifies to JourneyBot’s prowess. It offers a streamlined, user-centric interface that adeptly captures and represents user data. By facilitating remote and concurrent data gathering, JourneyBot holds promise in streamlining the discover and define phases of the design methodology. Consequently, JourneyBot emerges as not just a tool for effectively mapping user journeys, but also as a potential accelerator in the design process.

Interview Findings

The following implications were derived from interviews with participants in the second study phase.

Data Collection Process is Automated, Streamlining and Simplifying Research Efforts

The interactive journey map enhances JourneyBot’s range and efficacy as a design research tool by analyzing and illustrating research data gathered from several user testing sessions. Compared to traditional research methods, this tool offers a time- and cost-efficient advantage by automating interview procedures and subsequent analysis. Numerous participants highlighted the tool’s potential to reduce research duration, noting that qualitative interview analysis typically demands significant time. As participant U3 articulated, “Typically, the interview analysis (coding) took much time. This tool makes the process automated, so it is very convenient.”

In real-world settings, research methodologies demanding substantial resource commitment from designers are challenging to deploy (Goodwin, 2011). However, the majority of participants in this study anticipate that JourneyBot will prove highly beneficial in practical applications.

It is convenient and shortens research time. I guess it cuts the time to less than 1/10 compared to the existing research method. I will use it for sure. (U2)

Since it analyzes natural language and presents it visually, data can be processed more efficiently. Originally, to create a single journey map, I had to extensively analyze research data and use many post-its. However, with this (JourneyBot), the process is reduced in length and shortened in time. It reduces the energy (in doing the research), which is its biggest advantage. (U6)

It has a great advantage of being able to explore such a big amount of qualitative data quickly. It would take a month for designers to organize the data as an interactive map interface. It is good that it reduces all that. (U9)

The Interpretation of User Data Remains Consistent and Unbiased as It Bypasses Designer Subjectivity

One of JourneyBot’s most notable strengths is its ability to analyze data without the influence of designer subjectivity in both data collection and interpretation. Many participants concurred that results are deemed more trustworthy when raw data is displayed alongside a collated view. Therefore, data interpretation remains undistorted, and the dimensions of keywords or emotional bubbles are visualized based on their frequency.

Typically, journey maps are subjective. Whether created by me or someone else, I often find them hard to trust. However, this tool is quantitative and systematic. I can trust it because it visualizes the data precisely as it appears. (U7)

Conventional journey maps include the subjectivity of designers; hence, their reliability is often in question. The results of interview analysis can vary based on who conducts them. In contrast, this tool is highly reliable since it visualizes emotion charts based on numerical values and displays the actual interview data without alteration. (U2)

You can see research data that is meticulously analyzed and very detailed. Typically, when conducting research, you don’t consolidate individual journey maps into a holistic one through detailed and precise analysis. This tool excels in that it offers in-depth details and more dependable data. It can be very objective and will be a useful resource for collaboration. (U9)

The interpretation criteria for interviews can differ based on a designer’s personal biases. However, many participants found JourneyBot’s consistent analysis beneficial. One participant remarked, “Its foundation in objective data means there is little room for subjective influence. Different designers would arrive at the same conclusions using this map, which makes it trustworthy” (U8). Another participant added, “Designers might sometimes overlook details they think are not important, and their interpretations can vary based on their perspectives. In contrast, this tool provides an objective and highly accurate analysis” (U10).

Visual Data Clarity Offers Invaluable Insights for Designers

A prevalent sentiment among participants was that the tool, even when managing copious amounts of data, such as touchpoints, action keywords, and emotional values, remained “intuitively understandable” (U11) due to its data visualization features. One participant remarked, “The user’s behavior is mapped as keywords, so it is clear to find their pain point, and the process of making a solution is easier (than the existing method)” (U6). The tool’s bar graphs, word clouds, and matrix bubble charts resonated as instinctive to many participants. Another participant pointed out the convenience of having a word cloud visualization: “Because keywords organize the interview contents, I can recognize what participants said at a glance” (U4).

I think it is good that I can see the overall distribution map. Immediately, I can see where the users’ emotions are crowded, so it is easy to come up with improvements. (U11)

Numerous participants noted the value of visualizing users’ emotional shifts, with the size and spread of bubbles at each journey phase helping designers pinpoint critical pain points and extract insights. Additionally, participants appreciated the capability to observe the emotion distribution throughout the journey, emphasizing its utility.

Because I can see how a user thinks and feels (with emotional statements), I gained a new perspective. (U3)

(For emotional states) A big circle means there are many users. I think it is good to be able to refer to the interview of not only one person but as the collective group of people. (U10)

Through action keywords, I can quickly check in detail how people feel on specific actions or touchpoints. (U9)

It is good that I can see the entire data at once. When planning a service, popularity needs to be considered rather than personalization (at first). Showing the popularity of data is one of its biggest pros. (U6)

JourneyBot visually underscores emotional shifts throughout the users’ journeys by varying the sizes of the bubbles. Some participants pointed out that this approach helps derive unique insights, especially in instances where scores are not presented as an average value. One respondent highlighted, “I am curious about even the smallest bubbles on the chart. The unique responses help me to consider what I have overlooked” (U5). Another participant suggested a potential efficiency in future research applications: using JourneyBot could streamline the process by focusing on specific target users for follow-up interviews rather than interviewing all participants who interacted with the chatbot. They remarked, “If there is a unique answer (not by everyone), I can focus on interviewing just those specific individuals. Because all the information is provided on the map, I can find which person to interview, which is really nice” (U2).

Additionally, participants felt that the results shown on the map did not feel artificial but actually felt like the work of a designer, which they viewed as a significant benefit. One participant remarked, “I think it is good because the visualization results neatly organize quantitative data” (U7). Another commented, “The chatbot creates results by automation, but the results seem like human’s work, which is quite impressive” (U6).

Mouse-Over Interaction Boosts Contextual Understanding and Usability

The feature where actual user quotes pop up upon hovering the mouse over action keywords and emotion scores was highly praised as a significant benefit of the interactive journey map. Hovering over a specific keyword allows for the display of all sentences containing that term, granting immediate context to the user’s descriptions and associations with that keyword.

It is quite persuasive that the cause of users’ emotions is objective: I can see the qualitative reasons right away by hovering the mouse. (U4)

If it shows the content of the conversation in the line, I would just skip it, thinking they felt bad without deeply thinking about it. However, since emotional statements and action descriptions are extracted separately, it really touches my heart. I think I can create a more emotional service that empathizes with emotions. (U6)

Mouse-over interaction is nice in that I can quickly find what I want without having to read all the contents of the script. It is good that it is efficient and that it cuts the sentences for easy quoting. (U1)

I’m not only looking at the distribution of emotions in bubbles but also seeing ‘why’ the user was in such emotion with the mouse-over interaction. (U10)

Because these interactions are never possible on paper or flat surfaces, the system is a lot better. I guess this map will be used well by many research institutes. (U8)

Customizability Should Align with the Designer’s Intention

One of the main critiques from participants about the interactive journey map was its limited customization capabilities. They suggested the inclusion of more flexible features, like adjusting the stages in the journey, refining filtering options, and setting user groups. As one participant remarked, “If all the factors in touchpoints are used as the elements of the filter, it will be possible to create a more delicate group” (U5).

Several participants highlighted the need for enhanced communication tools within the platform. For example, one participant saw value in adding notes and annotations: “It will be more useful if I can add notes and annotations to derive insights or to communicate” (U3). The ability to export results in commonly used formats was also brought up. As one participant put it, “It would be nice if I can download the results as a PDF, CSV, or excel file. I think the map is good enough to be a report” (U1), indicating the utility of sharing these findings more broadly.

A few participants suggested that the tool could benefit from additional features to create more nuanced user profiles, such as the development of personas based on user behavior patterns within specific groups. One participant remarked, “It would be nice if the representative user changes depending on the filter. It would be great if a filter could create and provide a persona according to the patterns. Then, I can better understand the behavioral patterns of predictable users” (U9).

Discussion

This study reinforces the conclusions of prior research suggesting that chatbots can reliably capture high-quality interview data (Ahn et al., 2020; Kim et al., 2019; Kim et al., 2020; Narain et al., 2020; Tallyn et al., 2018). In traditional research methods, designers’ subjectivity can skew qualitative data analysis. In contrast, JourneyBot preserves the original conversation content without arbitrary editing, which ensures an authentic representation of user responses.

The interactive journey map has been particularly lauded for its capacity to objectively collate diverse user responses while still offering a deep dive into each individual’s raw data. Another significant finding is that when interviewees interact with non-human entities, they are often more forthright. This is because they do not feel the need to factor in the interviewer’s emotional cues, which could influence their responses in human-to-human interactions. Our findings echo prior studies indicating that interactions with non-human agents alleviate the psychological burden linked with response time (Lucas et al., 2014; Ravichander & Black, 2018; Xiao, Zhou, Chen et al., 2020). This dynamic allows interviewees ample time for contemplation, thereby enhancing the depth and authenticity of their answers.

Participants expressed a consensus on the practical utility of the interactive journey map for designers. One notable feature is the filter function, which allows designers to create and juxtapose groups based on distinct characteristics, facilitating deeper analysis. The map’s capability to trace emotional trajectories augments designers’ insights. Notably, it allows for the identification of not only typical users based on frequency and average scores but also outliers with extreme emotional responses. This addresses a limitation found in conventional journey maps, which often produce results too constrained to meaningfully enhance the user journey (Thompson, 2016). Another commendable feature is the mouse-hover interaction that reveals individual interview results linked to specific action keywords and emotional states. This positions JourneyBot as a valuable user research tool, catering to designers eager to delve into raw data and frame it within their analytical paradigms for more qualitative approaches.

While the study provides insightful contributions, several limitations should be recognized. As natural language processing has grown increasingly sophisticated, deep learning-based chatbots have paved the way for novel human-computer interactions in daily life (Adiwardana et al., 2020). Yet, JourneyBot relies on predefined conversational pathways. The primary aim of our initial study phase was to craft an experimental chatbot emulating the role of a human researcher. As such, we adopted rule-based queries and responses optimized for journey map creation. A notable limitation of this approach was the segmentation of the user experience into predefined stages and the linear sequence of questions. While this method streamlined the development of JourneyBot by allowing for block reuse, it limits the chatbot’s scalability. Under this framework, adapting the chatbot for alternative services would require entirely new sets of conversational blocks. Future work will aim to integrate advanced language models for system responses, broadening JourneyBot’s applicability across diverse sectors.

As participants navigated through the journey, they initially provided extensive and detailed descriptions, but as they progressed, the responses shortened. A participant highlighted that the lack of feedback and non-verbal cues made typing out detailed explanations cumbersome and less engaging. This observation echoes prior research emphasizing the importance of interaction in sustaining respondent engagement. Hence, when leveraging chatbots for interviews, incorporating features like response feedback (Conrad et al., 2005) and probing prompts (Oudejans & Christian, 2010; Xiao, Zhou, Chen et al., 2020) could enhance the depth and activity level of responses.

Lastly, another challenge in the early stages of JourneyBot’s deployment is the potential cold start issue. For the second phase of our study, we benefited from the conversational data of 24 participants collected during the first phase. Without a diverse pre-existing dataset, extracting insights from JourneyBot could prove more challenging than from traditional journey map visualizations. Furthermore, we need to consider broadening the research demographic to include older generations. Given their limited access to technology, this demographic might find it more challenging to use chatbots or interactive interfaces. Additionally, focusing on user groups more inclined to visit different types of clinics could yield more valid and pragmatic research findings than merely recruiting participants from the broader population.

Conclusion

This study aimed to capture user experiences from clinic visits through text-based conversations with JourneyBot and to develop an interactive visualization of these user journeys tailored for designers. The findings indicate that JourneyBot is a cost-effective and efficient research tool, facilitating engagement with a vast user base without the restrictions of time and location. Notably, participants found their dialogue with JourneyBot, a non-human agent, to be a trustworthy method for interview participation. Those with design backgrounds particularly commended the interactive journey map feature, viewing it as a practical tool with real-world application potential. Its integrated visualization offers a holistic view of user data while preserving access to granular details for reasoning and substantiating findings. Features such as word clouds, bar graphs, and matrix bubble charts were positively received for their capacity to spotlight user challenges and needs.

The choice to deploy JourneyBot initially in the medical domain was influenced by the global impact of the coronavirus pandemic. The overarching ambition was to arm designers with the tools necessary to craft solutions that streamline medical treatment and prescription processes. Looking ahead, our future research endeavors will assess JourneyBot’s versatility across diverse user experiences, including gallery visits, sports events, outdoor activities, and other public services catering to large user groups.

Acknowledgments

This work was supported by the Yonsei University Research Grant of 2022.

References

- Abras, C., Maloney-Krichmar, D., & Preece, J. (2004). User-centered design. In W. Bainbridge (Ed.), Encyclopedia of human-computer interaction (pp. 445-456). Sage.

- Adiwardana, D., Luong, M. T., So, D. R., Hall, J., Fiedel, N., Thoppilan, R., Yang, Z., Kulshreshtha, A., Nemade, G., Lu, Y., & Le, Q. V. (2020). Towards a human-like open-domain chatbot. https://doi.org/10.48550/arXiv.2001.09977

- Ahn, Y., Zhang, Y., Park, Y., & Lee, J. (2020). A chatbot solution to chat app problems: Envisioning a chatbot counseling system for teenage victims of online sexual exploitation. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-7). ACM. https://doi.org/10.1145/3334480.3383070

- Bangor, A., Kortum, P., & Miller, J. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114-123.

- Beyer, H., & Holtzblatt, K. (1999). Contextual design. Interactions, 6(1), 32-42. https://doi.org/10.1145/291224.291229

- Bicheno, J., & Holweg, M. (2000). The lean toolbox. PICSIE Books.

- Chapman, C. N., & Milham, R. P. (2006). The Personas’ new clothes: Methodological and practical arguments against a popular method. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 50(5), 634-636. https://doi.org/10.1177/154193120605000503

- Cleveland, W. S., & McGill, R. (1986). An experiment in graphical perception. International Journal of Man-Machine Studies, 25(5), 491-500. https://doi.org/10.1016/S0020-7373(86)80019-0

- Conrad, F. G., Couper, M. P., Tourangeau, R., Galesic, M., & Yan, T. (2005). Interactive feedback can improve the quality of responses in web surveys. In Proceedings of the 60th conference of the American Association for Public Opinion Research (pp. 3835-3840). AAPOR.

- Cooper, A., Reimann, R., Cronin, D., & Noessel, C. (2014). About face: The essentials of interaction design. John Wiley & Sons.

- Design Council. (2015). Design methods for developing services. https://www.designcouncil.org.uk/fileadmin/uploads/dc/Documents/DesignCouncil_Design%2520methods%2520for%2520developing%2520services.pdf

- Dove, L., Reinach, S., & Kwan, I. (2016). Lightweight journey mapping: The integration of marketing and user experience through customer driven narratives. In Extended abstracts of the SIGCHI conference on human factors in computing systems (pp. 880-888). ACM. https://doi.org/10.1145/2851581.2851608

- Fern, E. F. (2001). Advanced focus group research. Sage.

- Garrett, J. J. (2010). The elements of user experience: User-centered design for the web and beyond. New Riders.

- Gibbons, S. (2019). User need statements: The ‘define’ stage in design thinking. Nielsen Norman Group. https://www.nngroup.com/articles/user-need-statements/

- Goodman, E., Kuniavsky, M., & Moed, A. (2013). Observing the user experience: A practitioner’s guide to user research. IEEE Transactions on Professional Communication, 56(3), 260-261. https://doi.org/10.1109/TPC.2013.2274110

- Goodwin, K. (2011). Designing for the digital age: How to create human-centered products and services. John Wiley & Sons.

- Gould, J. D., Boies, S. J., & Lewis, C. (1991). Making usable, useful, productivity-enhancing computer applications. Communications of the ACM, 34(1), 74-85. https://doi.org/10.1145/99977.99993

- Gupta, S., Jagannath, K., Aggarwal, N., Sridar, R., Wilde, S., & Chen, Y. (2019). Artificially intelligent (AI) tutors in the classroom: A need assessment study of designing chatbots to support student learning. In Proceedings of the Pacific Asia conference on information systems (Article No. 213). AIS eLibrary.

- Heer, J., & Bostock, M. (2010). Crowdsourcing graphical perception: Using mechanical turk to assess visualization design. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 203-212). ACM. https://doi.org/10.1145/1753326.1753357

- Heimerl, F., Lohmann, S., Lange, S., & Ertl, T. (2014). Word cloud explorer: Text analytics based on word clouds. In Proceedings of the 47th Hawaii international conference on system sciences (pp. 1833-1842). IEEE. https://doi.org/10.1109/HICSS.2014.231

- Keim, D., Andrienko, G., Fekete, J. D., Görg, C., Kohlhammer, J., & Melançon, G. (2008). Visual analytics: Definition, process, and challenges. In A. Kerren, J. T. Stasko, J. D. Fekete, & C. North (Eds.), Information visualization: Human-centered issues and perspectives (pp. 154-175). Springer.

- Keim, D. A., Mansmann, F., & Thomas, J. (2010). Visual analytics: How much visualization and how much analytics? ACM SIGKDD Explorations Newsletter, 11(2), 5-8. https://doi.org/10.1145/1809400.1809403

- Kim, S., Eun, J., Oh, C., Suh, B., & Lee, J. (2020). Bot in the bunch: Facilitating group chat discussion by improving efficiency and participation with a chatbot. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-13). ACM. https://doi.org/10.1145/3313831.3376785

- Kim, S., Lee, J., & Gweon, G. (2019). Comparing data from chatbot and web surveys: Effects of platform and conversational style on survey response quality. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 86). ACM. https://doi.org/10.1145/3290605.3300316

- Krüger, A. E., Kurowski, S., Pollmann, K., Fronemann, N., & Peissner, M. (2017). Needs profile: Sensitising approach for user experience research. In Proceedings of the 29th Australian conference on computer-human interaction (pp. 41-48). ACM. https://doi.org/10.1145/3152771.3152776

- Lam, H., Bertini, E., Isenberg, P., Plaisant, C., & Carpendale, S. (2011). Empirical studies in information visualization: Seven scenarios. IEEE Transactions on Visualization and Computer Graphics, 18(9), 1520-1536. https://doi.org/10.1109/TVCG.2011.279

- Lee, M., Ackermans, S., van As, N., Chang, H., Lucas, E., & IJsselsteijn, W. (2019). Caring for Vincent: A chatbot for self-compassion. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 702). ACM. https://doi.org/10.1145/3290605.3300932

- Lee, Y. C., Yamashita, N., Huang, Y., & Fu, W. (2020). “I hear you, I feel you”: Encouraging deep self-disclosure through a chatbot. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-12). ACM. https://doi.org/10.1145/3313831.3376175

- Li, J., Zhou, M. X., Yang, H., & Mark, G. (2017). Confiding in and listening to virtual agents: The effect of personality. In Proceedings of the 22nd international conference on intelligent user interfaces (pp. 275-286). ACM. https://doi.org/10.1145/3025171.3025206

- Litosseliti, L. (2003). Using focus groups in research. Bloomsbury Publishing.

- Lucas, G. M., Gratch, J., King, A., & Morency, L. P. (2014). It’s only a computer: Virtual humans increase willingness to disclose. Computers in Human Behavior, 37, 94-100. https://doi.org/10.1016/j.chb.2014.04.043

- Luger, E., & Sellen, A. (2016). “Like having a really bad PA”: The gulf between user expectation and experience of conversational agents. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 5286-5297). ACM. https://doi.org/10.1145/2858036.2858288

- Marsden, N., & Haag, M. (2016). Stereotypes and politics: Reflections on personas. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 4017-4031). ACM. https://doi.org/10.1145/2858036.2858151

- Morgan, D. L. (1996). Focus groups as qualitative research. Sage.

- Munzner, T. (2009). A nested model for visualization design and validation. IEEE Transactions on Visualization and Computer Graphics, 15(6), 921-928. https://doi.org/10.1109/TVCG.2009.111

- Narain, J., Quach, T., Davey, M., Park, H. W., Breazeal, C., & Picard, R. (2020). Promoting wellbeing with Sunny, a chatbot that facilitates positive messages within social groups. In Extended abstracts of the SIGCHI conference on human factors in computing systems (pp. 1-8). ACM. https://doi.org/10.1145/3334480.3383062

- Nyumba, T., Wilson, K., Derrick, C. J., & Mukherjee, N. (2018). The use of focus group discussion methodology: Insights from two decades of application in conservation. Methods in Ecology and Evolution, 9(1), 20-32. https://doi.org/10.1111/2041-210X.12860

- Oudejans, M., & Christian, L. M. (2010). Using interactive features to motivate and probe responses to open-ended questions. In M. Das, P. Ester, & L. Kaczmirek (Eds.), Social and behavioral research and the internet: Advances in applied methods and research strategies (pp. 304-332). Routledge.

- Pruitt, J., & Adlin, T. (2010). The persona lifecycle: Keeping people in mind throughout product design. Morgan Kaufmann.

- Ravichander, A., & Black, A. W. (2018). An empirical study of self-disclosure in spoken dialogue systems. In Proceedings of the 19th annual SIGDIAL meeting on discourse and dialogue (pp. 253-263). ACL. https://doi.org/10.18653/v1/W18-5030

- Salminen, J., Jansen, B. J., An, J., Kwak, H., & Jung, S. G. (2018). Are personas done? Evaluating their usefulness in the age of digital analytics. Persona Studies, 4(2), 47-65. https://doi.org/10.21153/psj2018vol4no2art737

- Sauro, J., & Lewis, J. R. (2011). When designing usability questionnaires, does it hurt to be positive? In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 2215-2224). ACM. https://doi.org/10.1145/1978942.1979266

- Song, Y. J. (2009). The South Korean health care system. JMA Journal, 52(3), 206-209.

- Stickdorn, M., & Schneider, J. (2011). This is service design thinking: Basics, tools, cases. John Wiley & Sons.

- Tallyn, E., Fried, H., Gianni, R., Isard, A., & Speed, C. (2018). The ethnobot: Gathering ethnographies in the age of IoT. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 64). ACM. https://doi.org/10.1145/3173574.3174178

- Thomas, J. J., & Cook, K. A. (2006). A visual analytics agenda. IEEE Computer Graphics and Applications, 26(1), 10-13. https://doi.org/10.1109/MCG.2006.5

- Thompson, M. (2016). Common pitfalls in customer journey maps. Interactions, 24(1), 71-73. https://doi.org/10.1145/3001753

- Tschimmel, K. (2012). Design thinking as an effective toolkit for innovation. In Proceedings of the 23rd ISPIM conference on action for innovation (pp. 1-20). The International Society for Professional Innovation Management. https://doi.org/10.13140/2.1.2570.3361

- Vaccaro, K., Agarwalla, T., Shivakumar, S., & Kumar, R. (2018). Designing the future of personal fashion. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 627). ACM. https://doi.org/10.1145/3173574.3174201

- Van Wijk, J. J. (2013). Evaluation: A challenge for visual analytics. Computer, 46(7), 56-60. https://doi.org/10.1109/MC.2013.151

- Xiao, Z., Zhou, M. X., Chen, W., Yang, H., & Chi, C. (2020). If I hear you correctly: Building and evaluating interview chatbots with active listening skills. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-14). ACM. https://doi.org/10.1145/3313831.3376131

- Xiao, Z., Zhou, M. X., & Fu, W. T. (2019). Who should be my teammates: Using a conversational agent to understand individuals and help teaming. In Proceedings of the 24th international conference on intelligent user interfaces (pp. 437-447). ACM. https://doi.org/10.1145/3301275.3302264

- Xiao, Z., Zhou, M. X., Liao, Q. V., Mark, G., Chi, C., Chen, W., & Yang, H. (2020). Tell me about yourself: Using an AI-powered chatbot to conduct conversational surveys with open-ended questions. ACM Transactions on Computer-Human Interaction, 27(3), Article 15. https://doi.org/10.1145/3381804

- Zhou, M. X., Mark, G., Li, J., & Yang, H. (2019). Trusting virtual agents: The effect of personality. ACM Transactions on Interactive Intelligent Systems, 9(2-3), Article 10. https://doi.org/10.1145/3232077

- Zimmerman, J., Forlizzi, J., & Evenson, S. (2007). Research through design as a method for interaction design research in HCI. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 493-502). ACM. https://doi.org/10.1145/1240624.1240704