Materializing Movement—Designing for Movement-based Digital Interaction

Lise Amy Hansen* and Andrew Morrison

Institute of Design, The Oslo School of Architecture and Design, Norway

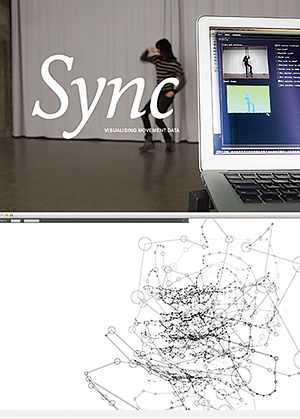

Designers today have access to full-body movement data to explore the rich, interpersonal, non-verbal communication we read, interpret, enact, and perform every day. In this paper, we describe an approach to movement as a design material, where movement is seen as embodied communication. We discuss the mix of qualities in data derived from full-body movement as it encompasses the corporeal and computational, and how to present such data. The aim of this exploration is to tease out the rich communicative potential of full-body movement for digital interactions by enabling an explorative engagement with movement data. People increasingly move with, for and through technology. We argue that designers need to be aware of the nature of movement data and how such data may be applied, addressed, and influenced. Because we are concerned with the meaning-making design process of movement-based interactions, the main analytical approach taken in this study is communication and social semiotics. We suggest that for interaction design, movement may be parsed according to Velocity, Position, Repetition and Frequency. We further describe the development of a tool, Sync, for generating dynamic movement data visualizations, and reflect on abstracting and visualizing movement data in order to inform and enable design processes of movement-based digital interactions.

Keywords – Communication, Design Materials, Full-body Movement, Interaction Design, Social Semiotics, Visualization.

Relevance to Design Practice – A Movement Schema supports discussion of how movement dynamics may be conceptualized as a design material. Sync gives interaction designers access to dynamic visuals of full-body movement data.

Citation: Hansen, L. A., & Morrison, A. (2014). Materializing movement—Designing for movement-based digital interaction. International Journal of Design, 8(1), 29-42.

Received June 8, 2012; Accepted July 5, 2013; Published April 30, 2014.

Copyright: © 2014 Hansen and Morrison. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: liseamy.hansen@aho.no

Lise Amy Hansen is a PhD Research Fellow at The Oslo School of Architecture and Design (AHO) and a designer. She holds an MA in Communication Art & Design, Royal College of Art, London and a BA (Hons) from Central Saint Martins (CSM), and has been a lecturer at CSM and AHO. She has run a design agency, Witt Hansen Ltd, working with arts and culture as well as urban regeneration in London. Her research revolves around the role of movement as a material in digital interactions and how we may explore movement and an understanding of movement through design research.

Andrew Morrison is the Director of the Centre for Design Research at The Oslo School of Architecture and Design (AHO). He has a BA in English and Law, BA(Hons) and MA in English, MSc in Applied Linguistics and PhD in Media Studies. He has published widely in these areas and in the past decade specifically on interdisciplinary Design. Andrew has also contributed to AHO’s doctoral school and been its Co-ordinator. He is on the board of several journals and reviews widely. For projects and publications, see: www.designresearch.no/people/andrew-morrison

Introduction

A Design Material View on Embodied Dynamic Movement

In everyday life we use our bodies to non-verbally navigate, negotiate, and communicate. We alter our posture, the dynamics and scope with which we move our limbs and handle our weight according to the spaces in which we find ourselves, the people we are with, and what we hope to express. Goffman (1959) describes these choices of glances, gestures, and positionings as a performance. If we see the way we present ourselves as a choice and an act, then we may understand that this communication can be read or sensed by technology as well as by other people.

Today, people move with and through an increasing amount of technology, whether the technology is in our pockets or pervasively available through Wi-Fi. This influences what we do, where we move, and in particular how we move. This tracking and influencing of movement reveals the importance of understanding movement as it is abstracted, applied, and influenced through interaction design. An analysis of how we move can give us an understanding of what movement is. Because designers now increasingly facilitate, build, and extend communication with movement data, shifting the focus to how movement comes to be may help us better understand how movement might be influenced.

Designers today have access to movement data through readily available sensors, such as the iPhone or the Kinect. In addition, the open source community makes software increasingly accessible through, for instance, openFrameworks and Processing. However, few resources exist in interaction design to meaningfully engage with full-body movement data. This leaves open the potential to draw knowledge and innovation from our everyday movement practice, including full-body actions. There is also a need for technology and interaction design to envision the whole body beyond fingers swiping screens (Victor, n.d.).

This paper explores how we may approach movement for interaction design, and in particular how we may facilitate explorations of movement data for digital interactions. If we are to understand how we may build on movement data and how to design with such data, we need to know the properties and particularities of these data as a design material. As Hollan and Stornetta (1992) wrote, our needs to communicate do not depend on any media, yet how we communicate and the mechanisms with which we communicate are inextricably connected to particular media. Kirsch (2013) argued further that by exploring how we think through things, designs may draw upon our embodied, distributed, and situated cognition, our ‘physical-digital coordination’ (p. 28). In other words, communication is not only media specific, but body-media specific.

Movement data is distinctive in that it encompasses both computational and corporeal qualities. These qualities appear in the data as it is abstracted and in the visualization as movement is re-presented (i.e., both as sign and as signification). Below, we discuss concerns regarding this relational mix of the corporeal and the computational in movement data. To do this we draw on various approaches to the study of movement, such as dance and choreography, non-verbal communication, and modeling and animation of movement data. These we draw together in a schema for identifying semantic properties of movement dynamics for interaction design, informed by social semiotics. Through the schema we propose a parsing of movement according to a set of core categories of Velocity, Position, Repetition and Frequency. We further describe the design process of a digital tool called Sync that dynamically visualizes movement data. We then reflect on Sync and how it may address the concerns of movement as communication and inform interaction design.

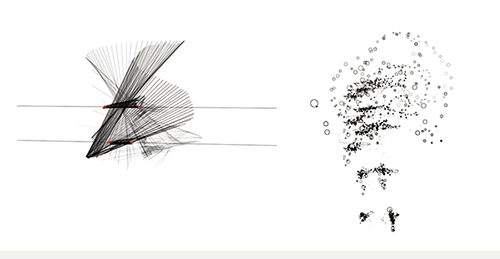

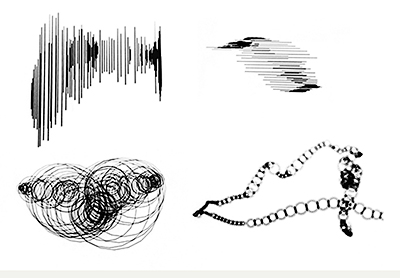

Figure 1. The tool Sync enables a visual reading, identification, and interpretation of data drawn from full-body movement. Here the two visuals refer to the same arm-waving movement, with each visual foregrounding different aspects of the movement as well as different aspects of the data handling.

The Body as a Sign: Embodiment and Communication

When we refer to everyday movement communication, we think perhaps first of gestures in conversation, typically “arm and hand movements” (Knapp & Hall, 2006, p. 225). Kendon defined gesture as “visible action as utterance” (2004, p. 7) and classified a communicative gestural phrase into three parts: preparation, nucleus, and retraction/reposition. These parts have been further expanded to include: size (distance between the beginning and end of a stroke), gesture timing (length of time between the beginning and end of a stroke), point of articulation (main joint involved in the gestural movement), locus (body space involved by the gesture), and x, y, and z axis (location of gesture within an imposed imaginary spatial plane) (Kendon, 2004).1
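Two of these parameters, size and gesture timing, are defined directly in terms of the beginning and end of a stroke, so they can be read off sampled tracking data. The sketch below is illustrative only: it assumes a stroke has already been segmented and that one joint (here a hypothetical `wrist` path) is sampled as timestamped x, y, z positions; the function names are ours, not Kendon's.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) of one tracked joint, e.g. in metres

def stroke_size(path: List[Point]) -> float:
    """Size: straight-line distance between the first and last sample of a stroke."""
    return math.dist(path[0], path[-1])

def stroke_timing(timestamps: List[float]) -> float:
    """Gesture timing: elapsed time between the first and last sample of a stroke."""
    return timestamps[-1] - timestamps[0]

# Usage: a hypothetical wrist stroke sampled three times over half a second.
wrist = [(0.0, 1.0, 2.0), (0.3, 1.4, 2.0), (0.6, 1.0, 2.0)]
times = [0.0, 0.25, 0.5]
size = stroke_size(wrist)
duration = stroke_timing(times)
```

Note that size, as defined, ignores the arc travelled between start and end; the wrist above moves up and back down, yet only its net displacement counts.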

However, these linguistically centered classifications of gesture do not inform us how movement may come to be seen as meaningful or as gesture. “That is, the observer notes the occurrence of a gesture and then records its type. This kind of recording fails to capture the parameters of movement that makes one particular gesture appear over another, as well as what makes the gesture appear at all” (Chi, Costa, Zhao, & Badler, 2000, p. 173). Similarly, a music score does not contain information as to the mechanics of performing, beyond specifying the music to be realized (Puri & Hart-Johnson, 1995, p. 162). When we approach movement as a design material—as something that may be shaped through communicating technology and digital systems—we are interested in how movements become meaningful through their dynamic form.2 This shift from an analysis of what movements are to an investigation of how they become meaningful is similar to that of going from analyzing designs to investigating designing.3

What then are the qualities of movement? For Stern (2010), “we naturally experience people in terms of their vitality. We intuitively evaluate their emotions, states of mind, what they are thinking and what they really mean, their authenticity, what they are likely to do next, as well as their health and illness on the basis of the vitality expressed in their almost constant movements” (p. 3). This vitality is difficult to classify; as Sheets-Johnstone (2011) noted, “There is nothing rock solid in movement […] The observation is significant in itself and significant academically; simply put, it is easier to study objects” (p. 124). She also critiques the languaging of movement in that “the challenge derives in part from an object-tethered English language that easily misses or falls short of the temporal, spatial, and energetic qualitative dynamics of movement” (Sheets-Johnstone, 1999a, p. 268). Streeck, Goodwin, and LeBaron (2011) also point to the analytical orientation of picturing people “doing things with things” (p. 6). Design has also been described as concerned with “thing-ing” (Koskinen, 2011, p. 125). These references point to challenges in understanding the vital dynamics of movement that are central to human communication. These various dynamic dimensions of movement pose conceptual challenges for designers, and especially interaction designers, in working with movement as a material.

In addition to these aspects, the body is complex in that it communicates multimodally, such as through glances, gestures, position, and utterances (Goffman, 1959). Movement is distinctive as a sign in that it requires a body, that is, physical embodiment, and it follows that the sign will also carry references to age, gender, race, etc. (Franko, 1995). Also, “space is not an inert backdrop for movement, but is integral to it, often providing fundamental orientation and meaning” (Reed, 1998, p. 523). Lastly, in terms of interpersonal communication, our interpretation of others’ actions is uniquely informed by knowledge of our own movements (Wachsmuth, Lenzen, & Knoblich, 2008).

In other words, the body is both a movement sign and signifier: in approaching movement as communication, we negotiate the embodied and the rhetorical, the event and representation (Foster, 1995). However, as Csordas (2002) wrote in exploring the experienced body, as opposed to the observed body, these approaches, the semiotic and the somatic, are not mutually exclusive, but exist in concert. Noland (2009) navigated these concerns in developing an account of agency in which “gestures as learned techniques of the body are the means by which cultural conditioning is simultaneously embodied and put to the test” (p. 2). In anthropology, Farnell and Varela (2008) argued that visual studies should move from seeing the body as an object to seeing dynamically embodied persons in action. In a similar turn, Williams (2004) proposed “semasiology” as a semiotic approach to the embodied, signifying, moving person.

This rich and complex meaning-making in human-human interaction provides us with resources to design interactive systems that draw upon, facilitate, or create such communication. Sensing technology now extends beyond the push of a key, the tap of a button, or the swipe of a screen to include marker-less sensors, ranging from the infrared sensors that operate automatic doors to figure recognition in the Kinect. Designers are thereby in a position to abstract movement and to communicate it as data and digital mediation. As Kendon (1995) put it, “if signs are to be transmitted, they must be seen” (p. 116). Designers can then create structures and systems from, with, and for such communication.

This is complex, as Farnell (1999a) pointed out: “the meanings of perceivable actions involve complex intersections of personal and cultural values, beliefs, and intensions, as well as numerous features of social organisation” (p. 148). Schiller (2006) proposed that “if we accept this entanglement between human-created techniques and movement as a dynamic structural and relational event, then we replace discussions of the body and space or body and machine with the fluid surprises of relational dynamics” (p. 109). When we as designers influence these relational dynamics, we first need to untangle these actions and events to appreciate what we are working with. Specifically, this is a matter of understanding how to abstract movement for interaction design.

Movement Studies: Notation and Abstraction

Notational systems for movement come primarily from dance, with the earliest devised as early as the 15th century (Guest, 1989). The most comprehensive system for notating contemporary choreographies, independent of styles or schools of dance, is Labanotation, developed by Rudolf Laban (Guest, 2005). This notation system was further developed into Laban Movement Analysis (LMA), which focuses on the analysis of movement qualities (Newlove & Dalby, 2009).4

When movement is abstracted, selected visual qualities are translated into a system of signification. Two key movement notations outside of dance are Hall’s (1996) “proxemics” and Birdwhistell’s “kinesics.” Proxemics draws attention to man’s use of space as a specialized elaboration of culture (Hall, 1996, p. 1); here the body is understood as a location in space. At a more detailed level, and drawing on structuralist linguistics, Birdwhistell (1971) devised kinesics as a method and notational system for analyzing everyday movements in micro-social context.5 However, as Farnell (1999b) wrote: “the stretch of functional-anatomical terminology explains nothing about the sociolinguistic or semantic properties of the action involved” (p. 360). In addition, Helen Thomas (2003) pointed out that not only do we have and are bodies, but these bodies are rarely static, although most theories of the body seem to treat them as such (p. 63). This lack of focus on movement dynamics led Sklar (2008) to call for “qualities of vitality” in descriptions of symbolic action (p. 103).
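Treating the body as a location in space can be illustrated with tracking data: the distance between two tracked bodies is reduced to one of Hall’s named distance bands. The sketch below is a hypothetical illustration, not part of Sync. It assumes each body is represented by a single floor-plane point (for example a torso joint projected onto x and z), and it uses approximate metric conversions of Hall’s intimate, personal, and social boundaries (about 1.5, 4, and 12 feet). Hall described these bands for a particular cultural group, so the limits are best treated as adjustable.

```python
import math
from typing import List, Tuple

FloorPoint = Tuple[float, float]  # hypothetical: a torso joint projected onto the floor, in metres

# Approximate metric conversions of Hall's band boundaries (1.5 ft, 4 ft, 12 ft).
# Hall presented these as culturally specific, so they are adjustable assumptions.
ZONE_LIMITS_M: List[Tuple[float, str]] = [
    (0.46, "intimate"),
    (1.22, "personal"),
    (3.66, "social"),
]

def proxemic_zone(a: FloorPoint, b: FloorPoint) -> str:
    """Name the distance band that the separation between two bodies falls into."""
    distance = math.dist(a, b)
    for limit, zone in ZONE_LIMITS_M:
        if distance < limit:
            return zone
    return "public"

# Usage: two people standing two metres apart.
zone = proxemic_zone((0.0, 0.0), (2.0, 0.0))
```

The abstraction keeps only relative location and discards everything about how either body moves, which illustrates the limitation raised above regarding static accounts of the body.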

Attention to the level of structural detail of the body’s movement may indeed prevent rather than enable a reading of abstracted movement. For interaction design this is important, as the computational modeling of movement is increasingly precise, without necessarily informing the practice of designing for movement in regards to the role and agency of movement in interaction.6 This also extends to how movement is visualized, for “whilst there has been substantial advances in human motion reconstruction, the visual understanding of human behavior and action remains immature” (Moeslund, Hilton, & Krüger, 2006, p. 116).

As these studies of movement, notation, and communication indicate, there is a wealth of possible detail and relations, and a great complexity in movement as it dynamically plays out, from which we need to make informed choices when we abstract. However, “the danger of trying to codify, generalize, and formally model the aesthetic experience for technology design is that it may miss precisely the phenomenon that was originally of interest. In abstracting from specific embodied contexts, many of the ineffable aspects of the aesthetic experience - those escaping formal articulation - may be either overlooked or designed away” (Boehner, Sengers, & Warner, 2008, p. 12:3). Addressing similar concerns, Loke and Robertson (2013) argued for designers to include the movers’ perspective, to ensure the felt, lived experience is considered in the design of movement-based interactions. Here notation is interesting for design as it gives us the possibility to go from script to score, from “taking in” to “acting out” (Ingold, 2007, p. 12). In other words, we may use how something appeared to inform how future scenarios may play out and be influenced. The potential for variation here is important. “The greatest value of the systems is not necessarily how precise they could be, but the possibility they have to record more than one stratum of precision” (Bastien, 2007, p. 48).7 By understanding how movement has been modeled computationally, we may be able to assess the level of detail and what kind of information is valuable for interaction design when we design movement-based interactions. This is addressed in the Sync tool, as we discuss later (see Figure 2).
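Bastien’s point about recording more than one stratum of precision can be made concrete for movement data: the same tracked path can be kept side by side at several resolutions, so that a designer can compare what each level of detail preserves. The following is a minimal sketch under our own assumptions (a 2D path for one joint, grid snapping as the abstraction, and hypothetical function names); it does not describe how Sync handles precision.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) of one tracked joint

def quantize(path: List[Point], cell: float) -> List[Point]:
    """Snap each sample to the nearest point on a grid of size `cell`, dropping consecutive duplicates."""
    coarse: List[Point] = []
    for x, y in path:
        snapped = (round(x / cell) * cell, round(y / cell) * cell)
        if not coarse or coarse[-1] != snapped:
            coarse.append(snapped)
    return coarse

def strata(path: List[Point], cells: List[float]) -> Dict[float, List[Point]]:
    """Keep the same path at several levels of precision, keyed by grid size."""
    return {cell: quantize(path, cell) for cell in cells}

# Usage: a slow drift followed by a larger movement along x.
path = [(0.0, 0.0), (0.01, 0.0), (0.02, 0.0), (0.6, 0.0), (1.0, 0.0)]
levels = strata(path, [0.001, 0.1, 1.0])
```

At the finest grid the slow drift survives as separate samples; at coarser grids it disappears and only the larger movement remains, which is one way of seeing what a given level of detail makes visible or hides.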

Figure 2. The video and depth data feed from the Kinect as well as the Sync GUI.

Digital Mappings: From Script to Score

In 1979, Badler and Smoliar discussed how human movement may be represented digitally, and based “primitive movement concepts” on Labanotation to build a machine language for representing movement (1979, p. 36). This early work was developed further in the EMOTE model, an animation system drawing further on Laban’s work on effort in movement to simulate natural and expressive movement (Chi et al., 2000).8 In computer science, work has also covered the identification and modeling of movement, such as classification for movement recognition (Sminchisescu, Kanaujia, & Metaxas, 2006), social signal processing (Vinciarelli, Pantic, Bourlard, & Pentland, 2008), and surface articulation (Horaud, Niskanen, Dewaele, & Boyer, 2009).

Moeslund et al. (2006) have surveyed this progress in vision-based human motion capture research, and point in particular to progress regarding initialization, tracking, human motion reconstruction, pose estimation, and recognition. Niebles, Chen, and Fei-Fei (2010) proposed an algorithmic model that identifies and classifies temporal qualities, and the model developed by Kulkarni, Boyer, Horaud, and Kale (2011) identified “actemes” (akin to linguistics’ phonemes) in order to address dynamics beyond position. Lucena, Blanca, Fuertes, and Marín-Jiménez (2009) used accumulated local histograms of optical flow in order to obtain good video sequence classifiers for human action recognition. This was also explored by Pers, Sulic, Kristan, Perse, Polanec, and Kovacic (2010) with regard to identification and security.

However, there has been little consensus on what general-purpose descriptors should be used across the variety of computational models of movement, as Gavrila (1999) pointed out early on. This remains the case: in his overviews of vision-based motion capture research, Poppe (2007, 2010) noted that evaluating motion analysis algorithms requires a common database. Attempts such as the HumanEva database aim to build a consensus on descriptors (Sigal, Balan, & Black, 2010). Although much work has been done in this area, “many issues remain open such as segmentation, modeling and occlusion handling” (Wang, Hu, & Tan, 2003, p. 596).
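A common database matters because it lets different algorithms be scored against the same ground truth with the same error measure. One widely used measure is the mean Euclidean distance between estimated and ground-truth joint positions, which is close in spirit to the measure proposed with HumanEva. The sketch below is illustrative only: the joint names, millimetre units, and single-frame scope are our assumptions, not the HumanEva specification.

```python
import math
from typing import Dict, Tuple

Point3 = Tuple[float, float, float]  # joint position, assumed here to be in millimetres

def mean_joint_error(estimate: Dict[str, Point3], truth: Dict[str, Point3]) -> float:
    """Mean Euclidean distance between estimated and ground-truth joints for one frame."""
    missing = truth.keys() - estimate.keys()
    if missing:
        raise ValueError(f"estimate lacks joints: {sorted(missing)}")
    return sum(math.dist(estimate[j], truth[j]) for j in truth) / len(truth)

# Usage: two hypothetical joints, off by 30 mm and 40 mm respectively.
truth = {"head": (0.0, 0.0, 0.0), "right_hand": (100.0, 0.0, 0.0)}
estimate = {"head": (0.0, 0.0, 30.0), "right_hand": (100.0, 40.0, 0.0)}
error = mean_joint_error(estimate, truth)
```

A score like this makes systems comparable, but it reflects the concern raised here: it reduces a movement to a single number about positional accuracy, with no visual reference to the movement itself.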

These algorithmic analyses of movement from computer science inform cutting-edge developments in technology, such as sensors and software, that concern the precision of movement identification and prediction. The focus is on mathematically identifying or modeling movement. Evaluation concerns the resulting models and mathematics, presented in graphs, as statistics, or as equations, often without visual reference to the origins of the data or to the movements themselves. For the collaborative creative processes involved in much of interaction design, which engage non-programmers and non-developers, these computational approaches present a challenge. Choices will have been made and parameters set regarding extraction, abstraction, and presentation, in particular of temporal dynamic relations and their representations. It is these choices that are often difficult to gauge in the descriptions of the final designs. Blaauw and Brooks (1997) wrote that “when reading the professional paper describing the architecture of a new machine, it is often difficult to discern the real design dilemmas, compromises, and struggles behind the smooth, after-the-fact description” (p. vii).

For movement in particular, these choices are not yet guided by conventions (as the HumanEva project’s attempts to standardize descriptors show), yet they are important if we are to understand the potential of movement data as communication, and the semantic properties of its dynamics. This is particularly so because “through computation, we are in a position to develop more personalized, customized, and richer technologies. By abandoning the tendency to generalize reality, digital mapping technologies undermine the role ergonomic surveys play in the measurement of organic bodies” (Silver, 2003, p. 109). It is arguably here that we can see a role for design in exploring movement for digital communication.

A Design Perspective: From Score to Tool for Design

In order to inform design and design practice, we decided to approach movement as a design material. Designers work informed by their materials. Sennett (2008) referred to such knowing as “engaged material consciousness” (p. 120). The designing of movement-based interactions introduces elements that until recently have not been thought of as conventional materials for designers. These include software (Blevis, Lim, & Stolterman, 2006; Hallnäs, Melin, & Redström, 2002; Löwgren & Stolterman, 2004), time and space (Mazé & Redström, 2005), screens (Eikenes & Morrison, 2010), and networked objects (Nordby, 2010).

Our focus, then, is on the dynamic, moving body as a material and a mode. In other words, we are interested in understanding how movement data may be read, interpreted, shaped, presented, and applied in order to design from it, with it, and for it. “Computational technology gives us a very rich material to express interaction design form” (Hallnäs, 2011, p. 77). Software, then, is also a material that the designer may shape as it draws on movement. However, computation poses a challenge, as “computations need to be combined with other materials to come to expression as material” (Vallgårda & Redström, 2007, p. 513). When designers explore a material, they touch and stretch and shift and shape it. With traditional materials such as clay or wood this can be direct and instant, but with computation these explorative physical acts are less available. Thus, when designers work with movement data, there is an initial design stage in which the data is ordered and itself abstracted; the data is usually presented and visualized as numbers or graphs, but it is also possible to re-map the data and present it in such a way that we can see the data as we see movement.

Schön (1991) described such processes as the designer having a conversation with the material. Dearden (2006) further discussed how the process of designing with digital materials can “be sought in the material properties of digital systems” (p. 399). Ashbrook and Starner (2010) pointed out that motion gesture control rarely appears outside game consoles, and suggested that this may be because interaction designers are not experts in pattern recognition. How, then, may movement data be made available to designers so that they can recognize patterns and structures of meaningful movement in the data?
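To give a sense of the pattern-recognition expertise involved, consider one common technique for comparing movement sequences: dynamic time warping (DTW), which aligns two sampled trajectories that may differ in timing before measuring how far apart they are. It is offered here only as an example of the kind of technique in question; the source does not say which methods Ashbrook and Starner used, and the function below is a plain textbook formulation rather than any particular toolkit’s API.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]  # one sample of a trajectory, of any dimension

def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Dynamic time warping cost between two sampled trajectories."""
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of the first i samples of a with the first j of b
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(a[i - 1], b[j - 1])
            # extend the cheapest neighbouring alignment: repeat a sample of a, of b, or advance both
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

# Usage: the same 1D path performed at a steady pace and with pauses.
steady = [(0.0,), (1.0,), (2.0,)]
paused = [(0.0,), (0.0,), (1.0,), (1.0,), (2.0,)]
wider = [(0.0,), (2.0,), (4.0,)]
same_shape = dtw_distance(steady, paused)
different_shape = dtw_distance(steady, wider)
```

Here the paused performance aligns with the steady one at zero cost, while a movement of a different extent does not. DTW thus discounts differences in timing, which are precisely the dynamic qualities this paper argues may carry meaning.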

We address this question by developing a tool that shows how movement data comes to be such a computational composite. This is essential if we are to explore movement data in interaction design. For each digital abstraction of movement, we get data that we then need to present or visualize in order to understand what is registered and how, and then again to identify which data we need and how we may use it. What is needed is a tool that allows various levels and types of abstraction to be drawn from the data. This enables a kind of stretching, molding, shifting, and shaping of the data, similar to what we might do to clay or fabric in order to understand its nature, properties, and potential for design. Hansen (2011) has previously argued that for interaction design to explore expressive movement as communication, designers may draw on choreographic practice, and in particular on the digital tools and techniques that computation now makes possible for choreography. From a design perspective, Hansen (2011) found that movement data needed to be accessible, visualized, and generative in order to communicate the potentials and possibilities of the movement data as a material for designing. Designers, artists, and other non-programmers are now increasingly able to access software developments and applications through open source code, such as Pure Data, Processing, and openFrameworks. These are described as toolkits, where you can use and build upon available code, and in turn add your own. In this way, interaction design is increasingly exploring creative computation. However, code for movement has been explored with a predominant focus on sound. Birringer (2002) noted “that choreographers have been working with code that was by and large written by and for musicians (e.g., BigEye, Image/ine, Max/MSP, VNS). Such code may not be ideal for physically rich and complex action” (p. 146).
Also, digital tools for advanced motion tracking mainly rely on markers or site-specific annotation. Motion capture systems such as Qualisys and OptiTrack are sophisticated and precise, but prohibitively expensive, immobile, and have a high user threshold.9

Additionally, performance and dance-related research projects are now gradually developing their own digital tools as part of their research, such as Motionbank’s Piecemaker, TKB’s Creation-tool, Openendedgroup’s Field or Badco.’s Whatever Dance Toolbox.10 These projects exemplify high-level handling of movement data as an expressive material, where the movements usually relate to a particular choreographer and the tools are designed for creating movement for the stage, such as Troika Ranch’s Isadora11 or Actionplot (Carlson, Schiphorst, & Shaw, 2011).

As we were exploring movement and movement data, we found it particularly fitting to engage in “reflective practice, the framing and evaluation of a design challenge by working it through, rather than just thinking it through” (Klemmer, Hartmann, & Takayama, 2006, p. 142), and also to acknowledge the connected nature of physical action and cognition (Schön, 1991). Foster (1995) argued that the conventions through which the meaning of the body is conveyed need to be accessible, and that “as long as every body works to renew and recalibrate these codes, power remains in many hands. Otherwise the conventions will take us ‘unawares’ and gain the upper hand” (p. 19). Foster further critiqued the ease with which other disciplines adopt concepts and concerns built up through bodily-based practices, e.g., performativity (Foster, 1998). This is why we think it is important to move our bodies as designers (Hummels, Overbeeke, & Klooster, 2007), and to explore the nature of movement data ourselves by developing an approach to movement for interaction design. Further, we see it as essential that this be extended to the development of tools for visualizing movement data. In short, we took a practice-based, bodily-present approach to research through designing in the exploration of movement as a design material.

In framing code as a way to handle data from a designer’s perspective, code becomes a tool for the designer, as well as a material. Discussing how tools are designed is important because, as Haigh (2009) argued, “software tools encapsulate craft knowledge, working practices, and cultural assumptions” (p. 7). Hence, such exploration of movement data can inform a professional vision, that is, “socially organized ways of seeing and understanding events that are answerable to the distinctive interests of a particular social group” (Goodwin, 1994, p. 606). In turn, this informs practice in the sense that just as a trained typographer will see the potential in a poster as well as the actual poster, a trained choreographer will see movement potential as well as the actual movement. We seek to enable such a skilled vision (of movement) by finding ways to materialize movement for designers.

Identifying Movement Qualities for Interaction Design

In investigating how to conceptualize movement for interaction design, with the aim of addressing the notion of vitality (Stern, 2010), we found that we needed to work out a way to identify movement qualities. As in most design projects, there was a need to identify and understand the constituent parts of what we were designing with. This differs from studies that compare movement phrases (e.g., Gesture Follower) or from other higher-level abstractions that model emotional states (e.g., EyesWeb).12

In order to inform a conceptualization of movement for design, we developed a schema for parsing movement visually. To do this we drew on a variety of knowledge from training as a dancer, the professional practice of a graphic designer and an interaction design educator, as well as design and research in new media and communication design. This diverse knowledge and experience was drawn together with the body of interdisciplinary research presented above. We further drew on social semiotics in order to devise a set of semantic properties for understanding movement dynamics.

The meaning-making processes involved in working with design materials also correlate closely with the process of meaning-making, or signification, in social semiotics, which attends to situated use rather than aiming to settle on overarching rules or specific structural grammars. Social semiotics explores how meaning is made in the process of adopting, using, and modifying signs or resources in situated use (Kress & van Leeuwen, 2001). It is therefore appropriate to frame movement material as a semiotic resource, defined as “the actions and artefacts we use to communicate, whether they are produced physiologically—with our vocal apparatus; with the muscles we use to create facial expressions and gestures, etc.—or by means of technologies—with pen, ink and paper; with computer hardware and software; with fabrics, scissors and sewing machines, etc.” (van Leeuwen, 2005, p. 3). By investigating the nature of movement data in this way, we explored movement as a material beyond its current functions and applications. It is important to note that to date little work has been done on movement in social semiotics, despite the focus on multimodality in the past decade (e.g., Morrison, 2010).

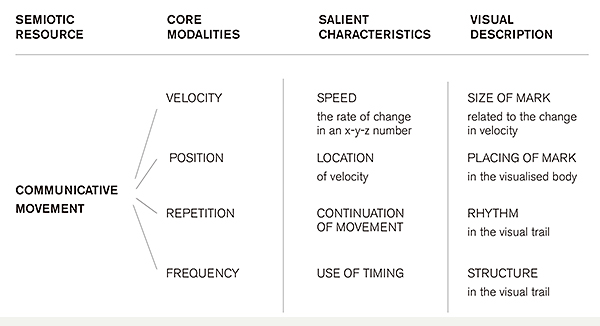

In designing with movement as a design material, we drew on Kendon's approach to classifying greetings. Kendon (1990) found that there was no “absolute” boundary for identifying a greeting, and resolved to search for “patterns of organization at the most inclusive level first” (p. 10). Similarly, we aimed to identify the visual aspects that make up the dynamics of communicating full-body movement. We further drew on van Leeuwen's (1999) approach to sound.13 In parsing sound, van Leeuwen listed its modalities and described each by its salient or “marked” characteristics, which could in turn be described by naming more specific characteristics. This process creates a network of choices for mapping a material; in our case it formed a system of choices about movement, which we formulated as a Movement Schema (see Figure 3).

Figure 3. A schema for identifying semantic properties of movement dynamics for interaction design.

The Movement Schema is designed to support an understanding of how to communicate movement through the notion of modalities in Semiotic Resources. These are mapped in increasing detail from left to right. The first column, Core Modalities, covers Velocity, Position, Repetition, and Frequency; these address the major modes of movement. Each then has a set of Salient Characteristics (Speed, Location, Continuation of Movement, and Use of Timing), followed by a Visual Description comprising Size of Mark, Placing of Mark, Rhythm, and Structure. These aspects are described further below, beginning with the categories related to Core Modalities:

Velocity: As Stern (2010) discussed, vitality is central to human experience, yet it is “hidden in plain view” (p. 3). In trying to capture this quality in movement data, we address Sheets-Johnstone's (1999b) critique of the prevailing, limited view of movement as equivalent to a change in position: what changes position are objects in motion, not movement. Movement is thus not equivalent to objects in motion. This shifts the focus to how a part of the body moves rather than to its passing from position to position; the main communicative element may indeed lie in how a movement is achieved. This allowed us to emphasize the velocity of each point visually and to find ways to visualize the rate of change in the position of a body part, rather than where it started or ended or what it produced.

Position: This leads to a consideration of location. What matters here is not the overall pose or posture and its location, but the position at which velocity occurs. This follows from an understanding that the body is multimodal as well as embodied, and that when we communicate several semiotic systems are at play at any one time (Streeck, Goodwin, & LeBaron, 2011). It is therefore important to see which part is moving and where (relative to the rest of the body). This shifts the focus from the position of the body to the meaning of the position of moving body parts.

Repetition and Frequency: A shift, such as turn-taking or framing, to use Goffman's term (1986), can be seen in the velocity and its position over time, for example in how and when a certain change or velocity is repeated and how often this repetition occurs. Meaning depends on reading a movement in context: are all movements repeated, or only a single one? If only one is repeated, greater emphasis may fall on that particular movement. This happens in conversation when we quickly adapt to the “language” of the other to ensure that we are understood and that we understand the other (Tversky, Morrison, & Zacks, 2002). In this way a single movement is always interpreted in the context of all the other movements. This led us to add Repetition and Frequency as central to understanding movement data or, in Kendon's terms, as central patterns at the most inclusive level.

The description of these four categories or modalities indicates some of the complexity of communicating with our bodies. The choice of these particular modalities was also informed by the earlier parsings of movement discussed above, for example in dance (e.g., Guest, 2005; Schrader, 2005) and computer science (e.g., Bacigalupi, 1998; Badler & Smoliar, 1979), as well as in linguistics and anthropology. It is important to note, however, that this is one suggestion for how to parse movement with the aim of visualizing movement data for interaction design. It is neither an overarching nor a fixed taxonomy, but rather an initial step in identifying qualities relevant to interaction design as movement is increasingly read and applied in the field. Such a Schema also opens up areas for discussion and evaluation. The Schema therefore provides a meta-vocabulary and, with it, suggestions for communicative characteristics of movement. Whereas Morrison and Tversky (2005) argued that “naming seems to activate the functional aspects of bodies” (p. 696), social semiotics provides the means to describe and explain these resources with regard to meaning making, and in turn to describe and explain how they may be understood, taken up, designed, and applied.

We added the last column, Visual Description, to the Schema as a proposed visualizing component. The Schema formed the basis for designing a tool for visualizing such movement modalities, Sync, which is described and illustrated in detail below.
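One way to read the Schema's columns is as a small lookup table running from left to right. The Python sketch below assumes that the Core Modalities, Salient Characteristics, and Visual Descriptions pair up item by item in the order the text lists them; the names `SchemaRow`, `MOVEMENT_SCHEMA`, and `describe` are hypothetical and are not part of Sync.

```python
from typing import List, NamedTuple


class SchemaRow(NamedTuple):
    """One path through the Movement Schema, read from left to right."""
    core_modality: str
    salient_characteristic: str
    visual_description: str


# Assumption: the three lists in the text correspond item by item.
MOVEMENT_SCHEMA: List[SchemaRow] = [
    SchemaRow("Velocity", "Speed", "Size of Mark"),
    SchemaRow("Position", "Location", "Placing of Mark"),
    SchemaRow("Repetition", "Continuation of Movement", "Rhythm"),
    SchemaRow("Frequency", "Use of Timing", "Structure"),
]


def describe(modality: str) -> SchemaRow:
    """Look up the row for a core modality (case-insensitive)."""
    for row in MOVEMENT_SCHEMA:
        if row.core_modality.lower() == modality.lower():
            return row
    raise KeyError(f"unknown core modality: {modality!r}")
```

Under this reading, `describe("Velocity").visual_description` gives `"Size of Mark"`, which matches the way Sync ties a point's rate of change to the size of its mark.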

Designing Ways to Visualize the Computational and Corporeal in Movement Data

Sync was developed by the first author, the designer Lise Amy Hansen, together with Hellicar&Lewis, the interaction designers Peter Hellicar and Joel Gethin Lewis. We thus drew on specialist knowledge from design and dance, design and skateboarding, and design and mathematics, covering movement, programming, and visual communication. The design methods for making the tool were collaborative, reflecting the kinds of processes the tool aims to support (Sanders & Stappers, 2008). A brief description of the process follows; the many alternative iterations and investigations are beyond the scope of this article.

An initial workshop in 2010 began with discussions of movement issues (such as those outlined in this article) and of the current role of movement in interactions, installations, and interactive performances. This contributed to a joint understanding of movement as communication and of the kinds of interactions this understanding could inform. We also shared knowledge of the tools at our disposal and what they could do (e.g., relevant programming toolkits such as openFrameworks14 and available sensors). We saw that current digital tools for marker-less movement data mainly employed body outline data (e.g., blob recognition), and we realized there was much to unravel about how movement played out ‘within’ a single body. We also wanted to address the whole body, that is, to go beyond the fingers, hands, or arms typically dealt with in confined spaces such as desktop-based scenarios.

Our focus was on identifying how designers may arrive at semantic properties of the dynamics of movement in the data, e.g., by abstracting how the body or its parts moved. The Movement Schema served as our design brief, in the sense that we sought parameters for visualization that reflected the modalities in a variety of ways (rather than a separate visual for each modality). Fry (2007) described such data visualization processes in seven stages: acquire, parse, filter, mine, represent, refine, and interact. We decided to draw on the movement data from Microsoft's Kinect sensor.15 It uses video and depth data from infra-red sensors to identify 14 points, as x-y-z coordinates, representing the feet, knees, hips, shoulders, elbows, hands, torso, and head (see Figures 2 and 4). However, the tool we developed could equally take its feed, i.e., x-y-z points, from other sensors.

Figure 4. An indication of the scope of the Kinect sensor and an example of the set-up with a laptop running Sync, with the GUI menu. For exact measurements of Kinect’s scope see Dutta (2012).

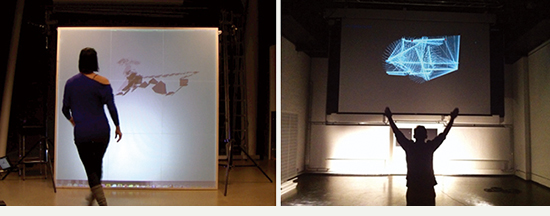
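Because the tool only needs a stream of x-y-z points, its input can be thought of as an interface that any sensor could implement. The following Python sketch assumes that reading; the joint labels and the names `SkeletonSource`, `ListSource`, and `complete_frames` are hypothetical illustrations, not the Kinect API or Sync's own C++ code.

```python
from typing import Dict, Iterable, Iterator, Protocol, Tuple

Point = Tuple[float, float, float]   # x, y, z of one joint
Frame = Dict[str, Point]             # joint name -> position

# The 14 points named in the text; the labels themselves are our own.
JOINTS = (
    "head", "torso",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_hand", "right_hand", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_foot", "right_foot",
)


class SkeletonSource(Protocol):
    """Anything that yields frames of x-y-z points: a depth sensor, another tracker, a file."""

    def frames(self) -> Iterator[Frame]: ...


class ListSource:
    """In-memory stand-in for a sensor, e.g. for testing a visualization offline."""

    def __init__(self, frames: Iterable[Frame]) -> None:
        self._frames = list(frames)

    def frames(self) -> Iterator[Frame]:
        return iter(self._frames)


def complete_frames(source: SkeletonSource) -> Iterator[Frame]:
    """Yield only frames in which every expected joint has a position."""
    for frame in source.frames():
        if all(joint in frame for joint in JOINTS):
            yield frame
```

Swapping the Kinect for another tracker would then mean writing another class with a `frames()` method, leaving the visualization code untouched.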

We collaborated remotely as well as in workshops in 2011 and 2012. We continuously tried out the visualizations by exploring our own movements through them, and invited colleagues from choreography and interaction design to explore the various visualizations. On these occasions we projected the generated movement data visuals on large screens so people could see their own data as it played out (see Figures 5 and 6).

Figure 5. Moving their own data around in real time in the 2011 and 2012 design workshops.

Figure 6. Here Sync visualizes hands, hips, and shoulders by connecting each pair with a line, and shows the data from a martial art sequence.

Sync: A Tool for Visualizing Movement Data

The outcome of these design workshops was the tool Sync. It is a script, that is, lines of code that call upon the movement data and, in so doing, “organizes the data and presents patterns and relations, structures and dynamics that may otherwise be near invisible to us” (Hansen, 2013).

Parameters and Options

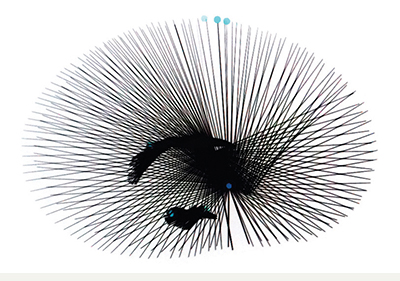

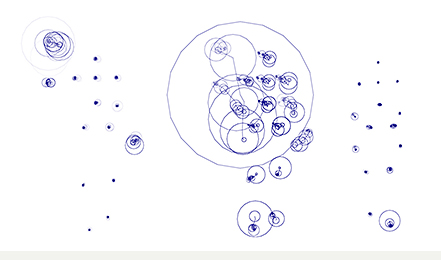

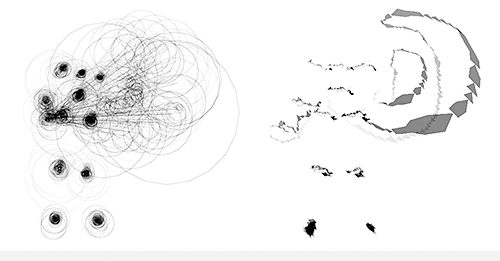

The tool has a graphical user interface (GUI) with a range of options for how the movement data is presented dynamically. The data comes from the Kinect's tracking and identification of the body through 15 points in x-y-z coordinates. With Sync, these x-y-z points may be visualized in different ways: as vertical lines, horizontal lines, circles, and ribbons (see Figure 7). Each visualization choice foregrounds different qualities of the movement data.

Figure 7. Sync can visualize each x-y-z point as one, several, or all of the following marks: circle, vertical line, horizontal line, and ribbon.

The rate of change in an x, y, or z value (i.e., in the position of a point relative to the Kinect sensor) was indicated by a change in the size of the visual mark representing that point. That is, movement is registered as a change along any of the x, y, or z axes, measured by the rate of change in position. This rate is in turn visualized, so that an increase in speed enlarges the marks: the circle grows, the lines lengthen, or the ribbon widens (see Figure 8).

Figure 8. This composite image shows three stills of visualized data from a simple raised (right-hand) waving action ending with a small (left) foot shuffle. The size or scope of the movement is shown by the line trailing the x-y-z points, while the size of the circles reflects the rate of change of each point.
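The mapping from rate of change to mark size can be sketched as follows. This is a minimal Python illustration, not Sync's implementation: it assumes a fixed time step between frames, combines the x, y, and z changes into a single Euclidean speed, and uses hypothetical tuning values (`base_size`, `gain`, `max_size`) to scale and cap the mark.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # x, y, z of one tracked point, in sensor units


def speed(prev: Point, curr: Point, dt: float) -> float:
    """Rate of change in position between two consecutive frames (units per second)."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    # Assumption: the x, y and z changes are combined into one Euclidean distance.
    return math.dist(prev, curr) / dt


def mark_size(prev: Point, curr: Point, dt: float,
              base_size: float = 2.0, gain: float = 0.05,
              max_size: float = 80.0) -> float:
    """Size of the mark drawn for a point: faster movement gives a bigger circle,
    a longer line or a wider ribbon. base_size, gain and max_size are hypothetical."""
    return min(max_size, base_size + gain * speed(prev, curr, dt))
```

For example, a point that moves 5 units in 0.5 s has a speed of 10 units per second and, with these defaults, a mark size of 2.5, while a still point keeps the base size. A linear mapping with a cap is only one option; a logarithmic curve, for instance, would make small movements more visible.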

The marks are drawn continuously, which leaves a visual trail in which repetitions may build up visually and relationally; for example, the frequency of a movement may become apparent by comparing parts of the trail (see Figure 9).

Figure 9. In these comparative visualizations of a wave, the x-y-z points are visualized by the ribbon mark (right) and by the circle mark (left). The velocity or rate of change in a point, here the elbow and hand in particular, is visualized by a wider ribbon or bigger circle.

The interface also allows the mark visuals to be shaped by size, line width, and density through sliders (see Figure 2). This allows decisions on how the movement data appears with regard to sensitivity (a large mark can, for instance, obscure a reading of small movements), detail (a thin line width allows precise positionings of a movement to be seen), and history (the denser the mark, the harder it is to see the history as it builds up visually and overlaps). In addition, each mark has a history, or visual trail, whose length is controlled by a slider.
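The history slider can be sketched as a fixed-length buffer of marks for each tracked point. In this hypothetical Python sketch, `Trail` and `history_length` are illustrative names; only the history control is modelled, not size, line width, or density.

```python
from collections import deque
from typing import Deque, List, Tuple

Mark = Tuple[float, float, float]  # screen x, screen y, mark size


class Trail:
    """Visual history for one tracked point. history_length stands in for the
    history slider; the name and default value are hypothetical."""

    def __init__(self, history_length: int = 60) -> None:
        self._marks: Deque[Mark] = deque(maxlen=history_length)

    def add(self, mark: Mark) -> None:
        # When the buffer is full, the oldest mark is discarded automatically.
        self._marks.append(mark)

    def set_history_length(self, n: int) -> None:
        # Moving the slider keeps only the n most recent marks.
        self._marks = deque(self._marks, maxlen=n)

    def marks(self) -> List[Mark]:
        """Marks from oldest to newest, i.e. in drawing order."""
        return list(self._marks)
```

Drawing every mark in `marks()` on each frame produces the trail in which repetitions build up; a longer history makes frequency easier to compare, at the cost of more overlap.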

We also created an option to track pairings of points: shoulders, hips, and hands. Each pairing may be visualized by a line connecting the two points or by a line extending to the edges of the screen, amplifying their alignment (see Figure 1).
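Extending a pair's connecting line to the screen edges is a line-clipping problem. The Python sketch below assumes the two points (for example, the left and right shoulders) have already been projected to 2-D screen coordinates with the origin at a corner; `extend_to_edges` is a hypothetical helper, not part of Sync.

```python
import math
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


def extend_to_edges(p1: Vec2, p2: Vec2, width: float, height: float
                    ) -> Optional[Tuple[Vec2, Vec2]]:
    """Extend the infinite line through p1 and p2 to where it meets the screen edges.
    Returns the two edge points, or None if the line is undefined or misses the screen."""
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    if dx == 0 and dy == 0:
        return None  # coincident points: no direction to extend along
    # Parametric line p = p1 + t * (dx, dy); narrow t to the part inside the screen.
    t_min, t_max = -math.inf, math.inf
    for start, delta, limit in ((p1[0], dx, width), (p1[1], dy, height)):
        if delta == 0:
            if not 0 <= start <= limit:
                return None  # parallel to this pair of edges and outside them
            continue
        t0, t1 = (0 - start) / delta, (limit - start) / delta
        t_min = max(t_min, min(t0, t1))
        t_max = min(t_max, max(t0, t1))
    if t_min > t_max:
        return None  # the line passes outside the screen
    return ((p1[0] + t_min * dx, p1[1] + t_min * dy),
            (p1[0] + t_max * dx, p1[1] + t_max * dy))
```

For level shoulders at (300, 200) and (500, 200) on an 800 by 600 screen, the extended line runs from (0, 200) to (800, 200); any tilt in the shoulders is magnified across the full width of the screen.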

Sync has screens showing the video and depth data feed as well as a screen where the data is visualized according to the parameters set in the GUI.

Record, Repeat, and Reveal

We also chose to include a recorder function in the tool. This allows the movement data from the Kinect, along with the video and depth information, to be stored, accessed, and replayed, so that alternative representations of the same movement can be explored as the data “runs” over and over again. For each run, the designer may choose a different visualization, and by playing with the options available in Sync, the designer may become familiar both with the tracking parameters (e.g., what the Kinect registers and how) and with the movement repertoire (e.g., the kinds of movements performed in that specific context and location). This matters given the wealth of data available for visualization (see Figure 10).

Figure 10. The graph becomes visually complicated if too many parameters are visualized. Shown are Sync's video and depth data feeds and a close-up of the data visualization (available at http://kinetically.wordpress.com/sync-download/).

This enables the designer to fine-tune exactly which aspects of a movement are tracked, giving a more sophisticated reading of movement. Sync can, for example, be set to show only fast movement, or to show a long history of movement in which densely marked areas identify points where there is little movement (see Figure 1).
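Recording, replaying, and filtering can be combined in a short sketch. This hypothetical Python version stores only skeleton frames (Sync also stores video and depth), assumes a fixed frame interval, and uses illustrative thresholds. The `fast_only` view keeps fast movement, while the `dwell` view counts samples per grid cell so that dense areas, where a joint lingered, stand out. None of these names come from Sync.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float, float]   # x, y, z of one joint
Frame = Dict[str, Point]             # joint name -> position (illustrative labels)


class Recorder:
    """Stores skeleton frames so that one movement can be replayed many times.
    Unlike Sync's recorder, this sketch keeps no video or depth images."""

    def __init__(self, dt: float) -> None:
        self.dt = dt                     # assumed fixed interval between frames (s)
        self.frames: List[Frame] = []

    def record(self, frame: Frame) -> None:
        self.frames.append(dict(frame))  # copy, so later edits don't alter the take

    def replay(self, view: Callable[[List[Point], float], object], joint: str) -> object:
        """Run the recorded track of one joint through a chosen view."""
        return view([frame[joint] for frame in self.frames], self.dt)


def fast_only(track: List[Point], dt: float, min_speed: float = 50.0) -> List[Point]:
    """Keep positions reached at or above min_speed (a hypothetical threshold)."""
    return [curr for prev, curr in zip(track, track[1:])
            if math.dist(prev, curr) / dt >= min_speed]


def dwell(track: List[Point], dt: float, cell: float = 50.0) -> Counter:
    """Count samples per x-y grid cell; high counts mark dense, slow areas.
    dt is unused but keeps the signature interchangeable with fast_only."""
    return Counter((math.floor(x / cell), math.floor(y / cell)) for x, y, _ in track)
```

Replaying one take through both views, e.g. `rec.replay(fast_only, "right_hand")` and then `rec.replay(dwell, "right_hand")`, mirrors the idea of running the same data with a different visualization each time.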

Reflections on Sync and Movement Data

Materializing Movement Data

Sync allows designers to decide how movement data is presented. It lets designers set the parameters of the algorithms that call upon the movement data, namely the x-y-z points. In this way, Sync generates dynamic visuals that digitally re-present full-body movement. Because these visualizations can be compared with the video and depth data, the designer gains a particular kind of access to the algorithms: they can explore the movement data through play, twisting, shifting, and shaping the parameters, and thus the visuals. This elasticity is itself a key difference from the tools mentioned previously. Such flexibility also opens up a material understanding of the possibilities of movement data that emerges in and through use, much as squeezing and pulling clay gives insight into material properties such as density and resistance. Exploring the various parameters and algorithms with movement data is a similar materializing process, through which a designer can appreciate how movement and movement data can be worked with, selected, and read.16

Observing a finished object may help communicate material possibilities beyond those employed. However, to make informed choices about scope and fit, as well as effect and ethics, we need to know the material's properties and potentials. Through computation, designers now have novel access to abstracted movement, or movement data. With Sync, designers are in a position to explore the notion of communicating digitally with our movements, and “as we create new interfaces between our bodies and our symbolic systems we are in an unusual position to rethink and re-embody this relationship” (Utterback, 2004, p. 226).

Viewing the Corporeal

By re-presenting movement and abstracting it to a level where we may still recognize a body in the dynamic presentation of the data, we can still draw on our “everyday” yet sophisticated reading of movement as we identify and familiarize ourselves with the data, and thereby with the movement. We have aimed to keep the data aligned so that it can be traced back to the actual body. In this way, we can link the data to physical movement and may re-embody the relational dynamics as we, as designers, become familiar with how the corporeal is stretched into the computational and how the computational abstracts the movements.

However, the same ability to interpret movement data visuals extends beyond what is “there.” As we materialize movement by visualizing the data, we can also notice missing data. Bleeker (2008) addresses this aspect of skillful viewing in her writing on visuality in the theatre: “we always see less than is there,” yet we also see more than what is there (p. 18). The same can be said of computer vision: a tool that represents data visually is set to register only a certain selection of the available information, into which we then read more than what is “there.” It is therefore important that we engage in altering, twisting, and shaping the data to find its grain, plasticity or malleability, and material constraints. First, though, movement itself needs to be understood as a dynamic and accessible material for interaction design.

Accessing the Particulars

In Sync, data from the environment, such as proximity or the nature of the surroundings, is not registered. However, the Sync set-up is portable and relatively unobtrusive. Consequently, Sync enables a design process to take place in specific settings, and in this way addresses the “orientation and meaning” that a backdrop may give (Reed, 1998, p. 523).

Sync is a bottom-up tool; it does not place the movement data in a system of immediate signification, nor does it currently analyze movement in relation to other sets of information, such as location data, time of day, or work tasks. It allows for the analysis of specific movements, and thus, to a certain extent, of situated movement, and avoids the need to read movement according to a particular vocabulary. Sync thereby enables designers to address Franko's concern regarding embodied elements such as culture and gender, in the sense that it allows for designing according to specific settings and for specific movements, needs, and expressions. Digital depth cameras such as the Kinect have existed for a while in a variety of forms. However, “when they become cheap and distributed throughout the culture [...] suddenly people have a new way of expressing themselves” (Levin, n.d.); to this we add: so do designers.

What Does this Mean for Design?

For designers, these temporary visual representations may function like sketchbooks that “encourage exploration of rich and non-obvious spaces of opportunity” (Gaver, 2011, p. 1560). The visuals may be seen as mappings that can inform briefs for future designs. Sync allows designers to visualize a dynamic that would otherwise be buried in the lines of numbers generated by the change in each x-y-z point. Such numbers may be accessible to a programmer, but they are hard for others to interpret. Staying close to the actual movement, visualizing the movement data alongside video and depth data, and providing dynamic, generative visualizations enable the designer to “see” the data and to make informed decisions in linking movement data to communication, function, and aesthetics. This kind of seeing is what Goodwin (1994) calls “professional vision,” and we suggest it is what interaction design needs in order to appreciate movement as a material.

Accordingly, such mapping or exploration of materials may inform uses of movement beyond current functions. Because the arguments and designs laid out in this paper are propositional and explorative, they align with such outcomes. Hansen (2013) has further written about the importance of teasing out and making available the creative decisions involved in handling movement data. These decisions are crucial in the materializing process, as the data is selected, read, and called upon for it to be visualized and its creative potential communicated. In this sense, by making Sync available, we also invite skill and virtuosity in handling this material. The tool is published as open source and is designed to prompt, inspire, and motivate interaction designers and others to engage creatively with movement data and, by extension, with movement as a material.

Conclusion

Movement data, and by extension movement, remain largely inaccessible to designers. Few resources allow designers to explore creatively the various ways of conceptualizing and applying movement qualities in a design process. In our enquiry we framed movement data as a material that can be shaped, and which in turn shapes the design process. We were motivated by the notion that every material has properties that offer particular possibilities for expression and communication.

Above, we outlined notions of the moving, expressive body in interpersonal communication, as an embodied sign and signifier, socially situated, culturally performed, and read. In order to approach such complex movement for digital interactions we looked at how movement has been studied and notated in dance and choreography. We also examined how it has been taken up in non-verbal communication, new media, and communication design. We further looked at how computer science has abstracted and modeled movement, and discussed how computation allows for an increasingly detailed mapping and dynamic re-presentation of movement. Overall, we argued that it is important for interaction designers to be able to appreciate the ways in which movement may be abstracted and re-presented. Materializing movement may then benefit interaction designers in designing for and building upon the nature of movement data.

We presented a Movement Schema that identifies movement qualities according to Velocity, Position, and Repetition and Frequency. This schema addresses the dynamics of movement, rather than more static readings such as posture and location. We then presented Sync, an open source digital application that allows designers to generate dynamic visuals from movement data and compare them with the actual movement. Access to such tools opens up spaces for design in the shaping of movement-based interactions, and enables these spaces to become semiotic and communication design resources. Sync enables designers to explore ways in which movement may become a design material by allowing a variety of choices regarding the parameters of how movement data is visualized, revealing aspects of both the corporeal and the computational qualities of the data. By materializing movement in this way, designers may engage creatively in shaping the complex communicative potential of digital interactions and of our expressive, relational, lived bodies.

Endnotes

- 1. Further, McNeill classified gesture in relation to language into four groups: iconic, metaphoric, deictic, and beat-like gestures (1992). Cadoz and Wanderley categorize gestures according to their perceived function: semiotic (communicating meaningful information), ergotic (manipulating the physical world and creating artefacts), and epistemic (learning from the environment through tactile or haptic exploration) (2000).

- 2. Sheets-Johnstone describes this form as follows: “by the very nature of its spatio-temporal-energetic dynamic bodily movement is a formal happening [….] Form is the result of the qualities of movement and of the way in which they modulate and play out dynamically” (1999, p. 268).

- 3. Similarly, just as “choreography and dancing are two distinct and very different practises” (Forsythe, 2008, p. 5), we can say that designing interaction and experiencing an interaction are two distinct and very different practices: “expression is what makes experience possible, which is why concepts and theories of experience can never provide a logical foundation for design aesthetics” (Hallnäs, 2011, p. 75).

- 4. Related to interaction design, Labanotation and LMA have been applied in HCI from Badler and Smoliar (1979) to Loke et al. (2007) and Loke and Robertson (2010), as well as in studies of dance and anthropology by Farnell (1995) and Williams (2004).

- 5. However, Williams critiques Birdwhistell's notation in a description of hitch-hiking: “When we are told by Birdwhistell that a ‘macro-kinesic’ explanation of this state of affairs is something like this: ‘two members of the species homo sapiens, standing with an intra-femoral index of approximately 45 degrees, right humeral appendages raised to an 80 degree angle to their torsos, in an antero-posterior sweep, using a double pivot at the scapular clavicular joint, accomplish a communicative signal’ we are justified in saying ‘no.’ That is not what we see. We see persons thumbing a ride” (2004, p. 184).

- 6. Similarly, Labanotation is considered the most comprehensive of the notation systems, but it is also necessarily complex: while it is precise, most designers would be unable to see from it what could be changed or altered, and what effect this would have on the rest of the body.

- 7. This differs from analysing movement through photography (as by Muybridge and Marey) or through video, as in the studies from Hall (1966) and Birdwhistell (1971) to Kendon (2004) and Streeck et al. (2011).

- 8. Related work drawing on Labanotation and LMA is Eyesweb, a software for video analysis of movement aiming to recognise the expressive qualities of movement (Camurri et al., 2007; Camurri et al., 2004).

- 9. “These days I think very few people remember or recall that software is made by people, and that software is something that they could make themselves. […] I think it is essential for artists to have a seat at the table in determining the future trajectories of technologies” (Levin, n.d.).

- 10. See http://motionbank.org/en/piecemaker-2/, http://tkb.fcsh.unl.pt/ctkb-introduction, http://openendedgroup.com/field/ and http://badco.hr/works/whatever-toolbox/

- 11. See http://troikatronix.com/

- 12. See http://ftm.ircam.fr/index.php/Gesture_Follower and http://www.infomus.org/eyesweb_ita.php.

- 13. This work builds on the linguist Halliday's systemic-functional approach (Halliday & Matthiessen, 2004).

- 14. openFrameworks is a cross-platform open source toolkit for creative coding in C++ (see www.openframeworks.cc). This choice gave us access to the C++ libraries as well as the speed of computation that a visual approach needs when processing the complexities of tracking physical movement. Open source software also aligns with two important goals of this research project: maintaining relevance to practice (we were able to draw upon cutting-edge developments) and dissemination (we also publish the application: http://kinetically.wordpress.com/sync-download/).

- 15. The sensor makes for a simple set-up, with the small sensor connected to a laptop. This set-up is easily portable and thus highly adaptable for observing and working with movement not just in a controlled lab setting, but in a variety of settings or contexts, however transient, from street corners to bus stops and café entrances.

- 16. The lines of code used in Sync and the actual movement data remain “out of view” and are accessed through the GUI. However, the code that comprises Sync is published; see https://github.com/HellicarAndLewis/Sync

References

- Ashbrook, D., & Starner, T. (2010). Magic: A motion gesture design tool. In G. Fitzpatrick, C. Hudson, K. Edwards, & T. Rodden (Eds.), Proceedings of the 28th International Conference on Human Factors in Computing Systems. New York, NY: ACM Press.

- Bacigalupi, M. (1998). The craft of movement in interaction design. In T. Catarci, M. F. Costabile, G. Santucci, & L. Tarantino (Eds.), Proceedings of the Working Conference on Advanced Visual Interfaces (pp. 174-184). New York, NY: ACM Press.

- Badler, N. I., & Smoliar, S. W. (1979). Digital representations of human movement. ACM Computing Surveys, 11(1), 19-38.

- Bastien, M. (2007). Notation-in-progress. In S. deLahunta (Ed.), Capturing intention (pp. 48-55). Amsterdam, the Netherlands: Emio Greco | PC and Amsterdam School of the Arts.

- Birringer, J. (2002). Dance and media technologies. PAJ: A Journal of Performance and Art, 24(1), 84-93.

- Blaauw, G. A., & Brooks, F. P. (1997). Computer architecture: Concepts and evolution. Reading, MA: Addison-Wesley.

- Bleeker, M. (2008). Visuality in the theatre: The locus of looking. Basingstoke, UK: Palgrave Macmillan.

- Blevis, E., Lim, Y.-K., & Stolterman, E. (2006). Regarding software as a material of design. In Proceedings of International Conference of Design Research Society. Lisbon, Portugal: Instituto de Artes Visuais.

- Boehner, K., Sengers, P., & Warner, S. (2008). Interfaces with the ineffable: Meeting aesthetic experience on its own terms. ACM Transactions on Computer-Human Interaction, 15(3), 1-29.

- Carlson, K., Schiphorst, T., & Shaw, C. (2011). ActionPlot: A visualization tool for contemporary dance analysis. In S. N. Spencer (Ed.), Proceedings of the International Symposium on Computational Aesthetics in Graphics, Visualization, and Imaging (pp. 113-120). New York, NY: ACM Press.

- Chi, D., Costa, M., Zhao, L., & Badler, N. (2000). The EMOTE model for effort and shape. In J. R. Brown, & K. Akeley (Eds.), Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques (pp. 173-182). New York, NY: ACM Press.

- Csordas, T. J. (2002). Body/meaning/healing. Basingstoke, UK: Palgrave.

- Dearden, A. (2006). Designing as a conversation with digital materials. Design Studies, 27(3), 399-421.

- Dutta, T. (2012). Evaluation of the Kinect™ sensor for 3-D kinematic measurement in the workplace. Applied Ergonomics, 43(4), 645-649.

- Eikenes, J. O. H., & Morrison, A. (2010). Navimation: Exploring time, space & motion in the design of screen based interfaces. International Journal of Design, 4(1), 1-16.

- Farnell, B. (1999a). It goes without saying - But not always. In T. J. Buckland (Ed.), Dance in the field: Theory, methods, and issues in dance ethnography (pp. 145-160). Basingstoke, UK: Macmillan.

- Farnell, B. (1999b). Moving bodies, acting selves. Annual Review of Anthropology, 28, 341-373.

- Farnell, B., & Varela, C. R. (2008). The second somatic revolution. Journal for the Theory of Social Behaviour, 38(3), 215-240.

- Foster, S. L. (1995). Choreographing history. Bloomington, IN: Indiana University Press.

- Foster, S. L. (1998). Choreographies of gender. Signs, 24(1), 1-33.

- Franko, M. (1995). Mimique. In J. S. Murphy, & E. W. Goellner (Eds.), Bodies of the text (pp. 205-216). New Brunswick, NJ: Rutgers University Press.

- Fry, B. (2007). Visualizing data. Beijing, China: O’Reilly.

- Gaver, W. (2011). Making spaces: How design workbooks work. In G. Fitzpatrick, C. Gutwin, B. Begole, & W. A. Kellogg (Eds.), Proceedings of the 2011 Annual Conference on Human Factors in Computing Systems (pp. 1551-1560). New York, NY: ACM Press.

- Gavrila, D. M. (1999). The visual analysis of human movement: A survey. Computer Vision and Image Understanding, 73(1), 82-98.

- Goffman, E. (1959). The presentation of self in everyday life. Garden City, NY: Doubleday.

- Goffman, E. (1986). Frame analysis. Boston, MA: Northeastern University Press.

- Goodwin, C. (1994). Professional vision. American Anthropologist, 96(3), 606-633.

- Guest, A. H. (1989). Choreo-graphics: A comparison of dance notation systems from the fifteenth century to the present. New York, NY: Gordon and Breach.

- Guest, A. H. (2005). Labanotation: The system of analyzing and recording movement. New York, NY: Routledge.

- Haigh, T. (2009). How data got its base: Information storage software in the 1950s and 1960s. Annals of the History of Computing, 31(4), 6-25.

- Hall, E. T. (1966). The hidden dimension. New York, NY: Anchor Books, Doubleday.

- Halliday, M. A. K., & Matthiessen, C. M. I. M. (2004). An introduction to functional grammar. London, UK: Arnold.

- Hallnäs, L. (2011). On the foundations of interaction design aesthetics: Revisiting the notions of form and expression. International Journal of Design, 5(1).

- Hallnäs, L., Melin, L., & Redström, J. (2002). Textile displays: Using textiles to investigate computational technology as design material. Proceedings of the 2nd Nordic Conference on Human-Computer Interaction.

- Hansen, L. A. (2011). Full-body movement as material for interaction design. Digital Creativity, 22(4), 247-262.

- Hansen, L. A. (2013). Making do & making new - Performative moves into interaction design. International Journal of Performance Arts and Digital Media, 9(1), 133-149.

- Hollan, J., & Stornetta, S. (1992). Beyond being there. In P. Bauersfeld, J. Bennett, & G. Lynch (Eds.), Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 119-125). New York, NY: ACM Press.

- Horaud, R., Niskanen, M., Dewaele, G., & Boyer, E. (2009). Human motion tracking by registering an articulated surface to 3D points and normals. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(1), 158-163.

- Hummels, C., Overbeeke, K. C., & Klooster, S. (2007). Move to get moved: A search for methods, tools and knowledge to design for expressive and rich movement-based interaction. Personal and Ubiquitous Computing, 11(8), 677-690.

- Ingold, T. (2007). Lines: A brief history. London, UK: Routledge.

- Kendon, A. (1990). Conducting interaction. Cambridge, UK: Cambridge University Press.

- Kendon, A. (1995). Sociality, social interaction, and sign language in aboriginal Australia. In B. Farnell (Ed.), Human action signs in cultural context: The visible and the invisible in movement and dance. Metuchen, NJ: Scarecrow Press.

- Kendon, A. (2004). Gesture: Visible action as utterance. Cambridge, UK: Cambridge University Press.

- Kirsh, D. (2013). Embodied cognition and the magical future of interaction design. ACM Transactions on Computer-Human Interaction, 20(1), 1-30.

- Klemmer, S. R., Hartmann, B., & Takayama, L. (2006). How bodies matter: Five themes for interaction design. In S. Bødker, & J. Coughlin (Eds.), Proceedings of the 6th Conference on Designing Interactive Systems (pp. 140-149). New York, NY: ACM Press.

- Knapp, M. L., & Hall, J. A. (2006). Nonverbal communication in human interaction. Belmont, CA: Thomson/Wadsworth.

- Koskinen, I. (2011). Design research through practice: From the lab, field, and showroom. Waltham, MA: Morgan Kaufmann.

- Kress, G., & van Leeuwen, T. (2001). Multimodal discourse: The modes and media of contemporary communication. London, UK: Arnold.

- Kulkarni, K., Boyer, E., Horaud, R., & Kale, A. (2011). An unsupervised framework for action recognition using actemes. In R. Kimmel, R. Klette, & A. Sugimoto (Eds.), Computer Vision – ACCV 2010 (Vol. 6495, pp. 592-605). Berlin & Heidelberg, Germany: Springer.

- Levin, G. (n.d.). Ask me anything video for FITC. Retrieved 2 December, 2012, from https://vimeo.com/35858119

- Loke, L., & Robertson, T. (2013). Moving and making strange: An embodied approach to movement-based interaction design. ACM Transactions on Computer-Human Interaction, 20(1), 1-25.

- Löwgren, J., & Stolterman, E. (2004). Thoughtful interaction design: A design perspective on information technology. Cambridge, MA: The MIT Press.

- Lucena, M., Blanca, N. P., Fuertes, J. M., & Marín-Jiménez, M. J. (2009). Human action recognition using optical flow accumulated local histograms. In H. Araujo, A. Mendonça, A. Pinho, & M. I. Torres (Eds.), Pattern Recognition and Image Analysis (Vol. 5524, pp. 32-39). Berlin & Heidelberg, Germany: Springer.

- Mazé, R., & Redström, J. (2005). Form and the computational object. Digital Creativity, 16(1), 7-18.

- McNeill, D. (1992). Hand and mind: What gestures reveal about thought. Chicago, IL: University of Chicago Press.

- Moeslund, T. B., Hilton, A., & Krüger, V. (2006). A survey of advances in vision-based human motion capture and analysis. Computer Vision and Image Understanding, 104(2), 90-126.

- Morrison, A. (Ed.). (2010). Inside multimodal composition. Cresskill, NJ: Hampton Press.

- Morrison, J. B., & Tversky, B. (2005). Bodies and their parts. Memory & Cognition, 33(4), 696-709.

- Newlove, J., & Dalby, J. (2009). Laban for all. London, UK: Nick Hern Books.

- Niebles, J. C., Chen, C.-W., & Fei-Fei, L. (2010). Modeling temporal structure of decomposable motion segments for activity classification. Proceedings of the 11th European Conference on Computer Vision (pp. 392-405). Berlin & Heidelberg, Germany: Springer.

- Noland, C. (2009). Agency and embodiment: Performing gestures/producing culture. Cambridge, MA: Harvard University Press.

- Nordby, K. (2010). Conceptual designing and technology: Short-range RFID as design material. International Journal of Design, 4(1), 29-44.

- Pers, J., Sulic, V., Kristan, M., Perse, M., Polanec, K., & Kovacic, S. (2010). Histograms of optical flow for efficient representation of body motion. Pattern Recognition Letters, 31(11), 1369-1376.

- Poppe, R. (2007). Vision-based human motion analysis: An overview. Computer Vision and Image Understanding, 108(1-2), 4-18.

- Poppe, R. (2010). A survey on vision-based human action recognition. Image and Vision Computing, 28(6), 976-990.

- Puri, R., & Hart-Johnson, D. (1995). Thinking with movement: Improvising versus composing? In B. Farnell (Ed.), Human action signs in cultural context: The visible and the invisible in movement and dance (pp. 158-181). Metuchen, NJ: Scarecrow Press.

- Reed, S. A. (1998). The politics and poetics of dance. Annual Review of Anthropology, 27, 503-532.

- Sanders, E. B. N., & Stappers, P. J. (2008). Co-creation and the new landscapes of design. CoDesign, 4(1), 5-18.

- Schiller, G. (2006). Kinaesthetic traces across material forms: Stretching the screen’s stage. In S. Broadhurst, & J. Machon (Eds.), Performance and technology: Practices of virtual embodiment and interactivity (pp. 100-111). Basingstoke, UK: Palgrave Macmillan.

- Schön, D. A. (1991). The reflective practitioner: How professionals think in action. Aldershot, UK: Avebury.

- Schrader, C. (2005). A sense of dance: Exploring your movement potential. Champaign, IL: Human Kinetics.

- Sennett, R. (2008). The craftsman. New Haven, CT: Yale University Press.

- Sheets-Johnstone, M. (1999a). Emotion and movement: A beginning empirical-phenomenological analysis of their relationship. Journal of Consciousness Studies, 6(11-12), 259-277.

- Sheets-Johnstone, M. (1999b). The primacy of movement. Amsterdam, the Netherlands: John Benjamins.

- Sheets-Johnstone, M. (2011). The imaginative consciousness of movement: Linear quality, kinaesthesia, language and life. In T. Ingold (Ed.), Redrawing anthropology: Materials, movements, lines (pp. 115-128). Farnham, UK: Ashgate.

- Sigal, L., Balan, A. O., & Black, M. J. (2010). HumanEva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion. International Journal of Computer Vision, 87(1-2), 4-27.

- Silver, M. (2003). Traces and simulations. In M. Silver, & D. Balmori (Eds.), Mapping in the age of digital media: The Yale symposium (pp. 108-119). Chichester, UK: Wiley-Academy.

- Sklar, D. (2008). Remembering kinesthesia: An inquiry into embodied cultural knowledge. In C. Noland, & S. A. Ness (Eds.), Migrations of gesture (pp. 85-111). Minneapolis, MN: University of Minnesota Press.

- Sminchisescu, C., Kanaujia, A., & Metaxas, D. (2006). Conditional models for contextual human motion recognition. Computer Vision and Image Understanding, 104(2), 210-220.

- Stern, D. N. (2010). Forms of vitality: Exploring dynamic experience in psychology, the arts, psychotherapy, and development. Oxford, UK: Oxford University Press.

- Streeck, J., Goodwin, C., & LeBaron, C. D. (2011). Embodied interaction: Language and body in the material world. Cambridge, UK: Cambridge University Press.

- Thomas, H. (2003). The body, dance and cultural theory. Basingstoke, UK: Palgrave Macmillan.

- Tversky, B., Morrison, J. B., & Zacks, J. (2002). On bodies and events. In A. N. Meltzoff & W. Prinz (Eds.), The imitative mind (pp. 221-232). Cambridge, UK: Cambridge University Press.

- Utterback, C. (2004). Unusual positions - Embodied interaction with symbolic spaces. In P. Harrigan & N. Wardrip-Fruin (Eds.), First person: New media as story, performance, and game (pp. 218-226). Cambridge, MA: The MIT Press.

- Vallgårda, A., & Redström, J. (2007). Computational composites. In M. B. Rosson, & D. Gilmore (Eds.), Proceedings of the 25th SIGCHI Conference on Human Factors in Computing Systems. New York, NY: ACM Press.

- van Leeuwen, T. (1999). Speech, music, sound. Basingstoke, UK: Macmillan.

- van Leeuwen, T. (2005). Introducing social semiotics. London, UK: Routledge.

- Victor, B. (n.d.). Inventing on principle. Retrieved 2 December, 2012, from http://vimeo.com/36579366

- Vinciarelli, A., Pantic, M., Bourlard, H., & Pentland, A. (2008). Social signal processing: State-of-the-art and future perspectives of an emerging domain. In C. Griwodz, A. Del Bimbo, K. S. Candan, & A. Jaimes (Eds.), Proceedings of the 16th ACM International Conference on Multimedia. New York, NY: ACM Press.

- Wachsmuth, I., Lenzen, M., & Knoblich, G. (2008). Embodied communication in humans and machines. Oxford, UK: Oxford University Press.

- Wang, L., Hu, W., & Tan, T. (2003). Recent developments in human motion analysis. Pattern Recognition, 36(3), 585-601.

- Williams, D. (2004). Anthropology and the dance: Ten lectures. Urbana, IL: University of Illinois Press.