Evaluating Feedback Interventions: A Design for Sustainable Behaviour Case Study

Garrath T. Wilson*, Tracy Bhamra, and Debra Lilley

Loughborough University, Loughborough, UK

Design for Sustainable Behaviour is an emerging research area concerned with the application of design interventions to influence consumer behaviour during the use phase towards more sustainable action. However, current research is focussed on strategy definition and selection, with little research into understanding the actual impact of the interventions debated. Here, the authors present three themes as different entry points to the evaluation of a feedback intervention designed to change behaviour towards a sustainable goal: an evaluation of the behaviour changed by the intervention; an evaluation of the intervention’s functionality; and an evaluation of the intervention’s sustainable consequences. This paper explores these themes through a case study of a physical feedback intervention prototype designed with the intention of reducing domestic energy consumption through behaviour change whilst maintaining occupant comfort. In this paper, the authors suggest that questions for evaluating functionality and usability are dependent upon the intervention strategy employed; questions for the evaluation of behavioural antecedents and ethics are applicable to all intervention strategies; finally, questions for the evaluation of sustainable metrics are dependent upon the intervention’s context. More universal lines of questioning are then presented, based on the findings of this study, suitable for cross-study comparison.

Keywords – Behaviour Change, Design for Sustainable Behaviour, Domestic Energy Consumption, Feedback, Sustainability.

Relevance to Design Practice – Through a design case study, this paper offers designers a new approach to the structured evaluation of a product designed to influence a user’s behaviour towards sustainable goals. This research presents three themes as possible entry points to evaluation.

Citation: Wilson, G. T., Bhamra, T., & Lilley, D. (2016). Evaluating feedback interventions: A design for sustainable behaviour case study. International Journal of Design, 10(2), 87-99.

Received February 10, 2015; Accepted April 6, 2016; Published August 31, 2016.

Copyright: © 2016 Wilson, Bhamra & Lilley. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: G.T.Wilson@lboro.ac.uk

Dr. Garrath T. Wilson is a Lecturer in Industrial Design and is part of the Sustainable Design Research Group at Loughborough Design School, UK. His research interests include the psychology of energy consumption and behaviour change strategies; the ethics and design considerations of behavioural interventions; design for sustainable and resilient futures; and more recently, emotionally durable design and product-service systems. Drawing upon an industrial design consultancy background, design has always been central to Garrath’s research approach, generating design concepts and physical prototypes as speculative and disruptive probes or as behavioural change agents. Garrath has written and talked internationally on the topic of Design for Sustainable Behaviour and is enthusiastic about both industry and public engagement.

Professor Tracy Bhamra is the Pro Vice Chancellor (Enterprise) at Loughborough University and Professor of Sustainable Design. Professor Bhamra has extensive research experience in the field of sustainable design initially during her PhD at Manchester Metropolitan University and then following that at Manchester Business School and Cranfield University before joining Loughborough University in 2003 where she established the Sustainable Design Research Group. Her research focuses on Design for Sustainable Behaviour, Methods and Tools for Sustainable Design, and Sustainable Product Service Systems. Tracy has over 150 publications associated with her research and has been awarded over £2.5m of funding for her research from the UK government and research councils and a number of large industrial organisations. Professor Bhamra has supervised over 20 successful PhDs and has acted as an external examiner for PhD theses in the UK and internationally.

Dr. Debra Lilley is a Senior Lecturer in Design at Loughborough Design School, Loughborough University. Debra has an MRes in Innovative Manufacturing, Sustainability and Design and completed the 1st UK PhD in Design for Sustainable Behaviour, an emerging field of research seeking to reduce the environmental and social impacts of products by moderating users’ interaction with them. Debra is Co-Investigator on CLEVER: Closed Loop Emotionally Valuable E-waste Recovery and CORE: Creative Outreach for Resource Efficiency. Both are funded by the Engineering and Physical Sciences Research Council (EPSRC). Debra has extensive knowledge and experience of applying user-centred sustainable design methods and tools to generate behavioural insights to drive design development of less resource-intensive products.

Introduction

In the wake of the United Nations Framework Convention on Climate Change (United Nations, 1992), the Kyoto Protocol (United Nations, 1998), and more recently, the Paris Agreement (United Nations, 2016), there is an increasing global consensus that developed nations must reduce their greenhouse gas emissions to avoid the impending crisis wrought by man-made climate change. This environmental predicament which has necessitated legislative action, such as the UK’s Climate Change Act 2008 (Parliament of the United Kingdom, 2008), has been propagated, in part, by energy consumed within the domestic sphere and the greenhouse gases that are produced as a consequence (Department of Energy and Climate Change, 2008). More efficient technological intervention, however, may not be the solution (Darby, 2006; Mintel, 2009) as it is often the behaviour of the user that drives consumption (Cole, Robinson, Brown, & O’Shea, 2008; Steg & Vlek, 2009). In order to promote a change in domestic energy use, it is critical, therefore, to understand the underlying cognitive and physical factors that influence the individual’s interaction with energy consuming domestic products (Abrahamse, Steg, Vlek, & Rothengatter, 2005).

A project that explored such factors was the Carbon, Control and Comfort: User-centred control systems for comfort, carbon and energy management project [CCC]. The CCC project was a three-year, interdisciplinary project that attempted to reduce domestic energy use in social housing [social housing within the UK can be defined as “housing that is let at low rents and on a secure basis to people in housing need” (Shelter England, n.d.)], through the user-centred design of feedback interventions to change behaviour whilst maintaining tenant comfort levels. The aim of this paper is to present the findings from one aspect of the authors’ contribution to the CCC project: an investigation into how Design for Sustainable Behaviour models and strategies can be implemented within a structured design process towards the reduction of domestic energy consumption within social housing properties. Design for Sustainable Behaviour [DfSB] is a branch of sustainable design theory that presents a catalogue of design-led strategies concerned with influencing user behaviour, during the use phase of a product, towards more sustainable action (Lilley, 2009). DfSB strategies, when applied to the interface between a user and their goal (the product), can be used by the designer to shape an individual’s perception, learning, and interaction (Tang & Bhamra, 2009). This affords the designer the opportunity to challenge the individual’s behaviour, which could influence the individual’s action, thus mitigating or shaping the resulting consequence and impact.

It has been recognised by the majority of researchers working in this field that there exists an axis along which these strategies are positioned, determined by the control or power in decision-making. At one end of this axis are technologically agentive solutions such as intelligent, automatic technologies, whilst the other end of the axis represents user agentive technologies, such as feedback (Lockton & Harrison, 2012; Tang & Bhamra, 2011; Wilson, Bhamra, & Lilley, 2015; Zachrisson & Boks, 2012). However, there are disagreements on the terminology and classification of these strategies, making future research attempts and cross-research discussions difficult without clear and common agreement (Boks, 2012). Whilst an axis structure and design process model are gradually emerging through consensus (Selvefors, Pedersen, & Rahe, 2011; Tang & Bhamra, 2011; Zachrisson, Storrø, & Boks, 2012), the exact relationship between the phases of the design process is yet to become standardised. Coupled with the lack of case studies and the short duration of many of the implemented design processes identified, which tend to focus on the selection of DfSB strategies (Lilley & Wilson, 2013; Wilson et al., 2015), how a behaviour changing DfSB intervention should be evaluated is relatively undetermined, leaving a considerable gap in knowledge. This paper focuses on the qualitative evaluation of a feedback intervention prototype, considering evaluation questioning from a Design for Sustainable Behaviour theoretical perspective.

Evaluation Themes

The objective of this exploratory, qualitative case study is to address a considerable gap that currently exists within the field of DfSB: what are the entry points for evaluating a behaviour changing intervention? Without such an understanding, not only is the actual, tangible behavioural impact of an intervention unknown, but cross-research study comparisons are also difficult. Here, we frame such an evaluation as a series of three fundamental evaluation themes, starting with behaviour change.

Behaviour Change

Often used as a catch-all phrase for all human activity, behaviour can be a rather problematic term due to the proliferation of different and nuanced models and perspectives (Darnton, 2008). The use of models, however, helps one to explore and understand the multiple facets of human behaviour through a simplified representation of complex social and psychological structures (Chatterton, 2011; Oxford English Dictionary, n.d.). Models of behaviour not only differ in their approach and representation of underlying structures, but may also differ in their theoretical perspective. To expand, models may consider the psychological rational antecedents of an individual’s behaviour, focussing on the actor, or they may take the sociological position of how societal elements form and define action, or practice (Chatterton, 2011). Please refer to Pettersen (2013) for an interesting digest and critique of different theoretical perspectives. However, no one model is the correct representation of behaviour; rather, they can be considered as different concepts or lenses through which to view the same subject, although applications in the field of psychology are more ontologically aligned with the core of traditional Industrial Design thinking (concept of user, attitudes, goals, habits etc.).

Considering a psychological approach to behaviour, the individual is central to a rational decision-making process (rational in terms of being a process with known variables and deliberation), with behavioural action influenced by the antecedent structure unique to the individual and their operating context (Chatterton, 2011; Jackson, 2005); by disaggregating behavioural processes into a heuristic framework of multiple parts, understanding of the underlying formation of behaviour is increased whilst also providing numerous points for further study or intervention. In order to determine if the user’s behaviour has changed due to a design intervention, it is imperative to understand the antecedents and the habitual strength of that behaviour targeted for change. Only then can it become possible to recognise and fully evaluate any change in the behaviour attributed to that intervention.

Put forward by Ajzen (1985, 1991), the Theory of Planned Behaviour [TPB] centres on the concept of intention: the intention of an individual to act. Intention, within this model, is driven by belief; rational cognitive decision making by the individual, through the weighing of relevant costs and benefits (Abrahamse & Steg, 2011; Abrahamse et al., 2005; Ajzen, 2002; Jackson, 2005). Although well-known with an extensive history of application, this model suggests that for every deliberate action, there is a reasoned process of conscious/subconscious evaluation by the user, hence the planned moniker. Triandis’ Theory of Interpersonal Behaviour [TIB], although similar to the TPB, differs in its inclusion of habits, which intercede between intention (here composed of attitude, social factors, and affect) and behaviour, acting as a key determinant of the actual enactment of intention, the ensuing behaviour. Both intention and habit are in turn ruled by the facilitating or constraining conditions, the external factors that enable or constrain behaviour (Chatterton, 2011; Jackson, 2005), such as birth attributes, acquired capabilities, situational context, public policy, and economic variables (Stern, 1999). Habits within this model are seen as routinized action enacted without conscious intention, hence their distinct mediating branch outside of intention (Chatterton, 2011). To expand this definition, three characteristics construct a habit: first, a goal must be present and achievable; second, if the achieved goal is satisfactory, the same action is repeatable; and third, a habitual response is governed by the cognitive process that develops through frequency and association of the context and intentional factors. Habits therefore may be identified and assessed through the cognitive decisions made and not through the frequency of the action (Lally et al., 2009; Polites, 2005; Steg & Vlek, 2009).

Verplanken (2006) expands upon this standard definition of habit, stating that the strength of a habit is not determined just by the frequency of past behaviour (frequency based cued learning), but is also constructed of four further parts: lack of awareness, efficiency, difficulty in controlling behaviour, and identity (Bargh, 1994, 1999). Lack of awareness is a lack of conscious decision-making, delegating control of the act to environmental cues. Efficiency relates to the freeing of mental capacity to do other things at the same time through the application of expectation filters. Difficulty in controlling behaviour suggests that a habit is in principle controllable, but that it is difficult to implement the deliberate thinking and planning needed to overrule it. Identity is the reflection of one’s own identity and personal style (Verplanken, 2006; Verplanken, Myrbakk, & Rudi, 2005). Therefore, to include habitual action, one must turn to a model such as the TIB. Based on this definition, the following questions aim to evaluate the changes in context, intentions, and cognitive automaticity caused through design intervention:

- Was the behaviour (as intended by the designer) enacted?

- Did the user’s knowledge change because of the intervention?

- Did the user’s beliefs and value weighting of outcomes change because of the intervention?

- Did the user’s perception of social norms, roles, and self-concept change because of the intervention?

- Did the intervention generate emotional responses in the user?

- Did the facilitating conditions constrain or afford opportunities for the user?

- Did the user have a lack of awareness (conscious decision making) when enacting the intended behaviour?

- Was the user able to do other things (free mental capacity through efficiency) when enacting the intended behaviour?

- Did the user have difficulty in planning or controlling the intended behaviour?

Behaviour change, however, is only one entry point for fully evaluating an intervention that seeks to change behaviour towards sustainable goals; the second to be discussed within this paper is the intervention’s functionality.

Intervention Functionality

Although it is clear that DfSB interventions should be evaluated by their ability to change one’s behaviour, each strategy employed will have different criteria against which to evaluate. There may be a common target, such as reducing resource consumption, but the considerations and limitations of each strategy vary drastically. For example, at the technologically agentive end of the axis are forcing and determining strategies which are designed to ensure or force a change in behaviour, including intelligent context aware technologies and ubiquitous computing (Lilley, 2009). Examples of persuasive technology include speed bumps that force a driver to slow down and windows that open automatically to regulate the temperature within the building. Such products use technology to achieve a consequence specified by the designer, often without the user’s explicit agreement or knowledge. Interventions that negate the user to enforce a change may be evaluated, for example, against the support needed to install and maintain the technology, or to monitor the technology’s effects. Towards the centre of the axis are persuading and behaviour steering strategies (Lilley, Bhamra, & Lofthouse, 2006; Zachrisson et al., 2011). These strategies concern physical or semantic characteristics (Norman, 1988) and the scripting of affordances and constraints (Jelsma & Knot, 2002) to suggest a desired behaviour without forcing action. The majority of everyday products have scripted elements to reduce cognitive fatigue and to help the user to instinctively understand a product’s functionality based on experience and an accumulated visual language. Typical examples of scripting include handles, push plates, and pull bars on doors, each requiring a prescribed tacit interaction. Behaviour steering devices rely on affordances and constraints to encourage a change in behaviour, and thus ergonomics may be a focus when evaluating such products.

Of particular focus within this paper is feedback, a user-agentive intervention strategy that takes the approach of shifting focus towards the consequences of behaviour, framing the positive or negative resulting impact that behaviour has in relation to the antecedents that motivated that action (Abrahamse et al., 2005). Feedback can be defined as an educational tool, used to frame issues caused through behavioural action in order to generate cognitive reflection upon and within the behavioural structure of the individual (Wilson et al., 2015). Without appropriate information the bridging cognitive connections between action and effect are weakened, as impact is not linked by the individual to the behavioural antecedents that precipitated that action, negating any form of evaluation against expectations (intent), or increase in awareness of the consequences of their behaviour (Darby, 2008, 2010; Fischer, 2008). Through a process of cognitive evaluation by the user, future intentions, habits, and behaviours may be influenced (Abrahamse et al., 2005; Burgess & Nye, 2008). Common feedback devices include home energy management systems that can provide instantaneous feedback on energy use to the home owner. When evaluating a feedback intervention, it is important to consider that the ability of information to motivate the individual is not only dependent on its content but also on its delivery method, as this helps to frame the information presented to the individual (Wood & Newborough, 2007).

The frequency, duration, location, and accuracy of the feedback provided help the user to be aware of the consequences of their action (Wilson et al., 2015). The rapid provision of feedback after an action has been shown to reinforce the consequences of behaviour to the user (Abrahamse et al., 2005; Darby, 2006; Fischer, 2008), although different activities will require different frequencies of feedback; cooking activities, for example, may involve several energy decisions and actions across an event compared to turning on the washing machine (Wood & Newborough, 2007). The frequency of feedback should be dictated by when the user acts and is open to change, and when the user actually chooses to acknowledge the information provided. In addition, feedback should always be accurate, as estimated feedback disassociates the individual from the consequences of their action (Hargreaves, 2010; Seligman, Darley, & Becker, 1977). For a display local to action, the duration and aggregation of the information displayed need to be concise to capture immediate interest; a centralised display, such as in a hallway, would show a larger time span, for example energy consumption of all appliances over a week (Wood & Newborough, 2007), although this can vary based on the user’s goals (Van Dam, Bakker, & Van Hal, 2012). The location of the device should also account for user routines, for example when performing the “baseline check” of the energy monitor before going to bed to ensure that all devices are switched off (Van Dam et al., 2012).

The selection of metric, medium, and mode by which feedback is presented should be framed within the intentions and capabilities of the individual targeted (Wilson et al., 2015). Feedback can be presented to the individual through different metrics, such as energy units, cost, and environmental impact. Each uses a different language to frame the context of consumption, thereby activating different intentions within the individual (Fischer, 2008), although it is recognised that a precise understanding may not be necessary; rather, it is the relative movement of the information displayed that helps a consumer “learn what is normal, and what is not” (Anderson & White, 2009; Fitzpatrick & Smith, 2009). The medium by which information is presented also has an effect on its ability to engage with the individual, and thus be comprehended, reflected upon, and effectual (Fischer, 2008). Electronic media provide flexibility of control and display as well as rapid processing capabilities allowing for the presentation of real-time data, although complex devices may, conversely, be difficult for those with a low level of education, technical ability, or free time to understand or engage with; written materials, by contrast, require a lower level of education or technical ability to engage with but may take a considerably longer time to process (Fischer, 2008). In addition, different presentation modes, such as alphanumerical displays or graphical data, need to be comprehensible (Fitzpatrick & Smith, 2009; Hargreaves, 2010; Wood & Newborough, 2007), undemanding and easy to cognitively process (Fischer, 2008), with ambient features easy to map cognitively for implicit evaluation (Ham, Midden, & Beute, 2009; Löfström & Palm, 2008; Maan, Merkus, Ham, & Midden, 2011).

- Do the frequency and duration of the feedback information help the user to bridge action and effect?

- Does the accuracy of the feedback information help the user to associate with their actions?

- Do the contents and metrics of the feedback information resonate with the user’s norms and motivations?

- Does the granulation of feedback information aid a user’s understanding of this information?

- Does the presentation medium affect a user’s ability to engage with the feedback information?

- Does the presentation mode affect a user’s comprehension of the feedback information?

- Can the user interpret and understand the ambient features of the feedback information?

- Does the location of the feedback device affect the user’s interaction with the feedback information?

- Does the feedback device meet the user’s technical expectations?

- Does the comparison of feedback information to ‘others’ help the user to frame their own information?

- Does the user require any supplementary information, goals or reward schemes to motivate action?

- Were there any user challenges that inhibited or countered the feedback information provided?

The next section of this paper discusses the impact of the changed user behaviour, in respect of being ecologically, socially, and economically sustainable.

Sustainable Consequences

Separating Design for Sustainable Behaviour from other behaviour-changing, design-led fields (such as Design for Behaviour Change) is the clear agenda of changing behaviour towards more sustainable action. One of the primary objectives of a DfSB intervention should, therefore, be the changing of a user’s behaviour towards long-term sustainable ends, in respect of being ecologically, socially, and economically sustainable (Bhamra & Lofthouse, 2007), not the changing of a user’s action for immediate gratification at a long-term cost (thereby becoming unsustainable). Through an understanding and measurement of the change in sustainability metrics, the success of a DfSB design intervention can be put into perspective against the intervention’s function and ability to change the user’s behaviour (Lilley & Wilson, 2013). Interventions with different aims and contexts will require different project-specific sustainability impact criteria. As presented in the introduction, the aim of this project is to change the domestic energy consuming behaviour of the user whilst maintaining their comfort levels; therefore, the following questions would be applicable, focussing on the impact criteria of energy consumption and comfort:

- Has net domestic energy consumption changed because of the intervention?

- Has component domestic energy consumption (appliance/space/time) changed because of the intervention?

- Has the net comfort level of the user changed because of the intervention?

- Has the component comfort level of the user (lighting/acoustical/air/thermal) changed because of the intervention?

- Have there been any external interventions?

- Are there any additional sustainable benefits (ecological/economic/social) because of the intervention?

The question of ethics in design is not optional, as technology has ethical connotations whether prescribed towards sustainable ends or not by the designer, therefore also requiring evaluation (Albrechtslund, 2007). Considering DfSB specifically, the issue of ethics is intensified, as the expected behavioural change prescribed through the design intervention by the designer in order to reduce energy consumption may not be in line with the expectations and values of the user (Pettersen & Boks, 2008). Faced with this dilemma, it is suggested that the designer’s motivations and original intent are investigated (Berdichevsky & Neuenschwander, 1999; Fogg, 2003), and that the methods and strategies employed by the designer are ethically evaluated, considering the intervention device as a hypothetical human mediator to aid in this complex and morally subjective assessment (Fogg, 2003; Gowri, 2004). Behaviour change interventions, as with any other technology, can result in many potential uses and outcomes dependent on their interaction with a particular user within a particular context; interventions are inherently multi-stable (Albrechtslund, 2007). Whilst designers clearly play an important role in determining the anticipated outcomes of an intervention, the technology can be interpreted and acted upon by the user in line with their own needs and values, in ways which the designer may not have intended or predicted (Verbeek, 2006). The unpredictable nature of user behaviour may result in rebound effects such as increased consumption, the bypassing of the technology, or the technology being ignored or used in unintended ways (Pettersen & Boks, 2008). Therefore, the moral responsibility for the outcomes of interaction resides with both the designer and the user (Berdichevsky & Neuenschwander, 1999; Fogg, 2003; Pettersen & Boks, 2008).

In order for the designer to ensure that human democratic rights are not violated and that the outcomes of interaction by the user with the product are ethically accounted for, users and other stakeholders should be involved within the design process (Lilley & Lofthouse, 2010; Pettersen & Boks, 2008; Verbeek, 2006). The following questions reflect on the ethics of the user’s changed behaviour, as well as the ethics of the process through which the design intervention was created, the results of which are discussed in the following section:

- Was the designer’s original intent for designing a behaviour intervention ethical?

- Was the designer’s original motivation for designing a behaviour intervention ethical?

- Are the intervention methods employed by the designer ethical?

- Has the designer/user/purchaser taken moral responsibility for the intervention?

- Is the user in control of the intervention?

- Is the level of user control over the intervention acceptably weighted against the intent and motivation of the designer?

- Have the democratic decision making rights of all stakeholders been accounted for in the design process?

- Have the values and morals of all stakeholders been accounted for in the design process?

- Have the values of the stakeholder been evaluated against a robust ethical framework?

- Are the intended outcomes of the intervention ethical?

- Have unintended outcomes been predicted, and are they ethical?

Feedback Intervention Case Study

The CCC project was an interdisciplinary UK project attempting to reduce domestic energy use in social housing, through the user-centred design of feedback interventions to change behaviour. The choice of feedback intervention was based on energy savings as reported by researchers such as Darby (2006), who showed that energy savings of 5% to 15% are possible from direct feedback and 0% to 10% from indirect feedback. It was also anticipated that if the user’s cognitive processes are engaged, cause is correlated with effect, which, as previously described, is fundamental to the user’s learning and understanding of the consequences of their own behaviour and actions, providing a greater potential for spill-over sustainable behaviour and action (Pettersen & Boks, 2008); forcing and determining strategies have a reduced chance of this because they negate the user.

A physical feedback intervention was developed following a comfort and energy study conducted in spring 2010 in Merthyr Tydfil, Wales [an area of the UK with significant unemployment and low levels of education (Office for National Statistics, 2010, n.d.)]. Seven social housing tenements participated in this initial study, varying along several dimensions such as household composition, the built form of the property, and the heating system. In each of the initial studies, a household member was interviewed at home (a semi-structured contextual interview) for approximately an hour, followed by a guided tour (Pink, 2007, 2010) of the same approximate length. Interview questions probed the participant’s background, how the participant defined and created comfort at home, and how the participant used their heating system. The guided tours built upon interview responses, with participants giving a narrated tour of the home to the researcher, reflecting upon artefacts, actions, and experiences in relation to comfort, heating and energy. After a process of identifying insights and opportunities from the research data that had been thematically coded, the following behaviour change brief was derived: to change the behaviour of opening windows with the heating system active using feedback, in order to achieve a reduction in domestic energy consumption whilst maintaining comfort. Following a convergent design process (Cross, 2010), the aim of the final developed concept was to feed back to the participant the status of their heating system in tandem with the status of their windows so as to convey directly the energy consequences of their behaviour. The conveyed information, it was anticipated, would enable the user to consider and examine their actions and bring about a user agentive reduction in waste, i.e., a window left open with the heating system active.

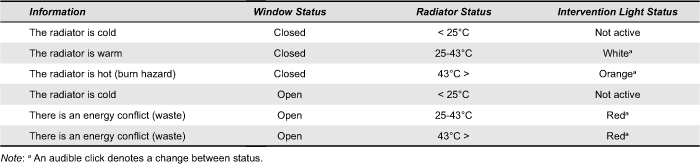

An experimental system prototype (Evans & Pei, 2010) was developed that monitored two input variables—radiator status (surface temperature) and window status (open or closed). Feedback was provided in the form of two output mechanisms: light (white, orange and red LEDs) and sound (piezo buzzer click). For further information on the design process followed, the authors refer you to Wilson (2013). Table 1 summarises the operating conditions and associated feedback response.

Table 1. Feedback intervention prototype statuses.

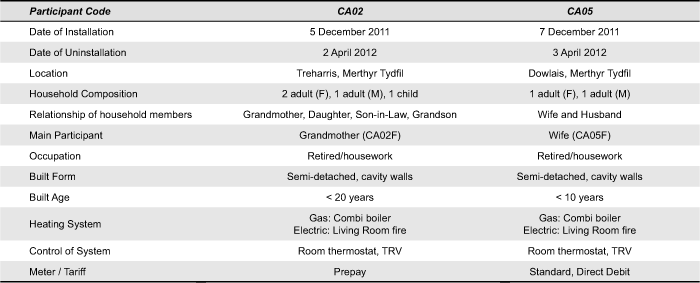
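As an illustration of how the two inputs could map onto the feedback outputs, the following Python sketch reconstructs the status logic from the descriptions given in the text (off when cold, white when heating up, orange when warm or hot, red when a window is open while the radiator is active, and a click on every change of status). It is a sketch under stated assumptions and not the prototype's actual firmware: the temperature thresholds, the function `led_status`, and the class `FeedbackUnit` are hypothetical, and the precedence given to the red status is an assumption.

```python
from dataclasses import dataclass

# Hypothetical thresholds in degrees Celsius; the values used in the
# prototype are not reported in the text.
HEATING_UP_C = 30.0
HOT_C = 45.0


def led_status(radiator_temp_c: float, window_open: bool) -> str:
    """Return the light status for one reading of the two input variables."""
    radiator_active = radiator_temp_c >= HEATING_UP_C
    if radiator_active and window_open:
        return "red"      # waste: window open while the radiator is active
    if radiator_temp_c >= HOT_C:
        return "orange"   # radiator warm or hot
    if radiator_active:
        return "white"    # radiator heating up
    return "off"          # radiator cold


@dataclass
class FeedbackUnit:
    """Tracks the current status and reports when the buzzer should click."""
    status: str = "off"

    def update(self, radiator_temp_c: float, window_open: bool) -> bool:
        new_status = led_status(radiator_temp_c, window_open)
        clicked = new_status != self.status  # click only on a change of status
        self.status = new_status
        return clicked
```

In this sketch an open window only produces the red status while the radiator is active, so a window opened with the heating off leaves the light off.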

User trials were undertaken with two participant households drawn from the cohort of the initial study, with the prototypes installed in December 2011 and uninstalled in April 2012. Coded households CA02 and CA05 were selected as they both exhibited frequent use of windows for the control and circulation of fresh air and controlled the heating on an ad hoc basis, often leading to energy conflicts with their window actions or to a comfort conflict with other tenants. Table 2 summarises the participants’ dimensions of variability:

Table 2. Participant information.

In order to be able to make any claims as to an intervention’s efficacy in shaping and changing behaviour, one must be able to demonstrate that the change is causal and not an erroneous correlation. Therefore, data on pre-existing behaviour within the context of use (also known as the baseline) must be recorded. With a baseline established, any changes to behaviour as a consequence of the intervention can be measured and evaluated in relation to external influences and post-intervention behaviour; this is vital if the success of an intervention is to be demonstrated. Data may be captured through various research techniques, including qualitative methods, such as observation (Tang & Bhamra, 2011), and/or through quantitative measurement, such as the duration that a refrigerator door is left open, or the number of times a specific action is repeated (Elias et al., 2008a; Elias et al., 2008b; Wever et al., 2010). Here, a pre-intervention qualitative baseline was established using a semi-structured contextual interview, focused on the participants’ knowledge and normative structures, as well as the context in which they operate, followed by the installation of the intervention prototypes. The intention was to record quantitative data (technical monitoring data on both energy consumption and the domestic environment) to compare and correlate against the qualitative interview data recorded. However, due to monitoring issues, this was not possible within the duration of the project. The prototypes were installed into the living room of CA02 and into the kitchen of CA05 (self-designated by the participants as their most comfortable space).
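To make concrete the kind of quantitative baseline comparison described above, the following Python sketch computes the time a window spends open while the heating is active and the relative change between a baseline and a post-intervention period. It is illustrative only: no such monitoring data were available in this study, the interval logs are invented, and the functions `overlap_minutes` and `relative_change` are hypothetical names rather than part of the project's method.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # (start_minute, end_minute) within one day


def overlap_minutes(window_open: List[Interval], heating_on: List[Interval]) -> int:
    """Total minutes during which a window was open while the heating was active.

    Assumes the intervals within each list do not overlap one another.
    """
    total = 0
    for w_start, w_end in window_open:
        for h_start, h_end in heating_on:
            total += max(0, min(w_end, h_end) - max(w_start, h_start))
    return total


def relative_change(baseline: float, post: float) -> float:
    """Change from baseline to post-intervention as a fraction of the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero to compute a relative change")
    return (post - baseline) / baseline


# Invented logs for a single day, in minutes since midnight.
heating = [(400, 480), (790, 900)]
baseline_overlap = overlap_minutes([(420, 450), (780, 800)], heating)
post_overlap = overlap_minutes([(420, 440), (780, 800)], heating)
change = relative_change(baseline_overlap, post_overlap)
```

A measure of this kind would describe only the target action; it would still need to be read alongside the qualitative interview data to say anything about intention, context, or comfort.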

The duration of the user trial used in this study was four months. The appropriateness of this timeframe is borne out by Lally et al. (2009), who found in their study on habit development that automaticity, a key component of habitual behaviour, plateaued on average in sixty-six days (the spread was in the order of eighteen to two hundred and fifty-four days), although it is noted that a pilot study may have helped to guide the time frame in relation to understanding when the “most change is taking place” (Laurenceau, Hayes, & Feldman, 2007). The shape of change, the trajectory in which behaviour change occurs between the baseline and the post-intervention study, would have helped to understand behaviour variability over the course of the study, including any point of rebound; however, due to the lack of technical monitoring data this was not possible. Although repeated assessment points with interviews or user diaries were considered, these would have become interventions themselves, thus affecting the results of the study.

Following removal of the intervention, both CA02 and CA05 participated in a final semi-structured contextual interview, in order to provide a qualitative comparison to the pre-installation baseline. The post-intervention questions were split into understanding if there had been any change in the participant’s experience of comfort, use of energy, and finally questions relating to the functions of the prototype itself. Analysis of the user trial data took an inductive approach, with semantic codes in relation to the project’s overarching research objective, that worked towards the defining of thematic groups. Themes were then further analysed and interpreted in relation to the research objectives of this case study, generating a rich and thick description of a large amount of data in a format that makes it easier to compare and contrast data across sets (Braun & Clarke, 2006). In the next sections of the paper, the findings of the user trials are put into context against the three themes presented.

Did the User’s Behaviour Change?

The intention of the intervention was to change the behaviour of opening windows with the heating system active through feedback information. In response to the feedback information, CA05 identified the heating system cycle, and that the variations in lights showed this change in radiator surface temperature. This led to CA05 exploring and increasing her own understanding of how the heating system worked across the household and the consequences of any changes that she made to the settings of this system. Through the changing of the lights on the radiator, the participant was encouraged to reassess their thermal comfort, to investigate the settings of their heating system, and to act accordingly, as self-reported, in terms of her comfort levels. CA02, in response to this information, closed her blinds more often as she believed that this cut down on the number of window draughts thereby allowing the radiator to get hot quicker. However, when reflecting upon the intervention, the participants believed that certain actions are unchangeable, regardless of any information that the feedback intervention may provide. For CA02, heating was akin to a basic human right.

CA02 …if you’re hungry you eat; if you’re cold you put the heating on…I don’t think anything would change you.

It was also apparent that the majority of intentions and habits had stayed the same. From the post-intervention interview it was clearly established that the built form and heating technologies were still the same, with the same tenants occupying the same rooms, performing similar daily tasks, and following window opening and heating activation routines. The participants’ value weighting of resource consumption and comfort had fundamentally not changed, such as in the desire for fresh air.

CA02 As soon as I get up I’d open the window to allow a bit of air in…If it’s nice for a few hours; but if it’s not very nice just for a half hour or something just to let some fresh air in.

INTERVIEWER So, if the heating was on…how long would the windows be open for?

CA02 If it’s cold only about 20 minutes perhaps…

What had changed, however, was the knowledge and awareness that the participants had concerning how the heating system worked and when it was active. Participants may not have drastically changed the activation of their heating systems, but were now more aware of when to turn it off or alter it, no longer always waiting for thermal discomfort. Furthermore, conflicting energy use due to multiple occupancy could also be assessed and corrective actions taken, such as turning down a radiator TRV (thermostatic radiator valve) in a single room rather than the house thermostat, whereas previously the covert actions of other family members in adjusting the heating system would not be noticed until thermal discomfort was felt.

CA02 …if it was a day like today now and [my daughter] wanted that heating on, and I certainly don’t see no reason for it to be on...I wouldn’t have it on myself... [but] I knew she’d been down then and she’d put the heating on [via the thermostat]…if I didn’t want it on I’d turn it off on the radiator.

It is unlikely from the user trials that the participants had a lack of awareness or difficulty in controlling the intended behaviour of closing the window with the heating on. However, CA02 was developing a (self-reported) cognitive connection between the ambient feedback and the radiator temperature, no longer touching the radiator, which may have become habitual, although it is difficult to verify from an interview. The provision of information had not altered the motivation or intentions of the participants to act, driven by a short-term immediate goal (fresh air) that is perceived to be of greater benefit than the long-term goal of domestic warmth and lower fuel bills. Although the action and intentions of turning on the heating system had not significantly changed, the feedback mechanism, as self-reported by CA02, had superseded the habit of waiting for extreme discomfort before acting.

This research has illustrated that attempting to change the intention of an individual with feedback alone does not necessarily correlate with a substantial change in overall behaviour. Illustrating the temperature of the radiator and waste in an attempt to alter the individual’s perception and evaluation of outcomes had only a limited effect in this study. Behavioural action, it would appear, remains largely unaffected unless the behaviour change mechanism illustrates a dramatic enough change to motivate conscious and on-going consideration and reassessment. Even in the depths of winter when thermal discomfort and energy bills were at their highest, windows were still opened daily by the user trial participants with little conscious consideration for its thermal and cost impact. This appears in line with Triandis’ Theory of Interpersonal Behaviour, which, as Darnton (2008) discussed, prioritises habit over intention and context. Framing the problem and informing the individual that their window was open with the heating on would not change action, logically, if there was no motivation or change in intent to do so. An additional explanation for the return to existing behaviour may be that the predictability and consistency of the ambient feedback features had become less effective over time as receptiveness to new information fades, an issue as noted in the WaterBot trials by Arroyo, Bonanni, and Selker (2005) and as described by Van Dam, Bakker, and Van Hal (2010) as feedback becomes a background technology.

Did the Intervention Function?

Feedback helped the users to understand how their heating system actually worked in terms of thermal output. Through the provision of rapid, accurate, and frequent information, the participants could instantaneously see any effect that their actions would have on the heating system, either intentional or unintentional, such as the opening of a window:

CA02 …you could see the colour changes straightaway… you can think well, why put the heating on if I’m going to open the windows, because it’s just flying out of the window like, isn’t it?

This encouraged a period of investigation and optimisation, particularly during the initial period of installation, although towards the end of the four month installation period the participant’s receptiveness to the information was self-reported as decreasing. This was possibly attributable to the participant’s actions becoming optimised as far as they believed possible, therefore no longer requiring the information.

CA05 The radiator was obviously knocking itself off and I didn’t realise, you know; so I was wondering why it was. So, it make me then move about to see…so, I’d have a fiddle…But then it just blends in like all the other stuff that’s around.

The findings illustrate limited success with the selection of metrics, the use of ambience and the selection of lights and sound as a presentation medium. The off, white, and orange light statuses were correctly understood by the participants; usually described as being cold, heating up, or warm and hot respectively. The red light status, however, caused some challenges, with participants assuming it to represent an even hotter surface temperature than white, rather than wasteful behaviour as intended. In addition, both user trial participants found that the clicking noise that accompanied the change in status was the first thing that they noticed, drawing their attention to the light. CA02, in particular, initially responded to the information by touching the radiator’s surface, as a form of experiential learning. Towards the end of the installation period, the participant no longer felt the need to touch the radiator, as the cognitive connection between the ambient feedback and the radiator surface temperature had become established.

CA02 …it would click when the radiator was getting warm…if you’re just watching telly then the click would be the first thing you notice…in the beginning I used to [touch the radiator]; you just get used to it then. Oh, that’s getting hotter; or that’s not so hot now…

The location of the feedback device also had a noticeable effect on the way in which the information was acted upon by the participant. For CA02, this was the living room; for CA05, the kitchen. Whilst for CA02 this position allowed the participant to detect any change from a regularly occupied comfortable position, for CA05 this was not always the best position for effective engagement, as the room was not always in use, rendering the feedback useless.

The frequency, duration, and accuracy of the information allowed the user trial participants to see relatively instantaneously the effects of their action, with the impact immediately displayed. This, in combination with the location of the device on the radiator in close proximity to the window, allowed for accurate real-time monitoring of the status of their heating system and facilitated an initial period of exploration and optimisation; this is all in line with the literature. A breadth of authors including Fischer (2008), Darby (2006), and Abrahamse et al. (2005) have all stated that quick feedback after an action reinforces the bridge between action and effect. In addition, Wood and Newborough (2007), Hargreaves (2010), and Fischer (2008) have all suggested that duration and accuracy of the information contribute to maintaining the interest of the individual, also making the information meaningful and helping to strengthen the cognitive connection between action and effect.

Was the Change in User’s Behaviour Sustainable?

The moments of thermal discomfort had decreased because of the intervention. As already noted, the intervention prompted participants to reassess both their thermal environment as well as the mechanisms that control it, moving away from their reliance on the physical sensation of comfort as a feedback mechanism. Prior to intervention, physical discomfort feedback included: feeling too hot with the central heating system left on for extended periods eventually driving a desire to turn it down; the touching of radiators to determine if the heating system was active after others had covertly altered the thermostat; and windows for fresh air left open too long or draughts eventually creating a discomforting cooling effect, finally driving window closure. Such mechanisms, however, are not ideal as they rely on thermal discomfort to indicate a change of state or excessive consumption.

In one unintended event with the intervention, the heating system had turned itself off as the prepaid sum of gas had all been consumed. On the grandson recognising that this was an unexpected event, he informed the adults of the household who then responded accordingly. Without quantitative data it is not possible to determine the exact impact, however, it could be expected that this information increased their consumption of energy for this specific event, although it did also help the household to maintain their desired comfort level and to re-evaluate their consumption.

CA02 …our [grandson] would get up and say: the radiators have gone off. Well, we’d sit here and we didn’t know the gas had gone; we’d run out of gas. So, [grandson] knew by that [the intervention]; the gas has gone, he said, because that’s off…Because we didn’t really know it had gone off like.

Feedback is neither inherently ethical nor unethical, as the moral responsibility resides with both the designer that creates the device and the user that has freedom of choice and action. The motivation behind this feedback intervention can be disentangled into two key drivers, legislation (to reach the goals of the Climate Change Act) and education (to complete the goals of the CCC project). The intention of the feedback intervention was to reduce domestic energy consumption whilst maintaining the inhabitants’ defined levels of comfort. However, although these motivations and intentions may seem worthy, the end user has a clear role to play in this ethical deliberation process. Involving the user helps to ensure that their decision-making concerns are exercised and accounted for; that the process is democratic. An intentional ethically responsible effect of the device was that it eventually removed the need for a user trial participant to touch the radiator in order to determine the temperature of the radiator. In general, the user trial participants enjoyed using the feedback prototype, finding the intervention to be good, as it made them more aware of their heating system.

Discussion

If there is one particular area under-represented thus far in existing DfSB case studies, it is what to consider when evaluating a design intervention that seeks to change behaviour towards sustainable ends. This paper starts to fill these gaps in knowledge, through three fundamental themes, mapped to the composite parts of Design for Sustainable Behaviour: behaviour change, intervention functionality, and sustainable consequence. Here we discuss the implications of the research and its limitations.

To evaluate behaviour change, one must first decide which model one is using; which of the many lenses available is most appropriate for the given context? This work has presented several lines of questioning based on a specific model that combines the TIB model of behaviour with definitions of habit as outlined above (Wilson, 2013; Wilson, Lilley, & Bhamra, 2013). However, the emerging consensus [as evidenced, for example, in the work of Hanratty (2015)] is that the inherent complexity of trying to account for all aspects of a single, detailed, and multifaceted behavioural model drastically limits application by practitioners that subscribe to a different specified behavioural model (Hanratty, 2015), in effect, hindering cross-study comparison. Combined with the difficulty of trying to answer so many complex questions in detail, it would be more appropriate, therefore, to consider the relevant issues as broader questions, anchored within the literature but applicable across a wider range of behavioural models. Taking the most significant points of the presented case study and reducing the complexity and specificity of the questions, more applicable lines of generic questioning are presented below:

- Has there been a change in user intention?

- Has there been a change in context?

- Has there been a change in user cognitive process?

- Has there been a change in user action?

Considering these newly presented questions, changes in behaviour are not specific to a DfSB strategy, or indeed the application context. Although behaviour itself differs depending on the user and context, ultimately an easier to understand (by the designer), more uniform research and questioning strategy can now be considered. These behavioural sub-questions can be asked of any behaviour change strategy, asked in any context, as all behavioural indicators are present to an extent within all action, habitual or not.

The functionality questions presented relate specifically to the design of the intervention. Questions such as how does the accuracy of the feedback information help the user to associate with their actions, are clearly weighted towards feedback alone and are not applicable to the other DfSB intervention strategies. The overarching question is still valid; however, if these questions were to be applied to a different strategy then the sub-questions would need to be more appropriate to the mechanism employed. In this case, genericity beyond feedback would be impossible to achieve, although it is acknowledged that this may be the start of a database of such questions that may be developed through the cross-study comparison of further applied DfSB strategies (unfortunately outside of the scope of this research). Feedback seeks to change behaviour through the provision of information and therefore the sub-questions presented are related to this; again, a broader version is presented below that focuses on the key attributes of feedback as understood from the research case study: user awareness, intentions, and capabilities:

- Has the frequency, duration, location, and accuracy of the feedback increased the user’s awareness of the consequences of their actions?

- Are the selected metrics, medium, and mode relevant to the intentions, and within the capabilities of the user?

The final theme pertains to the sustainability impact of the behavioural intervention, dependent upon the specific context in which it is applied. Again, the theme is still valid; however, the sub-questions would need to be honed towards the sustainable attribute that one wishes to change. Whilst sustainability is commonly defined in terms of economic, environmental, and social pillars (Bhamra & Lofthouse, 2007), each of these pillars is contextual. For example, this project is concerned with reducing the amount of CO2 (environment) generated from domestic energy consumption, whilst ensuring that comfort (social) is maintained or increased, and that financial burden (economy) is maintained or reduced. Questions that evaluate the ethical impact of changing the user’s behaviour and the ethics of the process itself are not tied to any strategy or context, and are applicable to all design interventions. However, it should be noted that the list of questions asked does not seek to be moralistic; rather, the questions are a proposition of considerations by the designer. They are not necessarily designed to be solely reflective, but as a platform from which to integrate other relevant perspectives. Rather than stating that “the motivations behind the creation of a persuasive technology should never be such that they would be deemed unethical if they led to a more traditional persuasion” (Berdichevsky & Neuenschwander, 1999), it would perhaps be more logical to ask “was the designer’s original motivation for designing a behaviour intervention ethical?”. This allows for a wider discussion with the users and further stakeholders without relying on an implicit understanding of a universal moral framework. Decisions can be made in reference to the moral frameworks of relevance.

In addition, there should be a further consideration when evaluating a behaviour changing intervention, concerning multiple occupancy and users. Within this research it was found that whilst feedback is useful for an individual to assess the impact another occupant had on the heating system (such as opening a window), any second occupant either did not have the opportunity to assess their own impact (due to location) or was provided with information that was not relevant to their intentions. This raises the question: can feedback information be suitable for multiple users with conflicting intentions and competing actions rather than just the prescribed individual user? Perhaps this is also a limitation of using behaviour theory and traditional user-centred research techniques, which often focus on the individual rather than the social nexus and context (such as the user’s family and friends), and is certainly a consideration that is given much more thought and significance in practice theory (Pettersen, 2013). This should be investigated further. A certain methodological limitation of this study was the lack of quantitative data.

Generally speaking, user trials are well suited to formative evaluation, to help with the cyclic process of understanding and iterating the design, as well as summative, to draw conclusions as to the change in behaviour and sustainability impact over time. The application of energy consumption and environmental monitoring would have, it is predicted, provided both physical and quantitative evidence for any measurable change in comfort (through environmental proxies) as well as determine if the intervention had actually reduced or increased energy consumption, filling in the evaluative gap left from user trials and self-reported accounts of behaviour. Such data would have also helped the authors to better understand the shape of change (Laurenceau et al., 2007).

Conclusions

Design for Sustainable Behaviour is in an embryonic state, evolving from its focus on defining strategies within an axis of influence into a cohesive and applied approach to affect sustainable behaviour through design. With several concurrent researchers active in the field, focussing on a broad range of DfSB considerations such as refining the axis of influence or working out methods, guides, or tools for strategy selection, it is unsurprising that there is not one single DfSB model or categorisation of strategies to which all researchers subscribe. Equally disparate are the ways in which this knowledge has been accumulated and applied, with the design processes and methods used varying from project to project. This leaves several areas of DfSB interest that have not been adequately explored to ensure that DfSB reaches maturity. A notable gap that this paper has addressed is the lack of appropriate assessment themes for evaluating feedback behaviour changing interventions, demonstrating that the evaluation of a DfSB intervention can be subdivided into three fundamental themes, which can be further disaggregated to give additional resolution concerning:

- behaviour change—the intentions, context, cognitive process and action of the user (applicable to all DfSB strategies);

- intervention functionality (dependent on the DfSB strategy);

- and the sustainability impact of the intervention, which in this study was considered in terms of energy, comfort (dependent on the intervention context) and ethics (applicable to all DfSB strategies).

These questions provide multiple entry points for designers and design researchers to evaluate the success (or failure) of an intervention which could be iteratively fed back into the design process, avoiding the limited view of only categorising the measure of a behaviour changing intervention’s success as an x% reduction in y consumed (this precludes any debate over the actual success of the mechanism itself for behaviour change and inhibits progress towards better understanding and feedback design; a change in behaviour does not necessarily correlate to a change in energy consumption, especially when one considers rebound effects). In this respect, a qualitative approach, possibly combined with quantitative measures, gives a more three-dimensional view of behavioural change. Furthermore, by formalising the evaluation themes for a DfSB intervention, cross-study comparison is facilitated, although further work is necessary in order to develop the three themes to be more appropriate to different intervention strategies.

The research within this paper has started to address a considerable gap in knowledge currently present in the field of DfSB through the practical investigation of a feedback intervention designed to change user behaviour; formulating, implementing, and discussing three entry points towards the evaluation of a DfSB intervention. This research forms the first exploratory step towards a knowledge platform for the formalisation of transferable DfSB evaluation questions to help designers better understand and design for behaviour change.

Acknowledgments

The authors thank the Engineering and Physical Sciences Research Council (EPSRC) and E.ON UK for providing the financial support for this study as part of the Carbon, Control & Comfort project (EP/G000395/1).

References

- Abrahamse, W., & Steg, L. (2011). Factors related to household energy use and intention to reduce it: The role of psychological and socio-demographic variables. Human Ecology Review, 18(1), 30-40.

- Abrahamse, W., Steg, L., Vlek, C., & Rothengatter, T. (2005). A review of intervention studies aimed at household energy conservation. Journal of Environmental Psychology, 25(3), 273-291.

- Ajzen, I. (1985). Action control: From cognition to behavior. Berlin, Germany: Springer-Verlag.

- Ajzen, I. (1991). The theory of planned behaviour. Organizational Behavior and Human Decision Processes, 50(2), 179-211.

- Ajzen, I. (2002). Residual effects of past on later behavior: Habituation and reasoned action perspectives. Personality and Social Psychology Review, 6(2), 107-122.

- Albrechtslund, A. (2007). Ethics and technology design. Ethics and Information Technology, 9(1), 63-72.

- Anderson, W., & White, V. (2009). Exploring consumer preferences for home energy display functionality: Report to the energy saving trust. Retrieved April 23, 2016, from https://www.cse.org.uk/downloads/reports-and-publications/behaviour-change/consumer_preferences_for_home_energy_display.pdf

- Arroyo, E., Bonanni, L., & Selker, T. (2005). Waterbot: Exploring feedback and persuasive techniques at the sink. Retrieved April 23, 2016, from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.94.7877&rep=rep1&type=pdf

- Bargh, J. A. (1994). The four horsemen of automaticity: Awareness, efficiency, intention, and control in social cognition. In J. R. S. Wyer & T. K. Srull (Eds.), Handbook of social cognition (pp. 1-40). Hillsdale, NJ: Erlbaum.

- Bargh, J. A. (1999). The unbearable automaticity of being. American Psychologist, 54(7), 462-479.

- Berdichevsky, D., & Neuenschwander, E. (1999). Toward an ethics of persuasive technology. Communications of the ACM, 42(5), 51-58.

- Bhamra, T. A., & Lofthouse, V. A. (2007). Design for sustainability a practical approach. Hampshire, UK: Gower Publishing.

- Boks, C. (2012). Design for sustainable behaviour research challenges. In M. Matsumoto, Y. Umeda, K. Masui, & S. Fukushige (Eds.), Proceedings of the 7th International Symposium on Environmentally Conscious Design and Inverse Manufacturing (pp. 328-333). Berlin, Germany: Springer.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77-101.

- Burgess, J., & Nye, M. (2008). Re-materialising energy use through transparent monitoring systems. Energy Policy, 36(12), 4454-4459.

- Chatterton, T. (2011). An introduction to thinking about ‘energy behaviour’: A multi model approach. Retrieved April 23, 2016, from https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/48256/3887-intro-thinking-energy-behaviours.pdf

- Cole, R. J., Robinson, J., Brown, Z., & O’Shea, M. (2008). Re-contextualising the notion of comfort. Building Research & Information, 36(4), 323-336.

- Cross, N. (2010). Engineering design methods (4th ed.). Hoboken, NJ: Wiley.

- Darby, S. (2006). The effectiveness of feedback on energy consumption. A review for DEFRA of the literature on metering, billing and direct displays. Retrieved April 23, 2016, from http://www.eci.ox.ac.uk/research/energy/downloads/smart-metering-report.pdf

- Darby, S. (2008). Why, what, when, how, where and who? Developing UK policy on metering, billing and energy display devices. Retrieved April 23, 2016, from http://aceee.org/files/proceedings/2008/data/papers/7_137.pdf

- Darby, S. (2010). Literature review for the energy demand research project. London, UK: Office of Gas and Electricity Markets.

- Darnton, A. (2008). GSR behaviour change knowledge review: An overview of behaviour change models and their uses. London, UK: Government Social Research Unit.

- Department of Energy and Climate Change. (2008). 2008 greenhouse gas emissions, final figures by end-user. London, UK: DECC.

- Evans, M. A., & Pei, E. (2010). iD cards. Leicestershire, UK: Loughborough University.

- Fischer, C. (2008). Feedback on household electricity consumption: A tool for saving energy? Energy Efficiency, 1(1), 79-104.

- Fitzpatrick, G., & Smith, G. (2009). Technology-enabled feedback on domestic energy consumption: Articulating a set of design concerns. IEEE Pervasive Computing, 8(1), 37-44.

- Fogg, B. J. (2003). Persuasive technology: Using computers to change what we think and do (interactive technologies). San Francisco, CA: Morgan Kaufmann.

- Gowri, A. (2004). When responsibility can’t do it. Journal of Business Ethics, 54(1), 33-50.

- Ham, J., Midden, C. J. H., & Beute, F. (2009). Can ambient persuasive technology persuade unconsciously? Using subliminal feedback to influence energy consumption ratings of household appliances. In Proceedings of the 4th International Conference on Persuasive Technology (Article No. 29). New York, NY: ACM.

- Hanratty, M. (2015). Design for sustainable behaviour: A conceptual model and intervention selection model for changing behaviour through design (Doctoral dissertation). Loughborough University, Leicestershire, UK.

- Hargreaves, T. (2010). The visible energy trial: Insights from qualitative interviews. Retrieved April 23, 2016, from http://www.tyndall.ac.uk/sites/default/files/twp141.pdf

- Jackson, T. (2005). Motivating sustainable consumption: A review of evidence on consumer behaviour and behavioural change, a report to the sustainable development research network. Retrieved April 23, 2016, from http://www.sustainablelifestyles.ac.uk/sites/default/files/motivating_sc_final.pdf

- Jelsma, J., & Knot, M. (2002). Designing environmentally efficient services: A ‘script’ approach. The Journal of Sustainable Product Design, 2(3), 119-130.

- Lally, P., Van Jaarsveld, C. H. M., Potts, H. W. W., & Wardle, J. (2009). How are habits formed: Modelling habit formation in the real world. European Journal of Social Psychology, 40(6), 998-1009.

- Laurenceau, J.-P., Hayes, A. M., & Feldman, G. C. (2007). Some methodological and statistical issues in the study of change processes in psychotherapy. Clinical Psychology Review, 27(6), 682-695.

- Lilley, D. (2009). Design for sustainable behaviour: Strategies and perceptions. Design Studies, 30(6), 704-720.

- Lilley, D., Bhamra, T. A., & Lofthouse, V. A. (2006). Towards sustainable use: An exploration of designing for behavioural change. In L. Feijs, S. Kyffin, & B. Young (Eds.), Proceedings of the European Workshop on Design and Semantics of Form and Movement (pp. 84-97). Eindhoven, The Netherlands: Philips.

- Lilley, D., & Lofthouse, V. A. (2010). Teaching ethics for design for sustainable behaviour: A pilot study. Design and Technology Education: An International Journal, 15(2), 55-68.

- Lilley, D., & Wilson, G. T. (2013). Integrating ethics into design for sustainable behaviour. Journal of Design Research, 11(3), 278-299.

- Lockton, D., & Harrison, D. (2012). Models of the user: Designers’ perspectives on influencing sustainable behaviour. Journal of Design Research, 10(1/2), 7-27.

- Löfström, E., & Palm, J. (2008). Visualising household energy use in the interest of developing sustainable energy systems. Housing Studies, 23(6), 935-940.

- Maan, S., Merkus, B., Ham, J., & Midden, C. J. H. (2011). Making it not too obvious: The effect of ambient light feedback on space heating energy consumption. Energy Efficiency, 4(2), 175-183.

- Mintel. (2009). Domestic central heating (industrial report) - UK - May 2009. London, UK: Mintel Group.

- Norman, D. (1988). The psychology of everyday things. New York, NY: Basic Books.

- Office for National Statistics. (2010). Statistical bulletin: Life expectancy, 2007-2009. London, UK: ONS.

- Office for National Statistics. (n.d.). Labour market profile: Merthyr Tydfil. Retrieved April 23, 2016, from https://www.nomisweb.co.uk/reports/lmp/la/1946157399/report.aspx?town=merthyr%20tydfil

- Oxford English Dictionary. (n.d.). “Model, n. and adj.” Retrieved June 5, 2012, from http://www.oed.com/viewdictionaryentry/Entry/120577

- Parliament of the United Kingdom. (2008). Climate change act 2008. Chapter 27. Norwich, UK: The Stationery Office Limited.

- Pettersen, I. N. (2013). The role of design in supporting the sustainability of everyday life (Doctoral dissertation). Norwegian University of Science and Technology, Trondheim, Norway.

- Pettersen, I. N., & Boks, C. (2008). The ethics in balancing control and freedom when engineering solutions for sustainable behaviour. International Journal of Sustainable Engineering, 1(4), 287-297.

- Pink, S. (2007). Walking with video. Visual Studies, 22(3), 240-252.

- Pink, S. (2010). Visual methods. In C. Seale, G. Gobo, J. F. Gubrium, & D. Silverman (Eds.), Qualitative research practice. New York, NY: SAGE.

- Polites, G. L. (2005). Counterintentional habit as an inhibitor of technology acceptance. Retrieved April 23, 2016, from http://sais.aisnet.org/SAIS2005/Polites.pdf

- Seligman, C., Darley, J. M., & Becker, L. J. (1977). Behavioral approaches to residential energy conservation. Energy and Buildings, 1(3), 325-337.

- Selvefors, A., Pedersen, K. B., & Rahe, U. (2011). Design for sustainable behaviour - Systemising the use of behavioural intervention strategies. In A. Deserti, F. Zurlo, & F. Rizzo (Eds.), Proceedings of the Conference on Designing Pleasurable Products and Interfaces (Article No. 3). New York, NY: ACM.

- Shelter England. (n.d.). What is social housing? Retrieved April 23, 2016, from http://england.shelter.org.uk/campaigns_/why_we_campaign/Improving_social_housing/what_is_social_housing

- Steg, L., & Vlek, C. (2009). Encouraging pro-environmental behaviour: An integrative review and research agenda. Journal of Environmental Psychology, 29(3), 309-317.

- Stern, P. C. (1999). Information, incentives, and proenvironmental consumer behavior. Journal of Consumer Policy, 22(4), 461-478.

- Tang, T., & Bhamra, T. A. (2009). Understanding consumer behaviour to reduce environmental impacts through sustainable product design. Retrieved April 23, 2016, from https://core.ac.uk/download/files/102/100104.pdf

- Tang, T., & Bhamra, T. A. (2011, October). Applying a design behaviour intervention model to design for sustainable behaviour. Paper presented at the International Conference on Sustainable Design in a Globalization Context, Beijing, China.

- United Nations. (1992). United Nations framework convention on climate change. Retrieved April 23, 2016, from https://unfccc.int/resource/docs/convkp/conveng.pdf

- United Nations. (1998). Kyoto Protocol. Retrieved April 23, 2016, from http://unfccc.int/resource/docs/convkp/kpeng.pdf

- United Nations. (2016). Paris Agreement. Retrieved April 23, 2016, from http://unfccc.int/paris_agreement/items/9485.php

- Van Dam, S. S., Bakker, C., & Van Hal, J. D. M. (2010). Home energy monitors: Impact over the medium-term. Building Research & Information, 38(5), 458-469.

- Van Dam, S. S., Bakker, C., & Van Hal, J. D. M. (2012). Insights into the design, use and implementation of home energy management systems. Journal of Design Research, 10(1/2), 86-101.

- Verbeek, P. P. (2006). Materializing morality: Design ethics and technological mediation. Science, Technology, & Human Values, 31(3), 361-380.

- Verplanken, B. (2006). Beyond frequency: Habit as mental construct. British Journal of Social Psychology, 45(3), 639-656.

- Verplanken, B., Myrbakk, V., & Rudi, E. (2005). The measurement of habit. In T. Betsch & S. Haberstroh (Eds.), The routines of decision making. Arhus, Denmark: Psychology Press.

- Wilson, G. T. (2013). Design for sustainable behaviour: Feedback interventions to reduce domestic energy consumption (Doctoral dissertation). Loughborough University, Leicestershire, UK.

- Wilson, G. T., Bhamra, T. A., & Lilley, D. (2015). The considerations and limitations of feedback as a strategy for behaviour change. International Journal of Sustainable Engineering, 8(3), 186-195.

- Wilson, G. T., Lilley, D., & Bhamra, T. A. (2013). Design feedback interventions for household energy consumption reduction. Retrieved April 23, 2016, from https://dspace.lboro.ac.uk/dspace-jspui/bitstream/2134/12522/3/ERSCP-EMSU%202013%201.0%20GTW.pdf

- Wood, G., & Newborough, M. (2007). Energy-use information transfer for intelligent homes: Enabling energy conservation with central and local displays. Energy and Buildings, 39(4), 495-503.

- Zachrisson, J., & Boks, C. (2012). Exploring behavioural psychology to support design for sustainable behaviour. Journal of Design Research, 10(1/2), 50-66.

- Zachrisson, J., Storrø, G., & Boks, C. (2012). Using a guide to select design strategies for behaviour change; Theory vs. practice. In M. Matsumoto, Y. Umeda, K. Masui, & S. Fukushige (Eds.), Proceedings of the 7th International Symposium on Environmentally Conscious Design and Inverse Manufacturing (pp. 362-376). Berlin, Germany: Springer.