Perceiving While Being Perceived

Department of Communication Science, University of Siena, Siena, Italy

Under what conditions can we engage in a meaningful, expressive interaction with an electronic device? How can we distinguish between merely functional objects and aesthetic, poetic, interactive objects that can be potential carriers for meaningful experience? This paper provides some answers to these questions by considering an aspect of aesthetic interaction that is still largely unexplored. Taking a phenomenological approach to action and perception, the paper explores the possibility of achieving by design a shared perception with interactive devices in order to enrich the experience of use as an emergent and dynamic outcome of the interaction. In exploring shared perception with interactive devices, the concept of “perceptual crossing” is taken as a main source of inspiration for design. As defined by Auvray, Lenay, and Stewart (2008), perceptual crossing is the recognition of an object of interaction which involves the perception of how the behaviour of the object and its perception relate to our own. In this sense, a shared perceptual activity influences the behaviour of interacting entities in a very peculiar way: we perceive while being perceived. Here, this argument is explored from the design viewpoint, and prototypes that illustrate the dynamics of perceptual crossing in human-robot interaction are presented.

Keywords – Aesthetic Interaction, Shared Perception, Perceptual Crossing, Human-Robot Interaction.

Relevance to Design Practice – This study considers perceptual crossing as a central theme for aesthetic interaction. The prototypes presented demonstrate the possible use of this concept as inspiration for design.

Citation: Marti, P. (2010). Perceiving while being perceived. International Journal of Design, 4(2), 27-38.

Received April 18, 2010; Accepted July 20, 2010; Published August 31, 2010.

Copyright: © 2010 Marti. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

Corresponding Author: marti@unisi.it

Introduction

Electronic devices have undergone an alarming trend related to their aesthetics; the search for appearance and perfection often turns into a loss of sensuous and emotional content. Instead of inviting sensory and perceptual intimacy and exploration, they frequently signal a rejection of sensuous curiosity and pleasure, constraining the user to execute actions without the possibility of experiencing their inherent effect. Modern touch-based interfaces make it possible to directly manipulate information, for example by sliding icons over screens. However, this kind of interaction only slightly involves our senses and does not permit us to perceive the inherent properties of the moved objects. The interface is necessary to interact with technology, but through the interface we touch surfaces without quality, experience spaces without gravity, and exercise actions without forces and their inherent effect. The Aesthetics of Interaction is an emerging field of research that tackles these topics while considering beauty of use and expressivity and meaning in interaction as paramount values for design.

Under what conditions can we engage in a meaningful, expressive interaction with an electronic device? How can we distinguish between merely functional objects and aesthetic, poetic interactive objects that can be potential carriers for meaningful experience? Here, “aesthetic” does not refer to a property that is inherent in the object itself, but rather to a property of the (inter)action. According to this view, aesthetics is not only related to form as perceived visually or to the functionality of the system (Fogarty, Forlizzi, & Hudson, 2001); it is a potential that is released in dialogue as we perceive and act in the world. Consequently, the Aesthetics of Interaction should primarily study action and perception, as well as the intentional affordances that move us to act and interact in the world.

Aesthetic Interaction

The field of Aesthetics of Interaction has reached a certain maturity, partly consolidating the idea that in response to a change in the use of computers and interactive technologies, traditional Human-Computer Interaction concepts of usability, efficiency, and productivity have to be enriched with other values such as curiosity, intimacy, emotion and affection. This is done in part through the development of new models and theories that explore many different directions and methods of technological implementation. Although there seems to be near consensus on the importance of designing interactive systems beyond rational and functional requirements, the ways in which this can be achieved are not so straightforward, and the notion of Aesthetics of Interaction is still ambiguous and often contentious. In fact, different views have emerged.

One view understands the notion of aesthetics as being a result of the appearance properties of form as perceived visually (Fogarty et al., 2001), perhaps through the use of exquisite materials and form. Here, aesthetics is seen as an added bonus pertaining to the object apart from the context of use. According to this view, the judgment of beauty is a higher-level evaluative construct which is independent of the actual product-usage experience. However, satisfaction and pleasure are emotional consequences of goal-directed product usage. Other views consider aesthetics with a socio-cultural connotation, as being a result of the human appropriation of the object, a socio-historical appreciation of different components (materials, forms) and properties that do not inherently pertain to the object itself (Petersen, 2004). Other views of aesthetics introduce the concepts of Flow (Csikszentmihalyi, 1990), Rich and Meaningful Interaction (Wensveen, Overbeeke, & Djajadiningrat, 2002), and Resonance (Hummels, Ross, & Overbeeke, 2003), all of which are challenging and dense concepts for study and experiment.

This paper concentrates on a different and complementary perspective of Aesthetics of Interaction. Shared perception is considered as having paramount importance for the aesthetic experience, along with other conditions which include embodiment, bodily skills, cultural context, social practices and contextual aspects. It is a potential that is released in dialogue as we perceive and act in the world while being perceived by the world itself. It is a prospect of reciprocal influences and dynamics of mutual perception. Taking into account shared perception, the aesthetic interaction develops as a shared, reciprocal rapport between interacting entities. This mutuality of influences is a key property of the interaction process. It is dynamic and implies the exploration of the other at the level of our perceptual-motor and emotional skills, at the level of our cognitive capabilities, at the level of our value-related personal and social system.

Examples of Shared Perception

As an illustrative example of shared perception, consider the automatic glass doors of modern train coaches. We know from experience with similar systems that the automatic doors should open when we approach them. But do they show their intention to open as we approach? It is not rare to see passengers making strange movements in front of the door to signal their intention to cross. Likewise, one can often see the automatic door open for no apparent reason. Each of the interacting entities (the person and the door) can potentially perceive the other one, but neither of them clearly shows or shares its own intentionality.

Sharing intentionality roughly means the process by which agents interact in a coordinated and collaborative way either in order to pursue some shared goals or share a common experience. In order to reach this shared intentionality, perceptual crossing is required. In this view, intentionality is not a result of an internal judgment but is a social product created dynamically as an emergent outcome of the interaction. Social interaction is a product of a perceptual crossing in that the recognition of an object to interact with does not only consist of the simple recognition of a particular form, behaviour, or pattern of movements, but involves the perception of how the behaviour of the object and its perception relate to our own (Auvray, Lenay, & Stewart, 2008). In this sense, intentionality is not a matter of unilateral perception where each intentional subject acts in order to achieve goals by the most efficient means available. On the contrary, it is a shared perceptual activity that influences the behaviour of all the interacting entities.

In order to realise mechanisms of shared intentionality, we should design systems which are sensitive to perceptual crossing. This makes interaction expressive, embodied and responsive to individual actions without the use of an interface or without a previous representation or plan of the interaction itself. From this perspective, and in order to illustrate better what perceptual crossing is, the example of the train door can be taken further. For example, a glass door could show its perception of a person from a distance, perhaps by becoming less opaque. It could also start opening just a bit in the presence of a person, and then open completely very slowly or quickly depending on the quality of the movement of the person towards the door. Of course, the type of interaction here is too limited to offer a very rich experience. However, even in a simple example like this, the timing, intensity, and form of an action can have a corresponding effect to enable the interacting entities to show their shared intentionality and resonate with one another. We could confront a door, rather than functionally approach it. With this example, we can experience the act of entering, not simply seeing the visual design of the door. Further, we can look in or through its transparency as a source of experience, rather than looking at the glass door itself as a material object.

The question remains, though, of how to develop interactive systems able to show perceptual crossing with their users. An alternative view to the concept of interface and structured sequences of action should be developed in order to let people access stimulus information directly through their senses while perceiving and being perceived by the surrounding world. Restoring direct perception using all our senses will enrich people’s experience through a dialogue with artifacts of everyday use. To better understand this concept, take as an example the vending machine. The basic design of a vending machine usually comprises a cabinet that holds all internal components and an outer panel containing the electronic controls that allow customers to purchase and receive goods. The outer door usually includes signage and illustrations to show the sequence of actions required to operate the machine. A panel of control buttons lets customers make their selections. The sequence of actions is pre-determined: put in money, press a selection, and receive the food or drink. Occasionally, one may make a selection when the machine is empty, or even mistakenly enter a wrong code and receive an undesired product. This kind of machine is not open to the user’s actions and it requires an interface to be operated. The kind of interaction enabled by this design is error-prone and dramatically reduces the opportunities for action.

A completely different approach to the design of a vending machine has been taken by Guus Baggermans. For his master’s graduation project at TU/e Industrial Design, under the supervision of Kees Overbeeke, he created the Friendly Vending Machine, which communicates on a personal level with customers, thus enhancing their experience. The vending machine invites customers to explore interaction possibilities through their movements and gestures. The cans, aligned in a series of glass tubes, follow the customer’s movements and turn toward him or her. They show they can see the customer and can behave in coordination with his or her way of approaching the machine. The customer can interact without using any button or browsing menus. Once the customer has decided which drink to buy by physically pointing at the can, a coin is fed into the machine and the tube gently opens, allowing the can to be grabbed. The machine elicits an emotional response instead of a rational one. This interaction develops as a reciprocal perception in that the user can perceive the machine and be aware of being perceived by the machine itself at the same time.

Perceptual Crossing

As mentioned, the concept of perceptual crossing as defined by Auvray et al. (2008) is taken as a main source of inspiration in exploring shared perception with interactive devices. Important empirical evidence has been found in their experiments to sustain the central role of dynamic mutuality and shared intentionality in forming several aspects of an ongoing interaction. Auvray et al. (2008) carried out the following relevant experiment. Two blindfolded subjects interacted in a virtual one-dimensional space. Each subject moved a receptor field using a computer mouse, and received an all-or-nothing tactile sensation when the receptor crossed an object. Each participant could encounter three types of objects:

- The other participant’s avatar.

- A fixed object (inanimate).

- A mobile object (the ‘‘mobile lure’’, a shadow inanimate object similar to the avatar, with the same form and movement of the avatar).

The key point of the experimental setup is that the only difference between the avatar and the mobile lure is that the avatar can both perceive and be perceived, while the other objects can only be perceived. The participants’ task was to click the mouse when encountering the other participant’s avatar. In practice, the subject had to distinguish if the tactile stimuli received from the encounter were related to the other participant’s avatar or to an inanimate object. A result of the experiment is that participants clicked significantly more often on the other participant’s avatar (i.e., correctly) than on the fixed object and the mobile lure. Remarkably, subjects were able to distinguish animate objects from inanimate ones with the same appearance and movement (in the case of the mobile lure) only by perceiving very simple tactile stimuli.

A fundamental insight we can draw from this experiment in regard to designing for expressive interaction is that an important clue in interaction is its interwoven nature which has to be shared between the subjects. This is a property of the dyadic system and does not belong to the single interacting entities. Following this argument, interactive systems should show their capability to perceive while being perceived, to be sensitive to others’ movements and actions and their corresponding actualisation in timing, intensity, and form in order to enable the interacting entities to ‘resonate with’ or ‘reflect’ one another. We should also design for action coordination. The human body and cognition are specialized for mutual regulation of joint action. We should take a dual perspective of perception so that each partner in interaction can perceive while being perceived by the other and can modulate his/her behaviour accordingly. These insights will be explored in more depth through the examination of the following design case.

The Robot Companion

In the past few years, human-robot interaction has received a significant and growing interest that has led to the development of a number of so-called robot companions, a term that emphasizes a constant interaction, co-operation and intimacy between human beings and robotic machines. The robotic companions are not supposed to simply execute tasks; a continuous and natural dialogue is expected to be held between the human being and the robot companion. A high quality interaction should occur that is not merely functional (entering a command so the robot can execute it) but emotional (asking “Is the robot or the human angry?”), aesthetically pleasurable (declaring “My cute robot companion”), social (robots mediating social exchanges), and intentional (asking the questions “What can the robot do for me? What can we do together?”). In this respect social robots can represent an ideal test bed for aesthetic interaction and, in particular, for designing for perceptual crossing.

Iromec is a robotic companion that engages in social exchanges with children with different disabilities. The robot has been developed within a three year project started in November 2006, co-funded by the European Commission within the RTD activities of the Strategic Objective SO 2.6.1 “Advanced Robotics” of the 6th Framework Programme (Interactive Robotic Social Mediators as Companions, www.iromec.org).

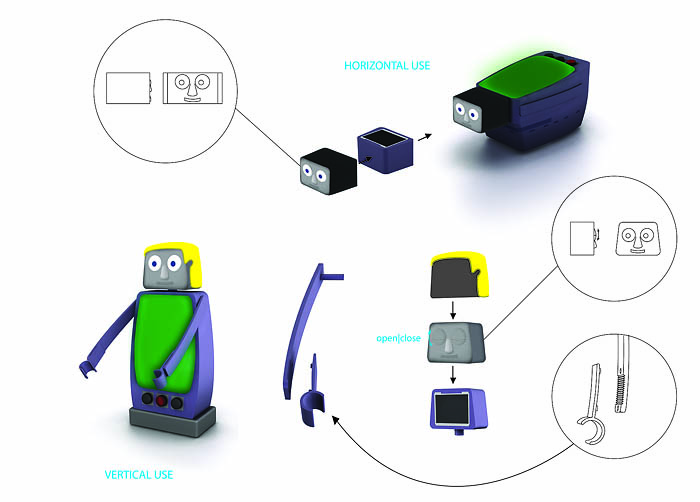

It is a modular robot composed of a mobile platform, an application module and a number of additional components that modify the appearance and behaviour of the robot (Marti & Giusti, 2010). The robot can assume two main configurations, vertical (Figure 1) and horizontal (Figures 2a and 2b). In the vertical configuration, the robot has a human-like stance by being mounted on a dedicated support that provides stability and maintains a fixed position. This configuration supports imitation scenarios that require the children to reproduce basic movements, like turning the head. The robot can also assume the horizontal configuration to support activities requiring wider mobility and dynamism.

Figure 1. The vertical configuration.

Figure 2. The horizontal configuration.

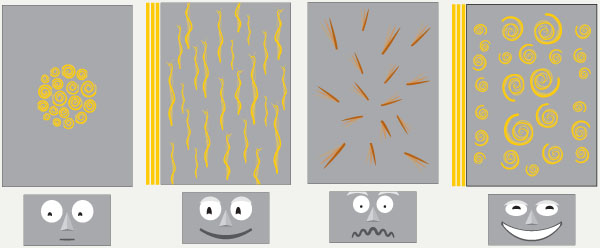

The head of the robot is composed of an 8-inch display for visualizing facial expressions, while the trunk presents a 13-inch display showing graphical elements that can play a role in expressing the robot’s behaviour. For example, the body screen can display a digital fur which moves according to the direction of the platform’s movement. When the robot stops, fur clumps appear that extend when it moves again (Figure 3).

Figure 3. Graphical elements of the robot.

The robot is able to show different facial expressions that incorporate the mouth, nose, eyes and eyebrows, as well as different levels of expressiveness and emotional states. Colors, visual cues (shadows and shades) and smooth transitions have been used to provide a life-like impression. Different masks can also be mounted on the head to hide parts of the face, modify the physical appearance of the robot, and to reduce the expressiveness (Figure 4). This last feature is specifically designed for autistic children whose competence level in processing facial expressions can vary considerably according to the severity of cognitive impairment. The combination of digital and physical components allows the robot to be experimented with in several setups in order to find the solution that better fits the needs of the children.

Figure 4. The masks.

The robot can engage in a number of play scenarios (Robins, Ferrari, & Dautenhahn, 2008), including Turn Taking, Imitation Game, Make it Move (a cause and effect game), Follow Me (coordination game), Dance with Me (imitation and composition game), Bring Me the Ball (cause and effect game), and Get in Contact (sensory stimulation game). Each scenario has a number of specific educational and therapeutic objectives. For example, the Tickle scenario (Figure 5) consists of an exploration of the robot’s body to discover where it is sensitive to being tickled. The game was developed to improve the perceptual functions (auditory, visual, tactile and visuo-spatial perception) as a basic form of communication. This is important to the learner since the tactile sense can help to provide awareness of oneself and of others, to build trust, and to give or receive support in order to develop social relationships during play. In fact, the ability to use one’s senses in an active and involved manner is linked first and foremost to orientation, attention, perception and sensory functions from which knowledge must be acquired and applied to communicate and to take part in social and educational relationships.

Figure 5. “Tickle” scenario.

The Tickle scenario is enabled by covering modules that embed smart materials. A pressure-sensitive textile covering module was developed that fixes a soft woolen cover on top of two metallic, conductive layers separated by an insulating layer. The conductive layers are made of steel wires, while the insulating layer can be made of coloured polyester or transparent PA6 monofilament depending on the type of connection to the commutation. The fabric works like a switch: whenever the child strokes a sensitive area, the robot emits an audible laugh. The tickling zones change dynamically and children have fun trying to guess where the robot is more sensitive.

Particular attention was paid to the use of sounds in designing the robot. Since most of the play scenarios aim at improving auditory perception, original sounds have been created to structure and articulate the play experience. Indeed, even if we are not normally fully aware of the significance of hearing in coordination and spatial experience, sound can provide the temporal continuum in which visual impressions are embedded and acquire meaning. The robot’s sounds have been designed to give the impression of a living entity without any specific human or animal connotation. The primary objective was to assign a tempo to the activity, to structure spatial and proximity relations, to anticipate an intention to act, to underline the effect of an action, and to externalise the robot’s perception.

A set of covering modules can be mounted on the robot’s body in order to obtain different tactile and visual effects (Figure 6). Some of these modules are interactive (Figure 10) and affect the robot’s behaviour. The covering modules embed smart textiles that provide the robot with unusual visual, tactile and behavioural feedback resulting from material transformations.

Figure 6. Covering modules.

Implementing Perceptual Crossing: Movement and Coordination

The objective of this design case is to provide expressive, aesthetic interaction with the robot companion. With this case and with perceptual crossing in general, the goal is to develop interactive objects/systems which show their capability to perceive while being perceived, and to use shared perception as a means to influence the behaviour of the interacting entities.

The human body and cognition are specialized for mutual regulation of joint action. People interindividually coordinate and reciprocally influence their movements in social interaction: they mirror each other’s movements, anticipate them, temporally synchronise or desynchronise, and so on. A specific feature of social coordination is that patterns of coordination can dynamically influence the behaviour of the interacting partners. This also happens in situations where the interaction carries on even though none of the participants wishes to continue it.

In discussing mechanisms of social understanding through direct perception, De Jaegher (2009) reports as an example the familiar situation where one encounters someone coming from the opposite direction on a narrow footpath. In attempting to walk past each other, it may happen that both pedestrians step towards the same side. This may happen a few times before they are finally able to pass each other. Here, the coordination of movements (a temporally synchronised mirroring of sideways steps) ensures (for a brief while) that the interaction process is sustained despite the fact that the persons both want to stop interacting in this way. We can exploit this natural ability of coordination by taking a dual perspective of perception where the interacting entities adjust their behaviour according to the evolving dynamics of the interaction. This can be illustrated through an analysis of the robot’s Follow Me scenario, which will show the dynamics of mutual coordination in a situation of perceptual crossing.

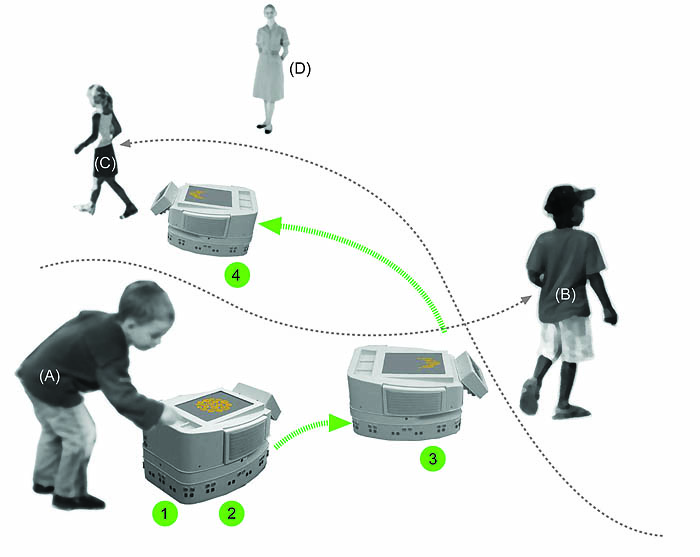

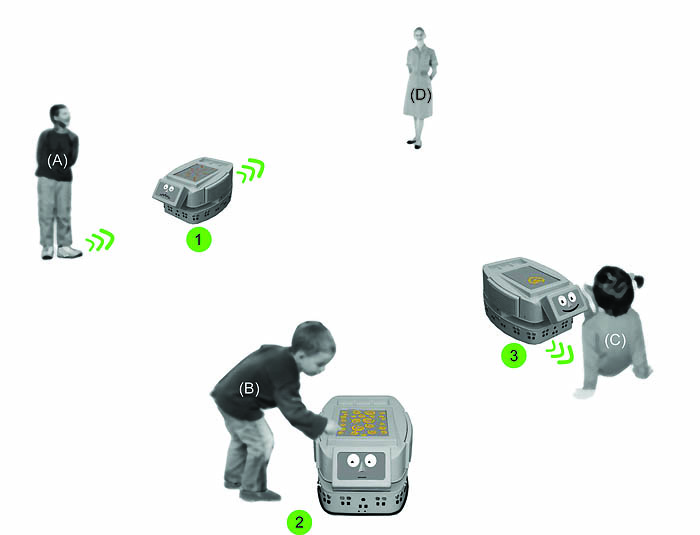

The Follow Me scenario is an exercise and simple symbolic game with primary objectives related to energy and drive functions, such as improving the motivation to act and the feeling of being in control. The scenario aims to develop the understanding of cause and effect connections and to improve attention to mobility, coordination and basic interpersonal interaction. The game consists of playing with the robot, which follows a child. Other children can compete to attract the attention of the robot in order to be followed.

The game starts when the first player activates the ‘follow-me’ mode by stroking the digital fur clumps displayed on the robot’s body (Figure 7 step 1). The robot starts to move (Figure 7 step 2), searching for a child. When the robot finds a child, it follows him or her within a predefined distance (e.g. 50 cm) (Figure 7 step 3). If the child stops, the robot stops too. When a second player (another child or the teacher) approaches the robot, and the robot is closer to the second player, it starts to follow the second player (Figure 7 step 4). In practice, the children and the robot have to coordinate their movements while expressing through movement their intention to act. The behaviour of the individual actors is influenced by their shared perception and it is dynamic.
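The following-and-switching behaviour described above can be sketched in a few lines of C++. This is only an illustration under stated assumptions, not the robot's actual control code, which the paper does not publish: the names `Point`, `planarDistance`, `selectTarget` and `followSpeed` are hypothetical, positions are assumed to be available as planar coordinates, and a simple proportional rule stands in for whatever coupling the robot really uses. The robot picks the closest player as its target and slows to a halt once it is within the follow distance, so that it stops when the child stops.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical planar position in metres.
struct Point { double x; double y; };

double planarDistance(const Point& a, const Point& b) {
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Returns the index of the player closest to the robot, or -1 if no player
// is detected. Re-evaluating this on every control cycle makes the robot
// switch to a second player as soon as that player is closer.
int selectTarget(const Point& robot, const std::vector<Point>& players) {
    int best = -1;
    double bestDistance = 0.0;
    for (std::size_t i = 0; i < players.size(); ++i) {
        const double d = planarDistance(robot, players[i]);
        if (best < 0 || d < bestDistance) {
            best = static_cast<int>(i);
            bestDistance = d;
        }
    }
    return best;
}

// Assumed proportional rule: speed grows with the gap beyond the follow
// distance (e.g. 0.5 m), is zero inside it, and never exceeds maxSpeed.
double followSpeed(double distanceToTarget, double followDistance,
                   double gain, double maxSpeed) {
    assert(followDistance > 0.0 && gain > 0.0 && maxSpeed > 0.0);
    const double speed = gain * (distanceToTarget - followDistance);
    return std::min(std::max(speed, 0.0), maxSpeed);
}
```

With a follow distance of 0.5 m, a child who stops is approached until the gap closes to 0.5 m, at which point `followSpeed` returns zero; a faster child opens the gap and the commanded speed rises with it, which loosely mirrors the coupling of pace described in the text.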

Figure 7. Follow Me scenario.

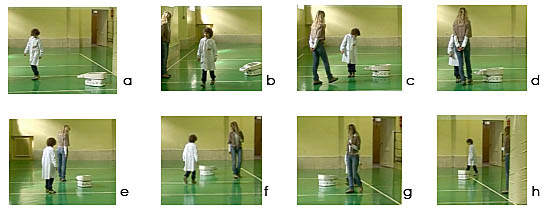

Frames a-h in Figure 8 show the Follow Me scenario played with a very early version of the robot prototype during an experiment at a primary school in Siena, Italy. In this version, the appearance of the robot was not yet finalized, but a great deal of attention was put into designing coordination dynamics between the robot and the child. In fact, the trajectory, pace and speed of the child’s movement were dynamically coupled to a corresponding actualization in the robot’s movements with a similar form, timing, and intensity.

Figure 8. Follow Me scenario: trials at the school.

The child involved in the trial was nine years old and had a mild cognitive disability entailing a learning delay and difficulties in focusing attention on the same activity for a sustained time. In Figure 8, we can see the child walking in the gym of the school being followed by the robot (frame a) that keeps the same pace and trajectory of movement. She looks at the teacher while she is walking and shows that the robot is able to follow (b). Sometimes, she stops and slows down and the robot synchronises its movement to the child’s pace. All of a sudden, the teacher starts moving, passing quickly in between the child and the robot (c – d). By doing so, she attracts the attention of the robot which starts following her instead, coordinating its movement to the teacher’s speed. The child observes the scene (e) and tries to obtain the same effects by walking between the teacher and the robot (f – g). Ultimately, the initiative is successful and the student can get the attention of the robot and have it follow her without hesitation (h), while adjusting the pace and speed of its movement to those of the student’s movement.

The video recording of this scene was analysed and discussed with the teachers, where it was agreed that the behaviour of the child was remarkable. She was focused on the activity which lasted 30 minutes without interruption, and which produced interesting variations in the behaviour of the child. She enjoyed trying out different movements in the space, changing the geometry of her trajectory, increasing or decreasing her speed, and stopping and going back to experience the tuning of her way of walking to the robot’s movement. The robot showed a clear intentional behaviour that was situated and contingent on the behaviour of the other actors (child and teacher). The effect was mainly due to a shared perceptual activity that was embodied and contingent.

Implementing Perceptual Crossing through Micro Movements

Another exercise game and symbolic play scenario implemented in the robot is Get in Contact. The game is played by one or more children, and an adult has a supportive role during the activity to stimulate storytelling and to control the behaviour of the robot. Through a wireless Ultra Mobile PC unit, the adult can dynamically select the robot’s behaviour among a set of behavioural patterns throughout the activity. Each behavioural pattern is characterized by a certain configuration of the robot movements, interfaces (e.g. face expressions), and covering module transformations.

At the beginning of the game, the adult selects the behavioural pattern expressing a ‘feeling of fear’. Here, the robot does not approach the child and maintains a safe distance from him or her (Figure 9 step 1). When the child tries to approach the robot, it retreats and its digital fur gets darker and rougher. Such a pattern creates a context that encourages the children to interpret the robot’s behaviour and to change their behaviour towards the robot accordingly (e.g., to approach the robot kindly). When the child gently approaches the robot, the adult modifies the behavioural pattern and the robot now approaches the child showing warm colours in order to invite the child to a more intimate interaction (Figure 9 step 2). The adult can then select the behavioural pattern specifically related to tactile exploration. The robot and the child are next to each other, and when the child touches the robot, it responds as if it were purring to engage the child in an intimate and emotional exchange (Figure 9 step 3).

Figure 9. Get in Contact scenario.

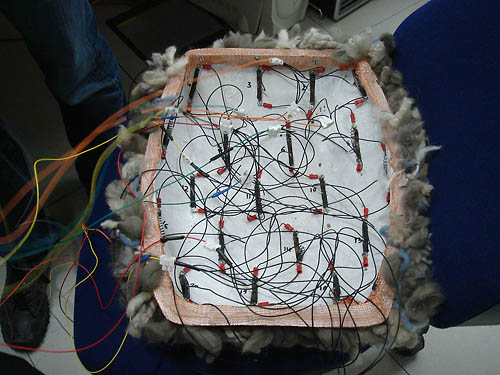

This play scenario has been enriched through the development of a covering module of interactive fur (Figure 10) that implements dynamics of perceptual crossing. The fur is made of a soft woolen cover with static and moving hairs. The static hairs are knotted on a copper knitted fabric covering a dome-like fiberglass shell. The moving hairs are also fixed to the copper fabric, but their lower part is connected to a Nitinol spring (Figure 11).

Figure 10. The interactive fur.

Figure 11. The inner shell of the interactive fur with Nitinol springs.

A total of 20 moving hairs are distributed on top of the shell. Each Nitinol spring is connected at the centre to an electric wire wrapping the hairs. The Nitinol springs are fixed to the inner part of the dome shell by means of screws, and the electric wires are inserted through holes in the shell itself. When electricity passes from one extremity of the spring to the centre, the other extremity contracts. In this way, the electric wire at the centre of the spring moves left and right together with the hair it is wrapped around. The movement of the hair can be controlled in timing, intensity and form. Since the hair is inserted in the copper fabric, which is not elastic, the movement of the lower part of the hair is transformed into a rotation of the hair, which in some cases can reach more than 100 degrees. When half of the spring contracts, it is necessary to wait at least 20 seconds for it to cool down before the other half of the spring can contract. This makes the effect of the moving hairs seem quite natural, similar to the fur of an animal.
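The cooling constraint just described (one half of a spring must rest for at least 20 seconds before the other half may contract) can be expressed as a small guard in the control software. The sketch below is an assumption about how such a guard might look, not the prototype's code: the class `SpringCooldown`, the `Half` labels and the millisecond clock are all hypothetical, and timestamps are assumed to come from a free-running counter such as Arduino's `millis()`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical labels for the two halves of one Nitinol spring.
enum class Half { Left = 0, Right = 1 };

// Guards one spring: a half may contract only if the opposite half has
// never been fired or was last fired at least cooldownMs ago.
class SpringCooldown {
public:
    explicit SpringCooldown(std::uint32_t cooldownMs = 20000)
        : cooldownMs_(cooldownMs) {
        assert(cooldownMs > 0);
    }

    bool canContract(Half half, std::uint32_t nowMs) const {
        const int other = 1 - static_cast<int>(half);
        if (!fired_[other]) return true;
        // Unsigned subtraction stays correct across counter wrap-around.
        return nowMs - lastFiredMs_[other] >= cooldownMs_;
    }

    // Records the contraction and returns true if it is allowed;
    // returns false and changes nothing otherwise.
    bool tryContract(Half half, std::uint32_t nowMs) {
        if (!canContract(half, nowMs)) return false;
        const int index = static_cast<int>(half);
        fired_[index] = true;
        lastFiredMs_[index] = nowMs;
        return true;
    }

private:
    std::uint32_t cooldownMs_;
    bool fired_[2] = {false, false};
    std::uint32_t lastFiredMs_[2] = {0, 0};
};
```

One instance per spring would be enough for the 20 moving hairs; a caller that receives `false` from `tryContract` simply leaves the hair where it is until the opposite half has cooled.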

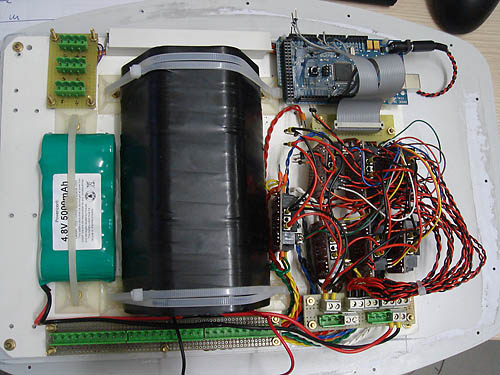

The hardware architecture (Figure 12) of the module is composed of the following components:

- Input Pins: These pins are used to get inputs from sensors. In particular, SRF04 sensors have been used to obtain object proximity information, and a long-distance microphone to capture sound variations.

- Output Pins: The Nitinol wires inserted in the fur are controlled by an Arduino Mega microcontroller. Each interactive hair is composed of a Nitinol wire and covered by a heat-resistant fabric.

- Arduino Mega: A microcontroller board based on the ATmega1280. It has 54 digital input/output pins (of which 14 can be used as PWM outputs), 16 analog inputs, 4 UARTs (hardware serial ports), a 16 MHz crystal oscillator, a USB connection, a power jack, an ICSP header, and a reset button.

- L298 Motor Driver: The L298 is a dual motor driver through which the Arduino pins control the Nitinol wires by changing their input voltage. The prototype uses two batteries and eight motor drivers, which can be controlled independently to obtain different behaviours of the fur.

- 5V Battery: This battery is used to power the Arduino Mega and the L298 Motor Drivers.

- 18V Battery: This battery is used to power the Nitinol wires. This kind of battery is necessary because the prototype draws on its maximum discharge capability for a short time to obtain a realistic sudden movement.

Figure 12. The prototype hardware architecture.
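As a rough illustration of how proximity information can be derived from an ultrasonic sensor of this kind, the sketch below converts the width of an echo pulse into a distance. It assumes that the sensor reports the round-trip time of the ultrasonic burst as a pulse width in microseconds and that sound travels at about 343 m/s in air at room temperature. The function name is hypothetical and is not taken from the prototype.

```cpp
// Converts an ultrasonic echo pulse width (microseconds) into a distance (cm).
// Assumption: sound travels ~343 m/s, i.e. ~0.0343 cm per microsecond.
// The pulse covers the trip to the object and back, so the result is halved.
double echoToCentimetres(double echoMicroseconds) {
    constexpr double kSpeedOfSoundCmPerUs = 0.0343;
    return echoMicroseconds * kSpeedOfSoundCmPerUs / 2.0;
}
```

Under these assumptions, an echo of 1000 microseconds corresponds to an object roughly 17 cm away.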

A software program written in C/C++ calculates the speed at which a person approaches the robot, using three sequential position values to limit the effect of measurement noise. The input voltage to the wires and the stimulation time are then varied according to the approach speed (which ranges between 0 and a maximum speed threshold).
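A minimal sketch of this calculation is given below. The text does not say how the three position values are combined, so the sketch assumes that the two successive differences are averaged. It also assumes a linear mapping in which a faster approach yields a higher voltage and a shorter stimulation time. All names and the two duration constants are hypothetical; only the 18 V maximum comes from the description of the prototype.

```cpp
#include <algorithm>

// Illustrative constants. Only the 18 V maximum is taken from the text;
// the two durations are placeholders chosen for the example.
constexpr double kMaxVolts = 18.0;
constexpr double kSlowMs = 3000.0;  // stimulation time at zero approach speed
constexpr double kFastMs = 300.0;   // stimulation time at maximum approach speed

struct Stimulation {
    double volts;       // voltage applied to the Nitinol wires
    double durationMs;  // how long the voltage is applied
};

// Approach speed (cm/s) from three sequential distance readings (cm) taken
// dtSeconds apart. Averaging the two successive differences damps the effect
// of a single noisy reading. A positive result means the person is approaching.
double approachSpeed(double oldest, double middle, double newest, double dtSeconds) {
    double v1 = (oldest - middle) / dtSeconds;
    double v2 = (middle - newest) / dtSeconds;
    return (v1 + v2) / 2.0;
}

// Clamps the speed to [0, maxSpeed] and maps it linearly onto the voltage
// and stimulation time (assumed mapping: faster means stronger and shorter).
Stimulation stimulationFor(double speed, double maxSpeed) {
    double s = std::max(0.0, std::min(speed, maxSpeed)) / maxSpeed;
    return Stimulation{s * kMaxVolts, kSlowMs + s * (kFastMs - kSlowMs)};
}
```

For instance, readings of 100, 90 and 80 cm taken 0.5 s apart give an approach speed of 20 cm/s. With a maximum speed threshold of 40 cm/s, this maps to half of the maximum voltage.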

Different implementations and controls of this module have been tested by embedding different kinds of sensors. For example, a software program has been developed to control sound sensors using two different thresholds. The low threshold is the minimum sound value needed to obtain a certain kind of behaviour, called “quiet activation”. In this case, a small voltage is applied to the hairs for a long time, so that the hairs warm up slowly and activate slowly. When the hairs reach complete activation and extension, the voltage stimulation is stopped so that the hairs can cool down and slowly return to the deactivation position. When the hairs are midway through their descent, a small voltage is applied again to allow the hairs to return to the maximum extension position. At this point, the stimulation is stopped for good and the hairs slowly reach the deactivation position. When the sound value exceeds the high threshold, a new behaviour called “afraid activation” is triggered. In this case, the maximum input voltage (18V) is sent to the hairs to obtain a sudden complete activation, giving the effect of the fur of a frightened pet.
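The two-threshold logic and the phases of the quiet activation can be sketched as follows. This is an illustrative reading of the description above, not the prototype's code: the type and function names are hypothetical, and the sound level is assumed to be an integer sensor reading.

```cpp
// Behaviours named in the text, plus an idle state for sounds that are too quiet.
enum class FurBehaviour { Idle, QuietActivation, AfraidActivation };

// The low threshold is the minimum value that triggers quiet activation;
// afraid activation is triggered only when the high threshold is exceeded.
FurBehaviour classifySound(int level, int lowThreshold, int highThreshold) {
    if (level > highThreshold) return FurBehaviour::AfraidActivation;
    if (level >= lowThreshold) return FurBehaviour::QuietActivation;
    return FurBehaviour::Idle;
}

// Phases of the quiet activation, in the order described in the text.
enum class QuietPhase {
    Warming,    // small voltage for a long time, hairs rise slowly
    Cooling,    // voltage off, hairs descend to the midway point
    Rewarming,  // small voltage again, hairs return to maximum extension
    Released    // voltage off for good, hairs return to rest
};

// Advances to the next phase; Released is the final phase.
QuietPhase nextPhase(QuietPhase phase) {
    switch (phase) {
        case QuietPhase::Warming:   return QuietPhase::Cooling;
        case QuietPhase::Cooling:   return QuietPhase::Rewarming;
        case QuietPhase::Rewarming: return QuietPhase::Released;
        default:                    return QuietPhase::Released;
    }
}

// Voltage is applied only while the hairs are being warmed.
bool voltageOn(QuietPhase phase) {
    return phase == QuietPhase::Warming || phase == QuietPhase::Rewarming;
}
```

Keeping the classification separate from the phase sequence means the thresholds can be tuned for a given microphone without touching the timing of the hairs.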

Figure 13 shows an interaction scenario in which the robot has interactive fur connected to audio sensors. If the person approaches from the right side and whispers into the robot’s right “ear”, the fur rises gently, starting from the right side. Children usually find it more fun to shout in order to frighten the robot. In this case, the fur reacts suddenly and the robot moves away.

Figure 13. Interactive fur with sound sensors.

Figure 14 shows a different robot behaviour in response to an approaching person. Here, the robot has interactive fur connected to proximity sensors. When a person approaches the robot, its hairs rise in a way that corresponds in timing and intensity to the person’s movement. If the person moves toward the robot quickly, the fur reacts with a quick movement.

Figure 14. Interactive fur with proximity sensors.

Figure 15 shows a variation in the implementation of perceptual crossing using LEDs in a free scenario, where the robot moves autonomously in a room without any specific goal. When a person enters the room and crosses the robot’s path, the LEDs light up and follow the person passing by. This simple behaviour is extremely expressive and is interpreted as intentional, in that the robot perceives the person and shows its readiness to interact.

Figure 15. Interactive fur with LEDs.

Conclusions

Most of the studies conducted to date to investigate the mechanisms involved in shared intentionality consider the possibility of sharing another’s intentionality as granted by an inferential cognitive process based on the discrimination of, first and foremost, facial expressions, but also of body movement, gestures, and language. In this view, the ability to recognize intentionality becomes a prerequisite for adopting the other’s outlook and separating it from one’s own. This allows one to share the other’s intentionality in a secondary way and to represent it. For this discrimination to take place, it is necessary to acknowledge the other as an animated entity with intentions and goals, capable of expressing its internal state.

For social robots, intentionality is a fundamental characteristic. Their credibility as autonomous entities is given by their ability to show intentions, and to express and pursue them. But what are the minimum requirements that need to be fulfilled to design social robots capable of showing and sharing intentionality in interaction with human beings? This paper tries to answer this question by adopting an alternative view inspired by the concept of perceptual crossing. According to this view, some of the mechanisms underlying the recognition of others as intentional entities are intrinsic to the shared perceptual activity: we perceive others while being perceived. This mutual interdependency is a product of perceptual crossing and its dynamics.

Along these lines, in order to design robots able to engage in social interaction with human beings, we do not necessarily need to represent internal states and implement complex inferential processes. The prototypes described above attempt to enable perceptual crossing in a direct, non-mediated perceptual way. The design solutions adopted do not require a representation of complex internal states and inferential mechanisms.

From the review of these design cases, it is clear that a fundamental challenge for the design of interactive objects, including social robots, is to enable mechanisms for perceptual crossing based on the awareness of perceiving while being perceived by the other. Perceptual crossing is a fundamental perceptual competence for the aesthetic experience. Meaning is released in dialogue as we perceive and act in the world while being perceived by the world itself. The perception of mutual affordances shapes the interaction and is a fundamental ingredient of the aesthetic experience.

Acknowledgments

I am grateful to the Iromec partners, experts, and children who collaborated and participated in the research project. Special thanks go to Claudio Moderini for his support in the robot design, to Ernesto Di Iorio of QuestIt who implemented the interactive fur, and to Riccardo Marchesi of InnTex s.r.l. for producing the textile of the interactive fur.

References

- Auvray, M., Lenay, C., & Stewart, J. (2009). Perceptual interactions in a minimalist virtual environment. New Ideas in Psychology, 27(1), 32-47.

- Csikszentmihalyi, M. (1990). Flow: The psychology of optimal experience. New York: Harper and Row.

- De Jaegher, H. (2009). Social understanding through direct perception? Yes, by interacting. Consciousness and Cognition, 18(2), 535-542.

- Fogarty, J., Forlizzi, J., & Hudson, S. E. (2001). Aesthetic information collages: Generating decorative displays that contain information. In Proceedings of the 14th Annual ACM Symposium on User Interface Software and Technology (pp. 141-150). New York: ACM.

- Hummels, C. C. M., Ross, P. R., & Overbeeke, C. J. (2003). In search of resonant human computer interaction: Building and testing aesthetic installations. In M. Rauterberg, M. Menozzi, & J. Wesson (Eds.), Proceedings of the 9th IFIP Conference on Human-Computer Interaction (pp. 399-406). Amsterdam: IOS Press.

- Marti, P., & Giusti, L. (2010). A robot companion for inclusive games: A user-centred design perspective. In Proceedings of the IEEE International Conference on Robotics and Automation (pp. 3-8). Piscataway, NJ: IEEE.

- Petersen, M. G., Iversen, O. S., Krogh, P. G., & Ludvigsen, M. (2004). Aesthetic interaction: A pragmatist’s aesthetics of interactive systems. In Proceedings of the 5th Conference on Designing Interactive Systems (pp. 269-276). New York: ACM.

- Robins, B., Ferrari, E., & Dautenhahn, K. (2008). Developing scenarios for robot assisted play. In Proceedings of the 17th IEEE International Workshop on Robot and Human Interactive Communication (pp. 180-186). Piscataway, NJ: IEEE.

- Wensveen, S., Overbeeke, C. J., & Djajadiningrat, T. (2002). Push me, shove me and I show you how you feel: Recognising mood from emotionally rich interaction. In Proceedings of the 4th Conference on Designing Interactive Systems (pp. 335-340). New York: ACM.