Approachability: How People Interpret Automatic Door Movement as Gesture

Stanford University, Stanford, USA & California College of the Arts, San Francisco, USA

Willow Garage, Menlo Park, USA

Automatic doors exemplify the challenges of designing emotionally welcoming interactive systems—a critical issue in the design of any system of incidental use. We attempt to broaden the automatic door’s repertoire of signals by examining how people respond to a variety of “door gestures” designed to offer different levels of approachability. In a pilot study, participants (N=48) who walked past a physical gesturing door were asked to fill out a questionnaire about that experience. In our follow-up study, participants (N=51) viewed 12 video clips depicting a person walking toward and past an automatic door that moved with different speeds and trajectories. In both studies, our Likert-scale measures and open-ended responses indicated significant uniformity in participants’ interpretation of the behaviour of the door prototypes. The participants saw these motions as gestures with human-like characteristics such as cognition and intent. Our work suggests that even in non-anthropomorphic objects, gestural motions can convey a sense of approachability.

Keywords – Emotion, Gestures, Movement, Physical Interaction, Welcome.

Relevance to Design Practice – This research will be useful to designers of interactive objects seeking to actively engage users or to communicate usage suggestions or product availability.

Citation: Ju, W., & Takayama, L. (2009). Approachability: How people interpret automatic door movement as gesture. International Journal of Design, 3(2), 77-86.

Received March 12, 2009; Accepted July 13, 2009; Published August 31, 2009

Copyright: © 2009 Ju & Takayama. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: wendyju@stanford.edu

Introduction

Conveying approachability is a critical aspect of interaction design where designers seek to invite people to interact with designed services. It is particularly critical in designing systems that people use incidentally like doors, vending machines or kiosks (Dix, 2002). Here, the stakes for the user’s experience are high. If such systems do not convey a sense of welcome to passersby and engage them in interaction, subsequent niceties and refinements of the system’s design are effectively irrelevant. Unlike many aesthetic qualities, such as visual form or personality, approachability is a dynamic property that can vary according to the time of day, the state of the system or the identity of the person the system is addressing. Dynamicism is a unique and fundamental aspect of interactive products, but there are few design conventions to guide the design of dynamic behaviors; designers of interactive systems often grapple with how to convey approachability.

Automatic doors exemplify the possibilities and pitfalls of designing approachable interactive systems. We have all experienced the welcome, convenience and ease of having doors sweep open as we draw near a building. Automatic doors are common enough to be conventional, invisible—almost unremarkable—and yet they still suffer from interaction problems. Any sustained observation of a building employing automatic doors reveals numerous breakdowns: people have difficulty distinguishing automatic doors from non-automatic doors; people inadvertently trigger the doors without meaning to; people walk toward the door too quickly, or not quickly enough; people are frustrated in their attempts to trigger the door before or after regular hours. These problems show that extended use and familiarity are not sufficient to create a critical sense of approachability; the conventions of design fail to respond to the broader dynamics surrounding the door to help people understand when the door is offering ingress, and when it is not.

What guidance, then, can we offer the designers of automatic doors? One possible answer lies in the theory of implicit interactions (Ju, 2008). It posits that the gestures and patterns of interaction that people use to subtly communicate queries, offers, responses and feedback to one another can be applied analogously in the design of interactive devices to improve people’s ability to “communicate” with interactive devices without recourse to explicit speech. These gestures and interaction sequences could include aesthetic considerations, but are fundamentally functional concerns about whether interactants notice and properly interpret implicit behaviors and signals.

The “offer” is one class of implicit interaction. Offers perform the critical function of alerting potential interactants to the possibility of a joint action (Clark, 1996). A doorman can offer to open a door for a passerby, and thereby invite them into a building, by making eye contact, overtly placing his hand upon the door handle, motioning towards the door and even opening the door slightly. These actions let people know that they are able and welcome to enter through the door, and people respond predictably to this social engagement. The theory of implicit interactions suggests that we can design automatic doors analogously, employing equivalent sequences to enable engagement, overt preparation, deictic reference and demonstration, to convey a sense of welcome and to achieve a predictable response.

In this paper, we present a pair of studies that examine the use of door gestures to present different degrees of “approachability.” In the first study, we use “Wizard of Oz” techniques to gesture physical doors at pedestrians. In the second, we use web-based video prototypes to show participants a range of door gestures. The goal of both studies is to show that door gestures are interpreted in a predictable fashion by a range of participants, even when the door gestures are non-conventional. This work functions as a “proof of concept” for the use of communicative analogues as suggested by the theory of implicit interactions. Designers looking for insights into how to convey approachability, or other dynamic characteristics, can look to human-human interactions for conventions of communication when there are no conventions of design to fall back on.

Related Work and Theory

In our studies, we are examining how interactive objects’ actions influence people’s cognitive, affective and behavioral response. Much research has been done on the influence of visual and tactual aspects of a product’s design (e.g., Crilly, 2004; Krippendorf, 2008; Boess, 2008) and, more recently, on the role of aesthetics of interaction (e.g., Dalsgaard, 2008; Baljko, 2008). However, few studies address the functional role that interaction plays in user experience. Our study seeks to validate a theory about how to pattern the design of interactive products around human implicit interactions.

Although implicit interactions may precede, prevent or augment verbal or other explicit communication, they mediate interaction without requiring “linguistic” communication (Clark, 1996). Implicit interactions have two key qualities: they are dynamic, adapting their appearance, behaviors and responses to changing situations, and they are demonstrative, adopting embodiment and action for expression. These two properties distinguish implicit interactions from functional actions and explicit interactions. Functional actions do not necessarily change and are not necessarily meant to be interpreted in any way. Explicit interactions are literal, employing words, symbols or graphic elements, rather than embodied meanings. People use implicit interactions to communicate queries, offers, responses and feedback to one another all the time. We extend an open hand to offer help; we gently pull back objects to signal that they are not for sharing; we avoid eye contact if we don’t wish to speak to someone. The theory of implicit interactions argues that such interactions can be applied analogously to the design of interactive devices to improve people’s ability to “communicate” intuitively with interactive devices (Ju, Lee, & Klemmer, 2008).

The theory of implicit interactions has its roots in human-centered design methodology. The design of implicit interactions requires practitioners to spend ample time observing people to understand their interaction patterns, and also to test designed interactions to gauge people’s responses to them. However, the principal qualities of implicit interaction, dynamicism and demonstration, differentiate it from other human-centered approaches. For instance, previous work on product personalities (Desmet, 2008) and interactive characters incorporates physical interaction and animated behaviours into interactive product design, but it employs action or embodiment to convey intrinsic qualities, such as character, personality or purpose, rather than the changing dynamic qualities, such as mood, readiness or availability, that implicit interactions would communicate. Such work also tends to focus on subjective concerns as opposed to pragmatic issues about how to communicate to enable joint action.

The use of physical movement and other implicit means of signaling can be thought of as an extension to the theory of affordances. Affordances are variously described as the actual (Gibson, 1979) or perceived (Norman, 1988) properties of an object that relate its potential for use by the perceiver. Gaver extended the notion of affordances into the realm of the interactive with the concept of sequential affordances, which are revealed over time (Gaver, 1991). Objects employing these complex affordances can be thought of as dynamically communicating potential for action through their unfolding behavior. Although there is substantial overlap between the design principles suggested by the theory of affordances and that of implicit interaction design, there remains an important distinction between the two. Affordances rest on people’s perceptual abilities to discover potential use, whereas implicit interactions rely on people’s communicative abilities regarding potential use. To improve an affordance to enter a building, a designer would make the passability of the doorway more obvious. To improve an implicit interaction to enter a building, a designer would make the doorway express that the passerby was welcome to enter.

The aforementioned example illustrates the social aspects of implicit interaction. People often use the non-verbal communication channel employed in implicit interactions to express feelings, emotions, motivations and other implicit messages (Argyle, 1988). Indeed, the instinctive reflex to interpret emotional expression in perceived actions causes people to attribute emotional motivations to non-human and even non-animal actors. For instance, Heider and Simmel (1944) found that people interpreted moving objects in the visual field “in terms of acts of persons.” Subsequent studies by Michotte (1962) involving simple depictions of two moving balls showed that while some movements elicited “factual” descriptions, others caused people to attribute motivations, emotions, age, gender and relationships to the two objects. This suggests that designers can design interactive environments to signal subtly and expressively, much as animators create subtle expressiveness in otherwise inanimate objects (Lasseter, 1987). This socio-emotional aspect of implicit interactions is notably absent from discussions about affordance. As a consequence, implicit interactions are a natural and powerful way to communicate messages about engagement or avoidance, approval or rejection.

Study 1: Physical Prototype Pilot

Study Design

As an exploratory pilot study, we employed a field experiment on gesturing doors. We used Wizard of Oz techniques (Dahlbäck, 1993) to make a physical building door gesture at participants who happened to be walking near the door during its deployment (N=48). The primary interests of the pilot study were (1) how people interact with the door and (2) how they interpret its dynamic motions. We took note of whether the participant was walking toward the door or walking by the door at the time of the encounter with the moving door. Over a three-day period, we tried three different door trajectories: open, open with a pause, and open, then quickly close. This was a between-participants study; each participant only saw one of the door trajectories.

Materials

For this experiment, we selected one of a set of double doors that featured a large pane of glass that enabled people to see into the building. A human operator stood to the side of the door, out of view of passersby, and acted as a wizard, pushing the door with a mechanical armature attached to the door’s push bar. We used gaffer’s tape to hide the armature and Contact Paper to obscure the windows to the sides of the door to create the illusion that the door was opening on its own. (See Figure 1 for the experiment as seen from inside the building and see Figure 2 for the view from outside the building.)

Figure 1. Wizard of Oz setup. A hidden door operator uses a mechanical armature to gesture the door.

Figure 2. Person walking (a) by and (b) towards the door. Note the monitor on the right.

The paper questionnaire contained two open-ended questions: “What did you think was happening when you saw this automatic door move?” and “Assuming it functioned properly, how did you interpret the door’s movement?” The questionnaire also included closed-ended questions that queried participants on 10-point scales with the following questions and anchors:

- How did you feel about the door?

- (1) very negative – (10) very positive

- The door seemed to intend to communicate something to me.

- (1) strongly disagree – (10) strongly agree

- The door seemed to think when it communicated with me.

- (1) strongly disagree – (10) strongly agree

- The door was reluctant to let me enter.

- (1) strongly disagree – (10) strongly agree

- The door was welcoming me.

- (1) strongly disagree – (10) strongly agree

- The door was urging me to enter.

- (1) strongly disagree – (10) strongly agree

Procedures

The procedure for the study required three to four experimenters. One was the door operator mentioned earlier. Another experimenter acted as a monitor, waiting casually outside of the building and surreptitiously triggering an alert to the door operator inside via walkie-talkie when pedestrians neared the door. The other experimenter(s) approached the pedestrians with the paper questionnaire after they had seen the gesturing door move.

Only those people who approached the door from the direction shown in Figures 1 and 2 were chosen to encounter the gesturing door because anyone approaching from the other direction might have seen the door operator and armature. Experimenters approaching people first queried participants to gauge whether they had noticed the door’s motion before giving them a paper questionnaire. Some people declined to fill out the questionnaire; the most common explanations for non-participation were lack of time and inability to speak English. Most people (48 out of 64) opted to fill out the questionnaire and many even discussed the study with us at some length. Date, time, participant gender and experimenter condition were noted on the back of each questionnaire.

Data Analysis

We used univariate analysis of variance (ANOVA) to analyze the data, using door trajectory as the independent variable and participant walking direction as a covariate. Because participants were not randomly assigned to walking direction conditions, walking direction was not used as a full independent variable. Each questionnaire item was analyzed as a dependent variable in an ANOVA.
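The core of such an analysis can be sketched in a few lines. The following is a simplified illustration only: it computes a plain one-way ANOVA F statistic for door trajectory and leaves out the walking-direction covariate used in the study (a covariate would take up one further degree of freedom in the error term). The function name and the example ratings are hypothetical, not data from the study.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists.

    Each inner list holds the ratings from one condition, e.g. one
    door trajectory. This sketch has no covariate adjustment.
    """
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)

    # Variation of the condition means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual ratings around their own condition mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)

    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical ratings for three trajectories (not study data).
example_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_b, df_w = one_way_anova_f(example_groups)
print(f"F({df_b},{df_w}) = {f_value:.2f}")
```

In practice a statistics package would be used so that the covariate and the p-value are handled properly; the sketch only shows where the F ratio and its two degrees of freedom come from.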

In addition to the statistical analyses, we present descriptive statistics and observations from this pilot study that fed into the next iteration of this study design.

Study 1 Quantitative Results

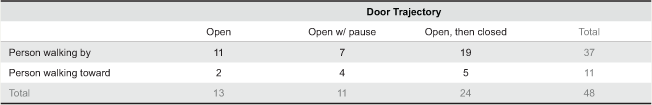

During the experiment, 64 people nearing the door noticed its motion. 48 of them opted to fill out the questionnaire. An additional 38 people did not notice the door’s motion. Distributions of door motions and walking trajectories for participants who noticed the door move and filled out the questionnaire are reported in Table 1.

Table 1. Frequency distribution of pilot study participants for conditions

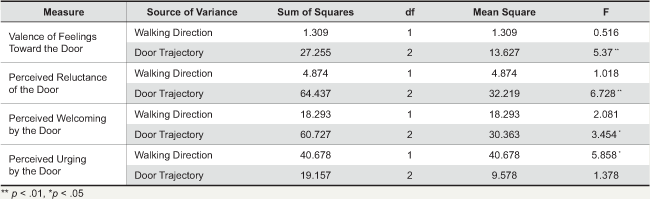

Door trajectory had a significant influence on valence of feelings toward the door, perceptions of reluctance, welcoming and urging on the part of the door. Walking direction had a significant influence on perceptions of the door as urging one to enter. These results are presented in Table 2 and are further described in this section. Differences in sample sizes are due to non-responses by some participants to some questions.

Table 2. Study 1: Analysis of Covariance Summary for Influences of Door Trajectory (Independent Variable) and Person Walking Direction (Covariate) Upon Valence of Feelings Toward the Door, Perceived Reluctance of the Door, Perceived Welcoming by the Door, and Perceived Urging by the Door.

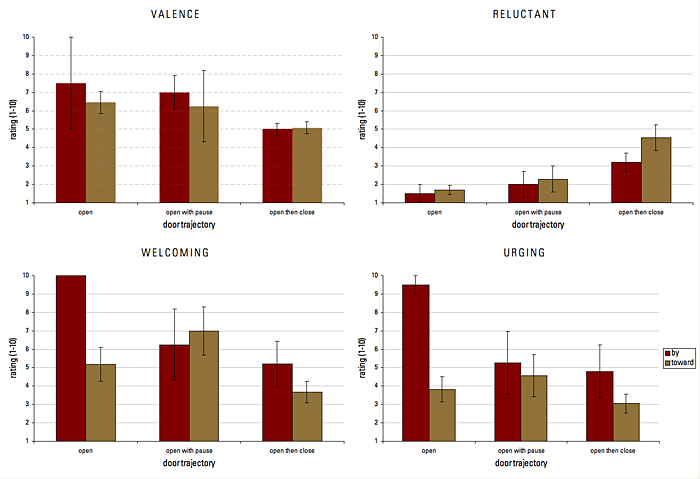

Door trajectory significantly affected the valence (negative to positive) of participants’ feelings toward the door, F(2,44)=5.37, p<.01: open (M=6.62, SD=2.06), open with pause (M=6.55, SD=1.57), and open, then close (M=5.06, SD=1.27). Bonferroni post hoc comparisons of door trajectory revealed a significant difference between the “open” and “open, then close” door trajectories, p<.05. No significant main effect for walking direction was found.

Door trajectory also significantly affected how reluctant the door seemed to be, F(2,43)=6.73, p<.01: open (M=1.67, SD=0.78), open with pause (M=2.18, SD=1.66), and open, then close (M=4.25, SD=2.77). Bonferroni post hoc comparisons of door trajectory revealed a significant difference between the “open” and “open, then close” door trajectories, p<.01. Again, no significant main effect for walking direction was found.

Figure 3. Mean +/- std. error values for Study 1 Door Perception Factors.

Door trajectory also significantly affected how welcoming the door seemed to be, F(2,44)=3.45, p<.05: open (M=5.92, SD=3.33), open with pause (M=6.73, SD=3.44), and open, then close (M=4.00, SD=2.59). The difference between the “open with pause” and “open, then close” door trajectories approached significance, p=.08. No main effect for walking direction was found.

Finally, walking direction significantly affected how urging the door seemed to be, F(1,44)=1.38, p<.05: walking by (M=3.57, SD=2.44) and walking toward (M=5.82, SD=3.31). However, door trajectory was not found to significantly affect perceptions of the door as urging one to enter the building.

These data analyses did not reveal significant results for questions about apparent intention or apparent cognition of the door.

Qualitative Results

Written responses to the open-ended questions were too short for any meaningful response coding. The average length of response was 29 characters for the first question (M=29.4, SD=14.8), and 19 characters for the second (M=19.0, SD=15.9). The likely cause for the brevity of response is that participants filled the questionnaires out while standing and on their way to another destination.

Discussion

The results of the pilot study were promising in suggesting systematically predictable interpretations of door motions. Even in the noisy world of people going about their everyday lives, people showed consensus in their responses to the door motions. Other insights gained from the pilot study came from qualitative observations and discussions with participants after they finished the questionnaire. One participant was a retail designer who was interested in the study because the door’s motion caught his attention and made him curious about what was inside of the building; the goal of shops is to entice potential customers to walk through their doors.

One important observation for consideration in real field deployments of such systems is the people who did not notice the moving door. They tended to be walking and talking with others, talking on their mobile phones, listening to music players with headphones or walking very quickly, seemingly in a rush to some other destination. People are not always strolling idly down the street; they are often preoccupied.

One issue with this pilot field experiment was that participants who walked through the door also ended up seeing the door operator before they filled out the questionnaire. Fortunately, the majority of the data came from people walking by the door rather than toward it.

Another issue with this pilot field experiment was that participants who were unhappy with the door were also quite unhappy with the experimenter who requested their time to fill out the questionnaires. In particular, those participants who were walking toward the door and had the door shut in their faces seemed personally offended; several people were consequently unwilling to fill out a questionnaire “for the door.”

Using a field experiment for this study gave us the benefit of seeing how people would respond to gesturing doors in a natural setting, particularly how they would respond the first time they encountered such a door. However, with this experimental setup, it was difficult to ask people to evaluate their reactions towards different door gestures in the context of other possible gestures; once they saw how the door was actually operated, it would be harder to interpret the movements as coming from the door itself. In addition, we were concerned about the effect that the natural variations in door gestures might have on people’s interpretations. Finally, we found that the people encountering the door were usually on the way from one place to another, and were generally too impatient to write more than a couple of words in the written responses. Thus, for a secondary experiment, we decided to use video prototypes of gesturing doors, so that participants would be able to compare the different door gestures and scenarios, would all be looking at the same door gestures and would have more time to explain what they felt different door gestures meant and how they responded.

Study 2: Video Prototype Experiment

Based on the findings and identification of weaknesses in the pilot study, we decided to conduct a more controlled experiment to further test people’s responses to door gestures. In the video prototype study, participants were shown 12 different gestures using video clips embedded in a web-based questionnaire.

As in existing research (Heider, 1944; Michotte, 1962), these studies engage participants in an “interpretative” role (where they are asked to read the interaction) rather than an “interactional” role (where they are asked to engage in the interaction). Although this method sacrifices some ecological validity, the video-prototype study enables better control over “participant” and door interactions, and cleaner isolation of feelings toward the door rather than toward the experimenter or study. In addition, this video prototype could be run as a within-participants study, thus reducing the possibility that inadvertent participant selection and individual difference effects might skew our results across the conditions.

Study Design

This study added one new dimension, door speed, to the previous study design. Using a 2 (person walking direction: walking by vs. walking toward) x 2 (door speed: slow vs. fast) x 3 (door trajectory: open vs. open with pause vs. open then close) within-participants experiment design, we investigated the effects of both the door’s and the passerby’s actions in this human-door interaction. Participants were recruited from a university community (N=51).

Materials

We performed a web-based experiment in which participants were shown 12 web pages, each containing an embedded video of a human-door interaction and questionnaire items. The clips were randomly ordered to address ordering effects. These 12 videos included every combination of our three independent variables: person’s walking direction, door speed and door trajectory. As in the pilot study, the videos showed door gestures performed by a hidden door operator. On each page, participants were asked to play the video, imagining themselves to be the person in the video. To prevent participants from merely reading the person’s reaction as opposed to imagining what their reaction would be, we chose a camera angle that hid the walker’s face and we ended the clip before the person walked through the door or physically reacted to the door’s gestures. Video clips ranged from 4 to 9 seconds in length, were sized at 540 x 298 pixels, and were encoded in Apple QuickTime format (see Figure 4).
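As an illustration of how the 12 clips follow from the three independent variables, the sketch below enumerates the full 2 x 2 x 3 design and shuffles it. The function names are hypothetical, and shuffling separately for each participant with a seeded generator is an assumption; the study states only that the clips were randomly ordered.

```python
import itertools
import random

# Factor levels as described in the study design.
WALKING_DIRECTIONS = ("walking by", "walking toward")
DOOR_SPEEDS = ("slow", "fast")
DOOR_TRAJECTORIES = ("open", "open with pause", "open then close")


def all_conditions():
    """Every combination of direction, speed and trajectory (2 x 2 x 3)."""
    return list(
        itertools.product(WALKING_DIRECTIONS, DOOR_SPEEDS, DOOR_TRAJECTORIES)
    )


def clip_order_for(participant_id, seed=0):
    """Return a reproducible random clip order for one participant.

    Per-participant shuffling and the seeding scheme are assumptions
    made for this sketch, not details reported in the study.
    """
    rng = random.Random(f"{seed}-{participant_id}")
    order = all_conditions()
    rng.shuffle(order)
    return order


for direction, speed, trajectory in clip_order_for(participant_id=1):
    print(f"{direction:15} {speed:5} {trajectory}")
```

Seeding the generator from the participant identifier keeps each order reproducible, which helps when a presentation order needs to be reconstructed during analysis.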

Figure 4. Screenshots of video of person walking by (left) and toward (right) the gesturing door.

Procedure

Participants who volunteered for the study were directed to the web page with gesturing door videos and questionnaire items. After watching each video, participants were asked to describe their experience with the door from the perspective of the person in the video, and to describe what they thought the door was communicating. They were then asked to rate the strength of their agreement or disagreement with three statements about the door, including how reluctant, welcoming or urging the door seemed. We selected these factors because they were significant factors in the first study.

Data Analysis

Because indices are more robust to the variance of individual items, we opted to create a single index of “approachability” for Study 2. Using Principal Component Analysis, we found that the three Likert items (welcoming, urging and reverse-coded reluctant) constituted a single factor, with Cronbach’s α =.91. Therefore, we combined them into an unweighted averaged single factor, approachability.
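The index construction can be sketched as follows. This is a minimal illustration, assuming the same 1 to 10 response scale as the pilot questionnaire (the scale for Study 2 is not stated here); the function names and the example ratings are hypothetical. Cronbach’s α is computed with the standard formula, α = k/(k−1) · (1 − Σ item variances / variance of the summed score).

```python
from statistics import mean, variance

# Assumed response scale; the pilot questionnaire used 1-10 anchors.
SCALE_MIN, SCALE_MAX = 1, 10


def reverse_code(score):
    """Flip a rating so that high reluctance becomes low approachability."""
    return SCALE_MIN + SCALE_MAX - score


def approachability(welcoming, urging, reluctant):
    """Unweighted average of welcoming, urging and reverse-coded reluctant."""
    return mean([welcoming, urging, reverse_code(reluctant)])


def cronbach_alpha(items):
    """Cronbach's alpha for k items, each a list with one score per response."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical ratings from four responses (not study data).
welcoming = [8, 6, 3, 9]
urging = [7, 5, 2, 8]
reluctant = [2, 4, 9, 1]

reversed_reluctant = [reverse_code(r) for r in reluctant]
print(cronbach_alpha([welcoming, urging, reversed_reluctant]))
print([approachability(w, u, r) for w, u, r in zip(welcoming, urging, reluctant)])
```

Reverse-coding before averaging matters: without it, a door rated as highly reluctant would raise the index rather than lower it.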

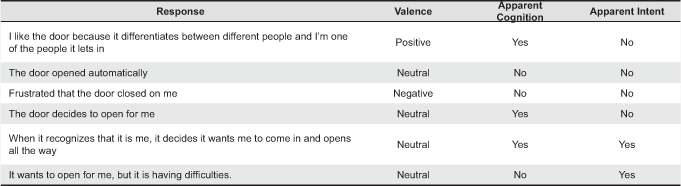

Open-ended responses were coded and averaged across coders for valence (negative, neutral, or positive), apparent cognition (0 or 1), and apparent intent of the door (0 or 1) by two independent coders, who were blind to the experimental conditions. Valence was judged as an apparently positive, neutral or negative feeling toward the door. The coders judged apparent cognition according to how much the response made it seem like the door was thinking. The coders judged apparent intent according to how much the response made it seem like the door wanted or intended to do things. Table 3 presents examples of real responses from participants in this study and how they were rated.

Inter-rater reliability was reasonable: Cronbach’s α values of .714, .616, and .723, for valence, apparent cognition and apparent intent, respectively.

Table 3. Study 2: Example responses from participants and how they were coded in this study

Study 2 Results

Unlike the pilot study, the video prototype study elicited far more descriptive responses to the open-ended questions. Average length of responses was 75 characters for the first question (M=75.1, SD=51.2), and 53 characters for the second (M=53.1, SD=42.1). This far exceeds the lengths from the previous study, despite the fact that each participant filled out 12 times as many open-ended questions.

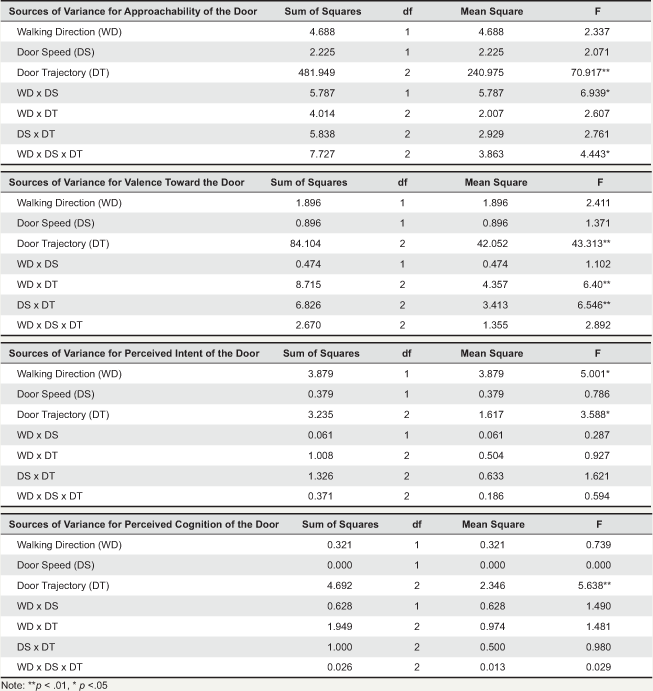

After all of the descriptions were coded and averaged across coders, we used a within-participants full-factorial repeated-measures analysis of variance to investigate the effects of each of the three independent variables (person walking direction, door speed, door trajectory) on each of the four dependent variables (approachability factor, valence of person’s response, apparent cognition attributed to the door, apparent intent attributed to the door). The analyses showed that responses differed systematically across conditions, as shown in Table 4.

Table 4. Study 2: Repeated Measures Analysis of Variance Summary for Influences of Person Walking Direction, Door Movement Speed, and Door Trajectory Upon Perceived Approachability of the Door, Valence of Feeling Toward the Door, Perceived Intent of the Door and Perceived Cognition of the Door.

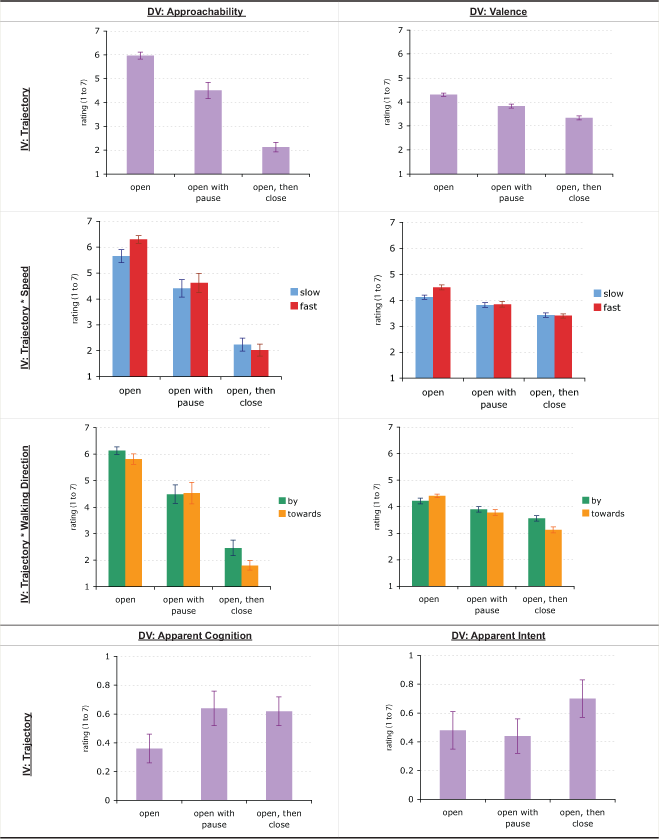

As seen in the repeated measures ANOVA results in Table 4, the door’s trajectory had the most far-reaching effects across all dependent variables: approachability (F(2,30)=70.91, p<.001), valence (F(2,88)=43.31, p<.01), apparent intent (F(2,42)=3.59, p<.05) and apparent cognition (F(2,50)=5.64, p<.01). In general, the gesture in which the door opened and then closed before the person reached it made the door seem more negative, more intentional and less approachable, whereas the gesture in which the door simply swung open was read as approachable, but not necessarily cognitive or intentional. (See Figure 5, Row 1.)

Several two-way interactions were significant or nearly significant. Faster door speeds showed a trend toward exaggerating the effects of door trajectory. They significantly influenced valence, F(2,88)=6.55, p<.01 and nearly significantly influenced approachability, F(2,30)=2.61, p<.09. Similarly, the walking direction of the “participant” showed a trend toward exaggerating the effects of the door trajectory. It significantly influenced valence, F(2,88)=6.40, p<.01, and nearly significantly influenced approachability, F(2,30)=2.76, p<.09. (See Figure 5, Rows 2 and 3.) Bonferroni post hoc comparisons revealed significant differences in the approachability of the door and valence of response toward the door between each of the pairs of conditions, all at the p<.01 level. Bonferroni post hoc comparisons revealed significant differences in perceived intent and cognition of the door at the p<.05 level between the “open” vs. “open with pause” and “open” vs. “open then close” door trajectories.

Figure 5. Mean +/- std. error values for Study 2 Door Perception Factors.

Discussion

The core finding of this study is that people’s interpretations of door gestures are highly systematic across several dimensions of door motion. Despite the novelty of gesturing doors, untrained interactants “intuitively” read the gestures in systematic ways that were very consistent with the findings in pilot study 1. This suggests that people have a common understanding of door interaction and interpret the meaning of door gestures in similar ways, possibly comparable to interpretations of human gestures (McNeill, 2005). This agreement supports the notion that door motion can provide an effective means of implicit communication.

The correspondence between the findings in studies 1 and 2 also points to how employing different experimental methods can address or mitigate potential limitations inherent in each method. The field study had the benefit of being realistic and querying people’s first-hand experience of an interaction. However, it was difficult or impossible to perform as a within-participants design and was subject to a high degree of variability; if people’s reactions to the door movements had not been so strong, this experiment could well have overlooked the effect. The video prototype study addresses the issue of variability and increases the likelihood of identifying causal relationships between interaction factors and people’s reactions. The external validity of this method is limited by the fact that participants are interpreting their perceptions of someone else’s experience, rather than having an experience first-hand. Therefore, it is important that studies of this nature be paired with first-hand field experiments like the one we used as a pilot.

The catch-22 in implicit interaction development is that it is difficult to assess people’s interpretations of implicit actions without distorting the effect by asking about them explicitly. As such, the video prototype technique employed in this study is a methodological contribution to this area of research. Although it will take more subsequent studies to see if people interpreting these interactions are reasonable predictors of how people would feel in an interactive role, the coherence of the two studies indicates that testing with video prototypes can provide a good approximation of people’s real-world responses to an interaction design.

Conclusions

These two experiments indicate that door trajectory is a key variable in the doors’ expression of welcome, with door speed and the context in which the door opens acting as amplifying factors that influence people’s emotional interpretation of the door’s gestures. The wide range of expression available with only one physical degree of freedom suggests that designers can trigger emotional appraisal with very simple actuation. Unlike previous systems, which employed anthropomorphic visual or linguistic features, our interactive doors were able to elicit social response using only interactive motion to suggest cognition and intent. If designers can convey different “messages” in such a highly constrained design space, it seems reasonable to extrapolate that more information could be conveyed with more complex ubiquitous computing and robotic systems.

Although this study focused on doors, our broader goal was to experiment with welcoming users to engage in joint action. Other interaction designers could extend the techniques explored here to a variety of applications: in interactive kiosks, to proactively indicate to users what services are provided (Buxton, 1997); in word processor interfaces, to proactively offer assistance with formatting letters or printing without the use of insufferable talking paperclips (Xiao, Catrambone, & Stasko, 2003); or in future work environments, to indicate selective access to different badge holders (Weiser, 1991).

This research will assist designers of interactive devices in expanding the repertoire of implicitly communicative conventions that can be employed in the design of interactive systems that seek to welcome users. Moreover, our implicit interaction approach takes an important step towards acknowledging that emotional responses to interactive devices may play a functional role as well as an aesthetic one. The approaches we have employed, field studies and video prototype studies, can be very useful when designers need interactions that prompt consistent and objective interpretations, as opposed to the subjective reactions that might be desirable in applications with a more purely aesthetic purpose.

Acknowledgements

We wish to acknowledge Abraham Chiang, Björn Hartmann, Corina Yen, Doug Tarlow, Erica Robles, Micah Lande, Scott Klemmer, Larry Leifer and Clifford Nass for their generous assistance with this research.

References

- Argyle, M. (1988). Bodily communication. New York: Methuen.

- Baljko, M., & Tenhaaf, N. (2008). The aesthetics of emergence: Co-constructed interactions. ACM Transactions on Computer-Human Interaction, 15(3), 11:1-27.

- Buxton, W. (1995). Integrating the periphery and context: A new model of telematics. In Proceedings of IEEE Conference on Graphics Interface (pp. 239-246). Los Alamitos, CA: IEEE Computer Society.

- Buxton, W. (1997). Living in augmented reality. In K. E. Finn, A. J. Sellen, & S. B. Wilbur (Eds.), Video-mediated communication (pp. 215-229). Mahwah, NJ: L. Erlbaum Associates.

- Clark, H. (1996). Using language. Cambridge, UK: Cambridge University Press.

- Clark, H. (2003). Pointing and placing. In S. Kita (Ed.), Pointing: Where language, culture and cognition meet (pp. 243-268). Mahwah, NJ: L. Erlbaum Associates.

- Crilly, N., Moultrie, J., & Clarkson, P. J. (2004). Seeing things: Consumer response to the visual domain in product design. Design Studies, 25(6), 547-577.

- Dalsgaard, P., & Hansen, L. K. (2008). Performing perception—Staging aesthetics of interaction. ACM Transactions on Computer-Human Interaction, 15(3), 13:1-33.

- Dahlbäck, N., Jönsson, A., & Ahrenberg, L. (1993). Wizard of Oz studies: Why and how. New York: ACM Press.

- Desmet, P. M. A., Ortíz Nicolás, J. C., & Schoormans, J. P. (2008). Product personality in physical interaction. Design Studies, 29(5), 458-477.

- Dix, A. (2002). Beyond intention: Pushing boundaries with incidental interaction. In Proceedings of Building Bridges: Interdisciplinary Context-Sensitive Computing. Glasgow, UK: Glasgow University. Retrieved January 25, 2009, from http://www.hcibook.com/alan/papers/beyond-intention-2002

- Gaver, W. W. (1991). Technology affordances. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 79-84). New York: ACM Press.

- Gibson, J. J. (1979). The ecological approach to visual perception. Mahwah, NJ: L. Erlbaum Associates.

- Heider, F., & Simmel, M. (1944). An experimental study of apparent behavior. The American Journal of Psychology, 57(2), 243-259.

- Ju, W., & Leifer, L. (2008). The design of implicit interactions: Making interactive systems less obnoxious. Design Issues, 24(3), 72-84.

- Ju, W., Lee, B., & Klemmer, S. (2008). Range: Exploring implicit interaction through electronic whiteboard design. In Proceedings of the ACM 2008 Conference on Computer Supported Cooperative Work (pp. 17-26). New York: ACM Press.

- Lasseter, J. (1987). Principles of traditional animation applied to computer animation. Computer Graphics, 21(4), 34-44.

- McNeill, D. (2005). Gesture and thought. Chicago: University of Chicago Press.

- Michotte, A. (1962). The perception of causality. London: Methuen.

- Norman, D. A. (1988). The psychology of everyday things. New York: Basic Books.

- Norman, D. A. (2004). Emotional design. New York: Basic Books.

- Norman, D. A. (2007). The design of future things. New York: Basic Books.

- Reeves, B., & Nass, C. (1996). The media equation. New York: Cambridge University Press.

- Schifferstein, H. N. J., & Hekkert, P. (2007). Product experience. Amsterdam: Elsevier Science.

- Weiser, M. (1991). The computer for the twenty-first century. Scientific American, 265(3), 94-100.

- Xiao, J., Catrambone, R., & Stasko, J. (2003). Be quiet? Evaluating proactive and reactive user interface assistants. In Proceedings of the 9th IFIP TC13 Conference in Human-Computer Interaction (pp. 383-390). Amsterdam: IOS Press.