Designing Group Music Improvisation Systems: A Decade of Design Research in Education

Bart J. Hengeveld * and Mathias Funk

Future Everyday Group, Dept. of Industrial Design, Eindhoven University of Technology, Eindhoven, The Netherlands

In this article we discuss Designing Group Music Improvisation Systems (DGMIS), a design education activity that investigates the contemporary challenge design is facing when we go beyond single-user single-artefact interactions. DGMIS examines how to design for systems of interdependent artefacts and human actors, from the perspective of improvised music. We have explored this challenge in design research and education for over a decade in different Industrial Design education contexts, at various geographic locations, in several formats. In this article we describe our experiences and discuss our general observations, corrective measures, and lessons we learned for (teaching) the design of novel, interactive, systemic products.

Keywords – Design, Group Music Improvisation, Systems Design, Internet of Things.

Relevance to Design Practice – We investigate the contemporary challenge of designing beyond single-user single-artefact interactions, a challenge we have explored in design research and education for over a decade. In this article we describe our experiences and reflect on them, aiming to inform design researchers and educators in the field of IoT.

Citation: Hengeveld, B., & Funk, M. (2021). Designing group music improvisation systems: A decade of design research in education. International Journal of Design, 15(2), 69-81.

Received July 23, 2020; Accepted July 20, 2021; Published August 31, 2021.

Copyright: © 2021 Hengeveld & Funk. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content is open-accessed and allowed to be shared and adapted in accordance with the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) License.

*Corresponding Author: B.J.Hengeveld@tue.nl

Dr. Ir. Bart Hengeveld is Assistant Professor in the Future Everyday group at the Department of Industrial Design, Eindhoven University of Technology (TU/e). He has a background in Industrial Design (M.Sc. at TU Delft, Ph.D. at TU/e) and jazz double bass (Codarts Conservatory Rotterdam). His research interests center around the role of sound in the design of and interaction with systems of smart things, building on 25+ years of experience in music composition and performance; he also has an extensive background in tangible and embodied interaction. Since 2016 he has been a member of the Steering Committee of the annual conference on Tangible, Embedded and Embodied Interaction (TEI).

Dr. Mathias Funk is Associate Professor in the Future Everyday group in the Department of Industrial Design at the Eindhoven University of Technology (TU/e). He has a background in Computer Science and a PhD in Electrical Engineering (from TU/e). His research interests include methods and tools for designing with data, designing systems of smart things, and interfaces for musical expression. In the past he has researched at ATR (Japan), RWTH Aachen, Philips Consumer Lifestyle and Philips Experience Design, Intel Labs (Santa Clara), National Taiwan University of Science and Technology, and National Taiwan University. He is also the co-founder of UXsuite, a high-tech spin-off from TU/e.

Introduction

This article should start by pointing out that both authors have a background in design and engineering, as well as in music performance and composition. Having this shared background, we were struck by the parallels between these—at first glance—disjunct worlds, most prominently by how contemporary interactive and increasingly networked technologies and music can be regarded as highly dynamic systems in which multiple actors engage with each other socially and technologically. A difference though is that, whereas the world of design has only recently started delving into multi-user multi-technology systems, music can boast centuries of experience when it comes to shared interactions through systems of musical interfaces (i.e., instruments). Moreover, music has a rich history in accommodating, or even specifically designing for, unpredictability; something that we found lacking in the world of design. We started wondering how the design of aforementioned expressive, networked interfaces could be informed by practices from (improvised) music. We hypothesised that, by looking at the design of multi-user multi-technology systems through the lens of group music improvisation we could explore and deepen approaches to system design.

In the following, we first introduce music improvisation and the branch of design we are active in, before fusing the two into what we call Group Music Improvisation Systems (GMIS). We illustrate an example of such a GMIS before presenting the design research and education program we have been running, called Designing GMIS or DGMIS. Over the course of ten years we have run and tweaked DGMIS in various Industrial Design education contexts, at different geographic locations, in several formats. The main part of this article reports on our DGMIS journey, on our insights and adaptations. We round off by discussing our general observations, our corrective measures, and the lessons we learned for (teaching) the design of novel, interactive, systemic products.

Music Improvisation

As this research aims to inform design by looking at it through the lens of group music improvisation, let us start off by illustrating the kind of group music improvisation we are talking about. We do this by briefly considering what happens at a typical jazz music jam session. This is a setting where musicians—often complete strangers—meet to play music. At these sessions musicians self-organise into performing groups and play whichever tune and style the group feels like, often one from the canon of jazz standards. When the people on stage agree on key and tempo someone does the count-in after which (hopefully) everyone starts at the same moment in the same way. This is where the group music improvisation truly begins, as it is simply impossible to agree on everything beforehand and deliberation is limited in the heat of the moment; you cannot elegantly stop playing and align expectations. Moreover, the fun of group music improvisation lies in its unexpectedness.

There is of course some structure, not least because the musicians share a similar musical worldview: we have a common vocabulary, we play the same scales, we know the same chords, and we’ve listened to similar harmonies for years (Peplowski, 1998). When jamming, tunes follow a typical structure, and the musicians can build on a shared knowledge of canonic recordings and make use of a typical bag of tricks. Most of all though, the musicians will have “learned how to listen … [and are] constantly giving one another signals” (pp. 560-561). One of the more interesting aspects in this is that within an improvising group there is a continuous shift of leadership, within an unstoppable and ever-changing musical system in motion; nobody is solely in charge, and everyone can steer the collective musical direction through their playing. This essentially means that musicians have become attuned to a very specific, non-verbal communication that is often either the music itself or social signals such as eye contact, body position and posture, and other performative aspects.

Group improvisation has been researched in the context of design before of course, for example looking at the interaction modeling of free improvisation (Hsu, 2007), designing collaborative musical experiences (Bengler & Bryan-Kinns, 2013), social aspects of musical performance (Alcántara et al., 2015; Davidson, 1997), collaborative music experiences for novices (Blaine & Fels, 2003), audience interaction in digital music experiences (Stockholm & Pasquier, 2008), musical improvisation as interpretative activity (Klügel et al., 2011; Valone, 1985) and the inter-dependencies in musical performance (Hähnel & Berndt, 2011)—just to give a rough overview. We, however, take a more holistic approach to the relation between improvisation as it is in the world of music and what that could mean for the field of design, at different levels (e.g., artefact, interaction, methodology, or process).

Also, design researchers have proposed a number of new devices and interactions for musical expression that, to some extent, fit the context of musical collaboration in an improvisation setting. There is work on the relation between simple interaction and complex sound (Bowers & Hellström, 2000), supporting an immersive musical experience (Valbom & Marcos, 2007), AudioCubes as a distributed tangible interface proposition (Schiettecatte & Vanderdonckt, 2008), Jam-o-Drum (Blaine & Perkis, 2000) and digital drumming studying co-located, dyadic collaboration (Beaton et al., 2010), and related, rhythmic negotiation (Hansen et al., 2012), or multi-user instruments (Jordà, 2005). Design research has focused on the notion of tangibility in musical expression (Rasamimanana et al., 2011) as well as emotion (Juslin, 1997) and intimacy (Fels, 2004). Also, networked experiences have been in the focus of research, such as in network musics (Kim-Boyle, 2009), interdependent group collaboration (Weinberg et al., 2002), and the RadarTHEREMIN (Wöldecke et al., 2011), the Augmented String Quartet (Bevilacqua et al., 2012) and the reacTable (Jordà et al., 2007). Given the technological or technology-supported nature of designing GMIS, we differentiate from live coding approaches (Brown & Sorensen, 2007) in that GMIS is based on full-fledged design activities that include form and interaction and not just programmed (musical) logic, and approaches to music visualization (Chan et al., 2010; Chew, 2005; Endrjukaite & Kosugi, 2012; Fonteles et al., 2013; Grekow, 2011; Puzoń & Kosugi, 2011) that allow for introspection into the experience.

Systems of Expressive Things

Having read these examples of interaction design for musical expression, what remains is the step from single-artefact to multiple-artefact interactions. After all, like many in the field of design we see a move from designing things, to designing systems of things. Over the past decade, more and more attention has gone into designing for the Internet of Things (IoT), a term used to describe pervasive networks of artefacts with sensing and actuating capabilities, combined with embedded intelligence (Atzori et al., 2010; Fritsch et al., 2018). The artefacts in these networks are only loosely coupled, decentralised, and highly dynamic (Fortino et al., 2018). As such the promise of the IoT is that it may fundamentally transform the role technology plays in practically every aspect of our lives (e.g., Bigos, 2017) although this promise is also meeting less optimistic counterviews (Fritsch et al., 2018).

So, what are systems of—in our case expressive—things and how do they differ from the IoT? While the IoT relies on similar technology, it is a conceptually very different beast: the typical thing in the IoT is a locally deployed sensor, actuator or interface that connects to a cloud system for monitoring, updates and control. What we call systems of expressive things are predominantly local constellations of things, interactive expressive products, that leverage networking technology to exchange data and control amongst the constellation—not further. Such a system or constellation of products is used synchronously (in both time and space) in the form of a musical session where every player and every instrument exchanges signals and reacts accordingly. Ultimately, a system of expressive things turns into a reactive socio-technical network that performatively immerses in musical expression.

Given this rather loose definition, we should emphasize that we are not so much interested in better defining what the IoT is or isn’t, but rather that we are interested in the implications of such technologies on the act of designing. After all, a large part of our everyday professional challenge lies in teaching young designers to help create these highly dynamic socio-technical systems in which multiple humans interact with multiple technologies. We realized early on that, when designing for such complex systems, we cannot rely on the way we have designed for single-user single-product interactions. Instead, we see the need for a methodological turn, a move from designing fixed input-output mappings towards input-output mappings that are emergent and inherently unstable, as these new systems are subject to constant change in terms of context, situatedness, network topology, data and semantics, and often multiple forms of intelligence or smart behavior. System design (Ryan, 2014) essentially means designing for a setting in which high degrees of human and technological agency coexist, resulting in complex and unpredictable, yet expressive behavior (Rowe, 1999) while trying to direct this setting towards a recognizable and inherently meaningful context.

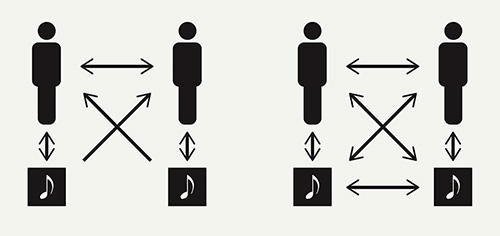

The important conceptual leap in this work is threefold: (1) We introduce new feedback loops between the components of the designed system; (2) This necessitates that the designers take part and perform in the system; and (3) Designing such complex systems cannot be done on paper alone; instead, it needs embodiment, interaction and connectivity (Frens & Hengeveld, 2013). All three points relate design, and design education, to the aforementioned practice of group music improvisation: the act of improvising requires ubiquitous and fast feedback loops, i.e., to shape the experience means to be part of performing it (Funk et al., 2013; Hengeveld et al., 2013). After all, as we pointed out earlier, group music improvisation is something you cannot talk about but must engage in to understand completely, let alone something of which you can control the outcome. Instruments play a large role in performance; without them, there would not be improvisation. The essence of group music improvisation lies in that the outcome is emergent. What we can control are the conditions under which improvisation happens. We therefore posit that the only way to get some grip on (and within) designing Systems of Expressive Things is by creating a dialogue between the subject of design and the act of designing itself. When we apply the lens of systems design, improvisation can take on a new meaning, attributing agency to all actors including instruments (see Figure 1, right), adding technological influence to social interaction. In this paper, such performative systems that allow for rich interactions and interconnections are what we will refer to as Group Music Improvisation Systems, as an operationalization for educational purposes.

Figure 1. During normal music performance (left) two players are fully in control of their own instruments and can only influence each other’s playing indirectly. They can hear each other’s instrument and give social cues. In GMIS (right) players have direct influence over the other musician’s instrument. Moreover, the instruments themselves can influence each other’s characteristics.

Group Music Improvisation Systems (GMIS)

GMIS are composed of musical instruments that are digital or digitally mediated, and as such can communicate among each other and influence each other’s current state and characteristics. This means that all instruments within a GMIS can be played in isolation as well as in a group, and that, in group settings, players do not merely play their own musical instrument but also influence one or more parameters of someone else’s instrument. These parameters include the other instrument’s pitch, timbre, attack, or decay, but can also involve applying filters (e.g., hi-pass, low-pass) or effects (e.g., delay, flanger, bit-crusher). Consequently, all the instrumentalists within the shared group music improvisation experience are forced to relinquish some control over their own musical instrument, while receiving some control over someone else’s instrument. GMIS are systems that are designed to facilitate group music making in an expressive, improvised way. When instruments are played as part of a GMIS they undergo systemic enrichment that creates a more-than-the-sum-of-its-parts situation, the essential quality of a system.

Especially for trained musicians this is a shift in perception. Ultimately, all performers need to shift their focus towards shared control and interplay, as they all constantly interfere with each other’s instruments and all need to work with that interference. While mutual influence in musical performance has always been an integral part of collective music-making through social cueing (Figure 1, left), casting and consequently designing musical instruments as sources of interference is not just novel; it is, as we have experienced over the past decade, an often thoroughly disruptive design experience.

Example GMIS

To give an impression of what such a GMIS looks like we first discuss an example here, before elaborating on the setup of the DGMIS design activities and learnings in the remainder of the article. The example is called Beat My Bass, Pluck My Drum (BMBPMD; Hengeveld et al., 2014), stemming from the very start of our research. BMBPMD is a small-scale GMIS of two instruments for two players: one percussive instrument (see Figure 2, left) and a two-stringed synthesizer controller (Figure 2, right). The percussive instrument consists of a conga drum skin augmented with three touch-sensitive touch pads and a microphone, located below the drum skin to amplify the acoustic sound and pick up trigger signals for the second instrument (processed using an envelope follower to generate events). The percussive instrument can be played as a regular conga drum, but the touch sensitive areas trigger additional synthetic sound alterations.
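To illustrate how a microphone signal can be turned into trigger events with an envelope follower, the following is a minimal sketch in Python. It is not the BMBPMD implementation: the one-pole attack/release smoothing, the hysteresis thresholds, and all function names and parameter values are assumptions chosen for illustration.

```python
import math


def envelope_follower(samples, sample_rate=44100, attack_ms=1.0, release_ms=50.0):
    """Track the amplitude envelope of a signal.

    Uses one-pole smoothing with a fast attack and a slower release
    (both time constants are illustrative assumptions).
    """
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env = 0.0
    envelope = []
    for sample in samples:
        level = abs(sample)  # rectify the signal
        coeff = attack if level > env else release
        env = coeff * env + (1.0 - coeff) * level
        envelope.append(env)
    return envelope


def detect_triggers(envelope, on_threshold=0.3, off_threshold=0.1):
    """Return the sample indices at which a new hit is detected.

    A hit fires when the envelope rises to on_threshold; the detector
    re-arms only after the envelope has fallen to off_threshold, so one
    drum hit produces one event (hypothetical threshold values).
    """
    triggers = []
    armed = True
    for index, value in enumerate(envelope):
        if armed and value >= on_threshold:
            triggers.append(index)
            armed = False
        elif not armed and value <= off_threshold:
            armed = True
    return triggers
```

In such a setup, each detected index would be forwarded as an event to the second instrument; how that forwarding happened in the original prototype is not specified here.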

Figure 2. Beat My Bass (BMB, left) and Pluck My Drum (PMD, right). BMB consists of a conga drum skin augmented with three touch-sensitive touch pads and a microphone, through which it can influence the behavior and sound of PMD, a two-stringed synthesizer which in turn can influence the behavior and sound of BMB.

The two-stringed synthesizer is a custom-built controller consisting of two bass strings, a regular bass pickup, and two stacked capacitive slide and pressure sensors. Plucking the strings triggers a synth tone that is manipulated in pitch and volume using the slide and pressure sensors. The slide sensor changes the pitch of the tone, whereas altering the pressure modifies the instrument’s timbre. Both instruments use an external computer for sound generation, sound manipulation, and generating a 3D sound mapping. Performers and audience were all situated within a custom-built 3D audio setup consisting of eight speakers in a square layout.

When played solo, the instruments respond in a fairly predictable manner. However, when played simultaneously, each of them is subject to some control by the other: (1) by hitting the augmented pads on the conga skin, the percussive instrument adds pitch shifts to the two-stringed synth; (2) by playing louder, the two-stringed synth decreases the level of the percussive instrument and applies a low-pass filter. This reciprocal instrument parameter influence changes the individual instrument response and requires the players to shift their attention away from their own playing towards an anticipatory performative stance, closely following the ensemble performance and the emergent, collaborative musical creation.
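The reciprocal influence described above can be sketched as two small mapping functions. This is an illustrative Python sketch under stated assumptions, not the original implementation: the state classes, the per-pad shift amounts, the attenuation depth, and the cutoff range are all hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class SynthState:
    """Parameters of the two-stringed synth that the drum can alter."""
    pitch_shift_semitones: float = 0.0


@dataclass
class DrumState:
    """Parameters of the percussive instrument that the synth can alter."""
    level: float = 1.0                # output gain, 0..1
    lowpass_cutoff_hz: float = 20000.0


# Hypothetical pitch shift per touch pad (pad index -> semitones).
PAD_PITCH_SHIFTS = {0: -2.0, 1: 3.0, 2: 7.0}

# Hypothetical range for the low-pass filter cutoff.
CUTOFF_OPEN_HZ = 20000.0
CUTOFF_CLOSED_HZ = 500.0


def apply_pad_hit(synth: SynthState, pad_index: int) -> SynthState:
    """Influence (1): a pad hit on the drum shifts the synth's pitch."""
    synth.pitch_shift_semitones = PAD_PITCH_SHIFTS[pad_index]
    return synth


def apply_synth_loudness(drum: DrumState, loudness: float) -> DrumState:
    """Influence (2): a louder synth lowers the drum level and closes a low-pass filter."""
    loudness = min(max(loudness, 0.0), 1.0)  # clamp to 0..1
    drum.level = 1.0 - 0.7 * loudness        # assumed maximum attenuation of 70%
    # Interpolate the cutoff exponentially so the sweep sounds even to the ear.
    ratio = CUTOFF_CLOSED_HZ / CUTOFF_OPEN_HZ
    drum.lowpass_cutoff_hz = CUTOFF_OPEN_HZ * ratio ** loudness
    return drum
```

The point of the sketch is that neither player can fully predict their own instrument's response: each state object is written to by the other instrument's input.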

Starting Points for the Designing GMIS (DGMIS) Program

This example GMIS did not just come about accidentally but was the result of the first incarnation of our DGMIS program, a design education activity that ultimately lasted for over a decade. In the following pages we give a description of the academic journey that ensued, which was based on three main starting points. Here we first describe these starting points and their implications on the DGMIS setup.

Dynamic Design Space with a Shared Goal and Aesthetics

Through DGMIS we want to challenge designers to construct a dynamic design space with a shared goal that satisfies their collective sense of aesthetics, both musically and in terms of the interaction/performance. This means that the designers involved should accept that the design space can (and will) change over the course of the design activity, and that they should be designing for, and coming to grips with, an aesthetics that is not predominantly determined by the all-powerful designer nor the simple-minded user but emerges from the interplay between both.

Consequently, this means that even though everyone involved individually prepares an instrument prototype or a performative intervention, the actual design happens when they face each other and experience the actual musical multi-agent system. As such, rather than predetermining outcomes, the designers involved create an ephemeral experience that requires continuous adaptation based on both an individual and a collective sense of quality and direction. Such a design session can be intensive, short-lived, and full of reflection and learning. The performed musical experiences that demark important turns in the design process all help the participant-performers align their sense of aesthetics.

Designing with Dependencies

In a highly dynamic system such as a GMIS all individual designs depend on all other designs, making the collective system continuously in progress. Consequently, individual designs inherently rely on each other, not only in their performance but also in driving the design process. Only when one design makes a significant step forward, another can benefit. In other words, if all designer-performers wait for each other’s input, we have a deadlock situation, and no one will be able to experience the full reciprocal system behavior. Ironically, in DGMIS the only way to help each other is to interfere with each other.

In the DGMIS setup, therefore, we intend the design process to move from individual designs to the collective try-out, back to the individual designs, and so forth. This should happen in rapid succession to support the understanding that the created experience needs to evolve constantly. In this sense, the DGMIS setup represents a faster progression between phases of a conventional design process, while upholding a higher level of complexity that cannot be easily reduced in the specification or interpretation of the design brief. As a result of this interdependency, all design action requires additional communication: which data the individual designs exchange and how they do that, how often they share instrument state and parameters, and whether the data streams are visible to the human actors in the system.

Nurturing Complexity

As one may read from all this, being part of DGMIS requires being able to cope with complexity and uncertainty. Instead of looking for ways to reduce these, we believe in embracing and nurturing both. We believe that in systems design, premature (and often misguided) convergence and reduction is (almost) a cardinal sin. Experiencing complexity is instrumental in reaching solutions that eventually apply to complex situations and we see group music improvisation as a good practice context for this. The components of a GMIS, i.e., the individual instruments, need to be designed as a means to produce sound and allow for expression. At the same time, they need to compose into a performative system. From a technology perspective that means that they produce and consume data as a way of parameterizing the sound generation. The individual designs need to connect to a common data infrastructure and be able to send and receive real-time data to and from individual nodes (a soloist) or groups of other instruments (a section of an orchestra). From an interaction design perspective, DGMIS means creating individual interfaces that contribute to a collective experience.

Summarizing, DGMIS is a challenging design activity that is highly immersive and process-aware, rich in communication and relies on developing a keen sense of complexity as a design and process quality that is worth nurturing and designing with.

A Decade of DGMIS

Over the span of a decade, we ran three types of DGMIS activities in different educational contexts. We started with semester-long activities that over the years evolved into single-day pressure cookers. This process of reduction was on the one hand triggered by variations in the educational contexts, but also by experiential insights that allowed us to progress faster within a shorter time frame.

The three variants of DGMIS were: (1) as a semester-long project, (2) as a workshop lasting one or two weeks, and (3) as a one-day pressure cooker. Although they varied in duration and depth, the overall format for the three variants was always the same:

- We always start off with immediate experience of music improvisation (e.g., through sensitizing activities we dubbed telephone jam sessions).

- After that, about half of the activity is dedicated to hands-on explorations in which musical instruments and players are gradually coupled in pairs.

- The remaining time is then dedicated to increasing system complexity (and unpredictability), e.g., by making increasing connections.

Throughout DGMIS we organize frequent intermediate jam-like performances of increasing length, as well as final ‘concert-like’ performances. Support is provided in the form of workshops, a technological infrastructure (see the section Intermission: Platforming for data communication), technical, musical and design related support, as well as frequent feedback sessions and group discussions. An overview of the three DGMIS variants is presented in Table 1. It outlines the duration, iterations, type of participants, employed technology and results of the DGMIS activities.

Table 1. Structured overview of GMIS activities.

| | Projects | Workshops | Pressure cookers |
| --- | --- | --- | --- |
| Time span | One semester (16 weeks) | One or two weeks | 1-day, half-day |
| Number of times run | 4 consecutive semesters in 3 format iterations (2nd iteration format ran twice) | 4 workshops between 2013 and 2017 | 1 pressure cooker |
| Participants | Undergraduate design students | Undergraduate and graduate students in international design programs | Undergraduate and graduate design students |
| Technology | Evolving infrastructure with limited example code provided; technical support and personal coaching. | Full-fledged infrastructure with example code; working implementations built on Arduino and Leap Motion; technical support and personal coaching. | Full-fledged infrastructure with example code; working implementations built on Arduino and Leap Motion; technical support and personal coaching. |
| Results | Fully working prototypes of individual designs, yet limited in working as a system. | Fully working prototypes in group settings with different designs per group, working as true GMIS as intended, with a designed and live-performed experience. | Fully working prototypes resulting from instruction, working as true GMIS as intended, with a designed and live-performed experience. |

We describe the variants in more detail in the following sections.

DGMIS as a One-Semester Design Project

The first activities of DGMIS were designed to fit with our curricular semester structure and they were open to groups of Industrial Design Bachelor’s students and individual Master’s students. We conducted three iterations of this setup over four semesters. Every iteration involved a kick-off briefing or introductory lecture outlining the activity’s starting points, followed by weekly coaching sessions and/or jam sessions, and wrapped up with a closing demo day.

The first iteration of DGMIS involved only a single third-year Bachelor student, who worked on DGMIS full-time with weekly coach meetings. This iteration served mainly as a first exploration. To get the most out of this iteration we implemented a structure of weekly meetings, in which the authors could try out that week’s design iteration and discuss the design. From very early on the student had two rudimentary instrument prototypes that could be played and critiqued. Part of the meeting was typically spent on discussing the design, the design decisions, and how they related to our starting points. Part of the discussion was aimed at exploring good design approaches, as well as predicting what other students would require in the case of running DGMIS on a larger scale. This first iteration resulted in the system described earlier in this article in the section Example GMIS. More about this design can be read in (Hengeveld et al., 2014).

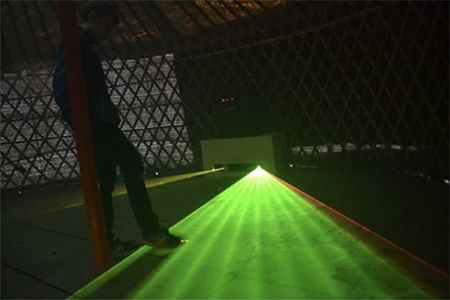

For the second iteration, which ran twice in consecutive semesters, we prepared the DGMIS project for groups of Bachelor students. We defined one primary starting point, which was that all students (or groups of students) were to design a digital (or digitally mediated) musical instrument that would be able to influence the behavior of at least one other musical instrument. On average we ended up with systems of 10 musical instruments, developed either in groups of three students or by individual students. These two iterations resulted in several interesting designs, e.g., ones based on laser dance floors (Junggeburth et al., 2013; Van Hout et al., 2014), see Figure 3, on generative synthesis (Band et al., 2014), or on physiological input (Ostos Rios et al., 2013; Rios et al., 2016). In some cases, we included the exploration of self-invented notation systems (Hengeveld, 2015).

Figure 3. Experio (Junggeburth et al., 2013), a laser-triggered collaborative sample mixer.

Although the designs were musically expressive and appealing, they were often still conceptually stand-alone designs; typically, the teams did not reach the final step of a collective emergent music experience. Students seldom self-organized, and hardly considered group design sessions. They often went into overthinking the concept.

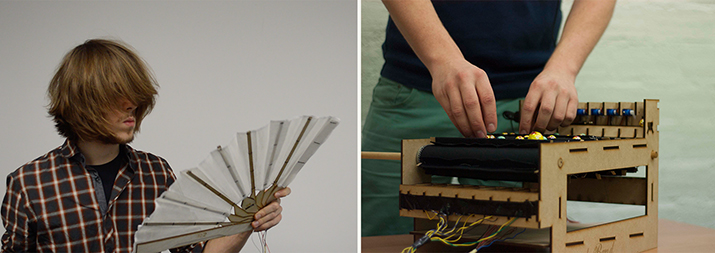

To counter this, we foregrounded the performative aspect of the project in the third iteration, as well as our starting point that the students should become a system themselves (i.e., they should adopt a more bottom-up approach from the get-go rather than force-fitting their individual designs as an afterthought). We dropped all musical theory from the DGMIS briefing as we noticed that it narrowed down the design space rather than opening it up. We emphasized expressivity in input and output and introduced a make first, think later approach to the project. By forcing the students to first explore in the medium itself they had to face the technical complexity of translating expressive sensor input to corresponding expressive sound output. As a consequence, student groups worked on subsystems rather than musical instruments. This resulted in quite satisfying—albeit limited—systemically networked musical interfaces that had their own expressivity in input (see Figure 4), but a shared expressivity in musical output.

Figure 4. Windt (left, by Tijs Duel) is an expressive soundscape player with two states (fan open, fan closed) and was linked to Loopende Band (right, by Bart Jakobs and Bas Bakx), a conveyor belt-based loop station.

DGMIS as a Design Workshop

Intermission: Platforming for Data Communication

Over the course of the semesters we identified technical pain points that severely hampered our students in creating truly networked systems of instruments. To overcome these, we introduced template implementations of basic communication functionality as building blocks for prototyping. These implementations used a design middleware called OOCSI (Funk, 2019), which implements all network communication for the designed artefacts in a GMIS. Using OOCSI the connected artefacts talk to each other by means of messages that are sent and received in real-time. Messages can contain an arbitrary number of data items, which ensures that different musical parameters can be sent and received together. Technically, the middleware served as a hub that the individual components of the GMIS could subscribe to and post data streams to. This means that when played, each instrument would issue a small piece of data (comparable to a note-on event in MIDI), which results in a constant stream of data that are routed to and received by other GMIS artefacts. We instructed our participants to collectively agree on ways to communicate between instruments. They converged on a few central conventions, including lower-case labels and keys, continuous values in the range [0..1] (as floating point numbers) and clear, expressive naming of who generated data about which sound parameter, e.g., group1_parameter1, or volume_group6.
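The message conventions above can be made concrete with a small sketch. The code below does not use the OOCSI API; the `Hub` class is a hypothetical in-memory stand-in for the middleware, and `validate_message`, the channel name, and the example keys are assumptions that only illustrate the agreed conventions (lower-case keys, floating point values in [0..1], names that say who produced which parameter).

```python
import re
from collections import defaultdict

# Lower-case letters, digits and underscores only, e.g. "volume_group6".
KEY_PATTERN = re.compile(r"[a-z0-9_]+")


def validate_message(message):
    """Raise ValueError if a message breaks the agreed conventions."""
    for key, value in message.items():
        if not KEY_PATTERN.fullmatch(key):
            raise ValueError(f"key {key!r} must be lower-case with underscores")
        if not isinstance(value, float) or not 0.0 <= value <= 1.0:
            raise ValueError(f"value for {key!r} must be a float in [0, 1]")
    return message


class Hub:
    """Hypothetical in-memory hub: instruments subscribe to and post on channels."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, channel, callback):
        self._subscribers[channel].append(callback)

    def send(self, channel, message):
        validate_message(message)
        for callback in self._subscribers[channel]:
            callback(dict(message))  # each receiver gets its own copy


# Example: group 1 sends two parameters together to group 2's channel.
received = []
hub = Hub()
hub.subscribe("group2", received.append)
hub.send("group2", {"volume_group1": 0.8, "group1_parameter1": 0.25})
```

Validating at the hub means a malformed message fails at the sender rather than producing an unexplained sound change at the receiver, which is one plausible reason for agreeing on such conventions early.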

Design Workshop Setup

Having run DGMIS as semester projects for several consecutive semesters in different iterations, we compressed the activity into a one to two-week workshop. As said, the overall workshop setup did not differ much from the semester setup: we first introduced the participating students to the context of group music improvisation and the DGMIS starting points, after which half the time was used for exploration, and the remaining time for increasing complexity. We ran the workshop four times between 2013 and 2017: twice in Umeå (Sweden), and once each in Beijing and Xi’an (PRC). The first workshop took place in Umeå as a 5-day activity. For the second, in Beijing, we planned 10 days (including a more thorough technology introduction ahead of the DGMIS activity); for the third, again in Umeå, we planned 5 days; and the last workshop, in Xi’an, took 8 days, again with a more thorough technical introduction.

The participants in the workshops were predominantly undergraduate and graduate design students. The participant groups ranged from 10 to 14 participants in Umeå to 40 to 50 participants in China. We divided the participants into groups of up to five members to facilitate cohesion, cooperation and learning outcomes. The main part of the workshops consisted of hands-on design prototyping sessions that were actively supported by the authors, e.g., by giving feedback or even helping with the implementation of sometimes quite technical prototypes. All workshops ended with a final concert (e.g., Figure 5), sometimes lasting a whole hour. Depending on the location and availability, the concerts took place in a lecture hall, a formal ceremonial hall, or even the local municipal theater.

Figure 5. One of the workshop concerts. Four participants perform their improvised 10-minute set using a Leap Motion-based GMIS.

As a main result from all the workshops, the final stage was successfully achieved: we witnessed several concerts that were practiced and performed in a professional manner. The student designs could be used by all participants within a band and each design communicated with other designs, often forming a data daisy chain. This refers to the connectivity topology that relayed, for instance, data from group 1 to group 2, group 2 to 3, group 3 to 4, and finally data from group 4 to group 1 again. We ran the later workshops with a network visualization of the data flow built in OOCSI (Funk, 2019) to be able to spot problems and intervene quickly from backstage.
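The daisy-chain topology described above can be sketched as a simple routing table in which each group forwards its data to the next group and the last group closes the ring. This is an illustrative sketch only; the function names `daisy_chain` and `is_closed_ring` and the group labels are assumptions and are not taken from the workshop software.

```python
from typing import Dict, List


def daisy_chain(groups: List[str]) -> Dict[str, str]:
    """Map each group to the group that receives its data, closing the ring."""
    if len(groups) < 2:
        raise ValueError("a daisy chain needs at least two groups")
    if len(set(groups)) != len(groups):
        raise ValueError("group names must be unique")
    return {
        group: groups[(index + 1) % len(groups)]
        for index, group in enumerate(groups)
    }


def is_closed_ring(routes: Dict[str, str]) -> bool:
    """Check that following the routes visits every group once and returns to the start."""
    if not routes:
        return False
    start = next(iter(routes))
    seen = set()
    current = start
    while current not in seen:
        if current not in routes:
            return False  # data reaches a group that forwards nowhere
        seen.add(current)
        current = routes[current]
    return current == start and len(seen) == len(routes)


if __name__ == "__main__":
    routes = daisy_chain(["group1", "group2", "group3", "group4"])
    for sender, receiver in routes.items():
        print(f"{sender} -> {receiver}")
    print("closed ring:", is_closed_ring(routes))
```

A check such as `is_closed_ring` is the kind of test a backstage overview could run to spot a broken link, for example two pairs of groups that only talk to each other.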

DGMIS as a Pressure Cooker

In our ambition to further reduce the time-to-concert, we compressed the format further into a one-day, and even half-day, pressure cooker. This form of DGMIS was planned as a workshop for an international conference on design (Funk & Hengeveld, 2018) and later for a local student festival. While the former was cancelled, the latter took place with 13 undergraduate and graduate Industrial Design students. The briefing and introduction remained the same compared to the workshop settings. However, the technology was comparatively streamlined: the student teams received a working software sketch together with a Leap Motion device that could be further designed by means of software effects, interaction parameters, hand tracking variations and of course the interaction with other instruments by means of data exchange. The result of this activity was a 30-minute concert on a professional stage, in which four teams performed four pieces.

Reflecting on a Decade of DGMIS

Now, what did this decade-long exploration bring us in terms of (educating young designers in) the process of designing Systems of Expressive Things? In the following, we reflect on our experiences designing, running and redesigning DGMIS over the years.

General Observations

DGMIS is Hell for Control Freaks

The most salient observation from all DGMIS activities was how participants were generally overwhelmed by the complexity and unpredictability of designing open-ended GMIS. Despite our explicit starting point to embrace this uncertainty and just go for it, we could see that almost all participants experienced the conceptual and organizational consequences of this approach as daunting. Most did not have prior experience with allowing external influences on their own design’s functioning and resorted to designing in isolation. Their intuitive reaction was to specify a GMIS, ignoring the idea of improvisation being the system’s raison d’être. Consequently, they got stuck (both individually and as a group).

Coping Fallacy: Thinking is Free, Making and Doing are Sunk Costs

Especially in the earlier DGMIS activities we observed that our students were reluctant to start with making and performing. Most of them saw performance as something you do after designing rather than as another form of designing. They deliberately postponed making explorative models or prototypes because they wanted to be sure that the time required to make something work would be worth it. Consequently, they lost themselves in comprehensive analyses of the complex system and its emergent interactions first, realizing too late that the collective system design had moved on. In other words, what our students did not realize is that you only know if it is worth it after you’ve done it. We could clearly see that the students we considered makers saved much time compared to their peers, although even they were often reluctant to move towards performing. Ironically, in their post-activity evaluations all students indicated that they would likely have progressed much sooner had they engaged in making and performing activities earlier.

Improvisation, while Feeling Musically Inadequate

A factor that seemed to contribute to the aforementioned reluctance was most students’ perception of what music is. Typically, about half of the students in a group had some form of musical training, the other half none at all. Almost all of them, though, seemed to have grown up with the understanding that music is what you hear on the radio or Spotify, and amateur approaches to music would not count (or even exist). On top of that, improvisation as such proved to be a difficult concept in itself.

Corrective Measures

Make First, Think Later

As a response to the reluctance to make and perform we applied a more rigid structure to the later DGMIS activities, emphasizing doing over thinking and allowing emergent behaviour to surface. For example, in the final variation of the semester version we dedicated the entire first half to designing expressive musical output in response to expressive sensor input (e.g., how does turning a dial gently sound, compared to turning it aggressively?). Only then did we start grouping students and allowing them to think about the actual form of the whole instrument and GMIS. This seemed to solve the original problem of reluctance to make and resulted in fewer traditional instruments, but it also resulted in (a) the students feeling creatively limited; (b) the activity becoming tech heavy instead of performance heavy; and (c) a reduction in the group aspect of the project. We addressed these issues in the workshop and pressure cooker versions, where we restricted the technological platform even further, only allowing students to work with Leap Motion sensors and limiting the input to a restricted set of measurements (e.g., whole hands only). Also, we implemented regular jam sessions in the schedule (every two or three weeks during the semester version, every day or twice a day during the workshops) during which all students had to perform.
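As an illustration of the dial example mentioned above, one way to distinguish gentle from aggressive turning is to look at how fast the dial position changes and to map that speed to an intensity in the range [0..1]. The sketch below is a hypothetical mapping, not one used by the students; the function names, the sampling interval `dt`, and the `max_speed` ceiling are assumptions, and dial positions are assumed to be already normalized.

```python
from typing import List, Sequence


def turning_speeds(positions: Sequence[float], dt: float) -> List[float]:
    """Absolute rate of change between successive dial positions (per second)."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return [abs(b - a) / dt for a, b in zip(positions, positions[1:])]


def gesture_intensity(positions: Sequence[float], dt: float, max_speed: float) -> float:
    """Map the mean turning speed of a gesture to an intensity in [0, 1]."""
    if max_speed <= 0:
        raise ValueError("max_speed must be positive")
    speeds = turning_speeds(positions, dt)
    if not speeds:
        return 0.0  # a single sample carries no movement information
    mean_speed = sum(speeds) / len(speeds)
    return min(1.0, mean_speed / max_speed)


if __name__ == "__main__":
    gentle = [0.0, 0.01, 0.02, 0.03]      # slow, steady turn
    aggressive = [0.0, 0.3, 0.1, 0.6]     # fast, jerky turn
    print("gentle:", gesture_intensity(gentle, dt=0.1, max_speed=5.0))
    print("aggressive:", gesture_intensity(aggressive, dt=0.1, max_speed=5.0))
```

The resulting intensity could then drive a sound parameter such as volume or filter brightness, so that the same dial sounds different depending on how it is played.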

Enforcing Immediacy

While complexity was expected and to some extent intended in our DGMIS activities, we were surprised that the participants had difficulties coming to grips with a notion that is omnipresent in music performance, i.e., immediacy: responding to what happens in the here and now. Immediacy comes quite naturally to a musician; it is inherent to the fact that live music is, well, live. One can reduce error through rehearsals, but ultimately the musical outcome of any performance will always be different to the previous one. This is not only part of the fun, but it is to some extent what gives value to music performance. In contrast, we observed that DGMIS participants struggled to embrace this notion. They found it difficult to organize and synchronize their design processes, including the cut-off point when they would freeze their individual instrument designs and collectively move to the design of the musical experience. To tackle this, we changed the setup of the DGMIS activities so that the students had to implement prototypes that worked with live data from the get-go, just to be able to participate in the design sessions. Prototypes needed to be experience-ready uncomfortably early in the process and iterating on them should not take more than 30 minutes. Enforcing connectivity and immediacy in this way meant that students simply had no choice but to embrace the inherent unexpectedness of systems of instruments.

Opening Up and Constraining

Furthermore, we applied a range of openings and constraints to all DGMIS activities. We implemented several content changes over the years. First, we introduced students to more abstract branches of music such as the work of John Cage, and invited guest musicians that worked with noise, granular synthesis, sequencers, and generative music, all with the purpose of broadening the understanding of music and musical performance. Secondly, we refrained from providing any basic music theory. We forbade traditional musical instruments and pushed more towards novel digital instruments to steer students away from too familiar sounds and styles. Thirdly, we reduced the time available for learning about music and composition, for ideating on paper, and for thinking. Instead we included more hands-on musical activities such as the telephone jam sessions. Finally, as mentioned above, we repeatedly stressed the importance of designing the group music improvisation experience, to prevent participants from focusing on individual musical instrument designs and neglecting the performative parts of the design research projects.

Lessons Learned from DGMIS

Designing Top Down or Bottom Up?

When looking back at all the instances of DGMIS one recurring struggle was how to align the individual performers and technologies to ensure a positive project outcome. Roughly, we observed two approaches: (1) a bottom-up approach in which the individual designs when put together constitute emergent system performance. In this approach the system more or less aligns itself; and (2) a top-down approach in which the system components would be designed to work towards a desired system performance. In the latter approach alignment is more or less enforced by predetermined rules or success criteria.

Bottom-up approaches dominated in our observations over the years, which corresponds to the original objective of DGMIS to inform the domain of systems design from the domain of improvised music. The top-down approach appeared only once, in the last DGMIS workshop, when the students went for a design once, then cookie-cutter approach: the overall system was designed as a group, after which all aspects of one system component were delegated to smaller sub-groups to design and implement. Finally, several copies of this system component were manufactured with small variations. Later in the workshop it was even decided to allocate hardware and software to two separate sub-groups that would build and program reusable modules for the two performing groups.

In retrospect, the bottom-up approach generally resulted in more design variety, more creativity and bigger conceptual leaps. The downside was more friction and confusion, leading to systems that had to be force-fit together. This led to unforeseen decisions, changes in original success criteria and generally bigger conceptual shifts without resulting in ‘unsuccessful’ systems. On the contrary, the bottom-up approaches generally resulted in very interesting and novel musical experiences, because the participants had to embrace a more avant-garde take on digitally mediated music.

The top-down approach was a smoother experience for all involved (including the authors) as it demanded much less problem solving, reframing or reinventing. The instruments and systems were more predictable, easier to operate and resulted in decent experiences. The music was generally more accessible and there was an easier role for the group conductor. However, it was clear that the participants learned less and made only smaller conceptual leaps, which rendered the experience of systems design less relevant and visionary.

Interestingly, when we consider jazz improvisation in this discussion, one could argue that jazz improvisation is neither top-down nor bottom-up but rather takes on a more hybrid approach. Of course the individual players operate in a more bottom-up fashion as their playing is not pre-designed; it happens in the moment and is largely dependent on their own take on music. However, as said in the introduction to this article, jazz improvisation happens within a space of shared understanding; all the individual players know what falls within a successful outcome and all embrace this shared goal. As such, jazz improvisation is typically not aimed at reaching a predictable result, but rather is about working within/towards a scope of outcomes that are all valid. This can be seen as the top-down component.

Concluding, we argue that the results of the DGMIS process can be tweaked: a more performative approach will demand more openness for unexpected success criteria, whereas the more planned versions are more accessible but less provocative conceptually. Embracing complexity is an attitude as much as it is a skill, and both can be taught.

Leveling the Playing Field

In addition, as the form of DGMIS activities evolved, we invested in platforming as an integral part of the DGMIS design process. This platforming involved introducing a shared data exchange platform, and at a later stage a fully working example instrument that participants in the shorter workshops had to modify instead of designing and prototyping from scratch. Through both measures the design space was radically more constrained.

We can see how this can be interpreted as contrary to our ambition to embrace complexity (see our three DGMIS starting points earlier in this article). However, we have experienced that these platforming endeavors don’t eliminate complexity, but rather scope it; platforming allowed our students (a) to embrace the complexities that were more interesting to us, the authors; and (b) to cut some corners in getting stuff to work so that they could spend their limited resources on the experiential and performative aspects of their GMIS design. The more technical scaffolding we provided, the shorter we could prescribe the duration of iterations and the more successful the design teams became. The additional scaffolding significantly reduced the time to working with (experiential) feedback loops (Bahn et al., 2001), especially as we placed more emphasis on the desired performative outcomes of the design processes.

Both the process complexity and the conceptual challenges showed that DGMIS requires a good balance between mocking up and building up, finding the right cutoff point for investing in the development of a working prototype. We combined this with organized practice sessions, in which the participants had to perform with what they had brought, to shift the focus from the individual instrument design to designing the collective performative experience.

As such, one significant insight we take from DGMIS is that scaffolding the system components can make a big difference in letting the players focus on performative aspects of a dynamic system.

Designing for and with Data

In DGMIS, we have always considered data as an integral design material, and as an essential component of the designed performance space; data formed the basis for instrument behavior, which always included reciprocal instrument interference. However, we observed that designing for and with data is not straightforward. In the context of GMIS, not only the instruments but also the data itself had to be designed from scratch in the sense that participants had to create data sources and the meta-data to be able to communicate with other teams for an expressive context. We observed that our students experienced difficulties in understanding how the sensor data that their instruments would generate could be used, and they quickly started to externalize this information as visual overviews on paper. The sketches started with the structure of the system, connecting individual musical instruments, and then served as a communication tool to talk about the data that would eventually flow between the instruments. Yet, the only way in which they could understand the role of the instrument data was through developing actual prototypes that allowed them to experience the effect of data. Only then could they make further choices and decisions. Once the real-time data were contextualized in how someone uses an instrument and what sound is produced, abstract meta-data and vague semantics would not matter much anymore.

This is different to how we typically use data in design, which follows the conventional triad of Data → Knowledge → Action: we analyse data, by which we understand a phenomenon, which allows us to take design action. In DGMIS, however, the relation is Data → Experience → Action, in which the embodiment of the data in both input (playing the GMIS) and output (hearing the collective result and one’s own influence) is critical. This is especially interesting as data is not used to model something, but rather used intuitively to shape an experience. After all, in the GMIS instruments data is both generated (resulting in musical output) and received by another instrument, meaning that by changing the form in which data is broadcast you also give form to the way your data can be interpreted (or not). This relates to the apparent disconnect between discrete, precise values and the continuity of expressivity. We resolve this through active exploration in the intended medium, i.e., music. As such, DGMIS can be understood as an experience-based way of designing with data, that is local, self-generated, self-interpreted, and a reflection of a collective experience.

Generalising: A Five-Step Approach

To round off this reflection on a decade of DGMIS, can we translate and apply our experiences in other domains than musical expression? Not directly, but as a first step towards translation, we can look for traits and qualities of GMIS in other domains. For example, the smart home and the smart workspace are both contexts in which multiple users co-perform through shared engagement with technology, and in which the systems themselves have agency: they control various actuators and automate a part of the day-to-day experience. Also we can (and should) consciously consider these contexts as performative environments, and can start considering contextual improvisation qualities, e.g., considering daily routines (= making breakfast, or briefing a team), rituals (= leaving home or eating lunch together), unforeseen events (= visitors or emergency calls), and a sense of harmony (being in consonance or dissonance) and closure (at the end = in the evening or finalizing an important project). These everyday examples show that higher-order social patterns appear in other domains and we can speculate that DGMIS can give new impulses to design for this.

What is however central to proceeding from these questions is the (re-)identification and nurturing of complexity in a given new domain. What made DGMIS challenging but also insightful for designers was the constant presence of complexity and unpredictability that could be neither resolved nor designed out. As a framework for working with this complexity we suggest (1) starting with a mapping of performative feedback loops bottom-up: which human or technology actors have agency in the system and what drives them? (2) Extending this mapping considering the top-down drivers of the system (for lack of a better term: the system’s success criteria) and trying to identify the performative roles that different human participants might assume in different practices. After this, we can (3) start exploring means for human and technological expression, and (4) add a data perspective to this to allow for mediated data flows between the different entities in the system (first conceptual, then technical). Finally, (5) we feel that designing within complexity requires constant attention to the collective process and collective experience of it, so that the target qualities of the system can be continuously negotiated and calibrated as the designers proceed in (re-)designing a domain-specific system.

We emphasise collective experience here as it is in this experience that most of the systemic sensemaking takes place. Designing for complex, unstable, performative systems can in our experience not be solved on paper alone, but requires a constant experiential confrontation. This five-step process as extracted from the GMIS workshops sequence defines and relates the predominant roles and artifacts. However, we have not attempted this step and time will tell whether such a translation can be implemented in a straightforward manner.

Conclusion

In this article, we have described a decade-long journey of researching (the education of) Group Music Improvisation Systems (GMIS). Our experiences applying GMIS in Industrial Design education range from months-long design projects to workshops and highly compressed pressure-cooker instances. We worked with different constraints and supportive means such as different introductions and framings, a technological platform, and—depending on the time available—more or less scripted templates of what could be designed.

As much as GMIS seem removed from many real-world application areas (perhaps excluding entertainment) they capture essential aspects of designing for systems that are used in concert and through multi-user interaction. GMIS can serve as educational tools engaging students and teachers, resulting in tangible demonstrable outcomes and encouraging creative design in highly technology-mediated forms of expression. In this role, GMIS predominantly allow for designing in depth and provide a new archetype of expressive interaction that is deliberately different from games and play; they embody constructive challenges beyond predetermined paths and independent from rule systems that games rely on. The design of GMIS is guided by qualities determined by the collective, a shared sense of aesthetics that are accessible, and yet profound. A process of constant zoom-in and zoom-out is required to stay in touch with the individual designs that compose a system and the designed experience. For any designer involved, it means to balance personal design ambitions against the group momentum and expectations of what the audience might feel and react to.

Future work will focus on the extension of DGMIS to different application areas beyond musical performance, for instance, as an approach to engage with everyday systems in home and workplace, in mobility and complex machine control. The fundamental design challenges remain: how do human groups interact and creatively engage in a common objective mediated and facilitated through a network of machines, a system of increasingly smarter things, that we can only influence to limited degrees? How do we translate and extend social processes of negotiation, agreement and inspiration to such settings and share background, expertise and intentions in constructing experiences for us and others?

Finally, DGMIS was an exploration into the design of unstable systems, which is seldom addressed in design education in the depth of working prototypes and performative experiences. It was a long-term challenge of how to design the systems and also the process leading toward unstable systems: through nurturing complexity and establishing a dual perspective on both the designed artifacts and the emergence of systemic qualities. This, in our view, is what systems design is about, and what contemporary design education needs to focus on.

Acknowledgments

We thank all the students for their participation in this decade-long journey, as well as all project coaches. A special thank you to Christoffel Kuenen (Umeå Institute of Design, Umeå), Zhiyong Fu (Tsinghua University, Beijing), and Dengkai Chen and Mingjiu Yu (Northwestern Polytechnical University, Xi’an) for generously hosting us over the years.

References

- Alcántara, J. M., Markopoulos, P., & Funk, M. (2015). Social media as ad hoc design collaboration tools. In Proceedings of the European Conference on Cognitive Ergonomics (Article No. 8). New York, NY: ACM. https://doi.org/10.1145/2788412.2788420

- Atzori, L., Iera, A., & Morabito, G. (2010). The internet of things: A survey. Computer Networks, 54(15), 2787-2805. https://doi.org/10.1016/j.comnet.2010.05.010

- Bahn, C., Hahn, T., & Trueman, D. (2001). Physicality and feedback: A focus on the body in the performance of electronic music. In Proceedings of the International Computer Music Conference (pp. 44-51). Ann Arbor, MI: Michigan Publishing.

- Band, J., Funk, M., Peters, P., & Hengeveld, B. (2014). Varianish: Jamming with pattern repetition. EAI Endorsed Transactions on Creative Technologies, 1(1), No. E3. https://doi.org/10.4108/ct.1.1.e3

- Beaton, B., Harrison, S., & Tatar, D. (2010). Digital drumming: A study of co-located, highly coordinated, dyadic collaboration. In Proceedings of the Conference on Human Factors in Computing Systems (pp. 1417-1426). New York, NY: ACM. https://doi.org/10.1145/1753326.1753538

- Bengler, B., & Bryan-Kinns, N. (2013). Designing collaborative musical experiences for broad audiences. In Proceedings of the 9th Conference on Creativity & Cognition (pp. 234-242). New York, NY: ACM. https://doi.org/10.1145/2466627.2466633

- Bevilacqua, F., Baschet, F., & Lemouton, S. (2012). The augmented string quartet: Experiments and gesture following. Journal of New Music Research, 41(1), 103-119. https://doi.org/10.1080/09298215.2011.647823

- Bigos, D. (2017). The industrial internet of things is full of transformational potential. Retrieved May 10, 2021, from https://www.ibm.com/blogs/internet-of-things/iiot-has-transformational-potential/

- Blaine, T., & Fels, S. (2003). Collaborative musical experiences for novices. Journal of New Music Research, 32(4), 411-428. https://doi.org/10.1076/jnmr.32.4.411.18850

- Blaine, T., & Perkis, T. (2000). The Jam-O-Drum interactive music system: A study in interaction design. In Proceedings of the 3rd Conference on Designing Interactive Systems (pp. 165-173). New York, NY: ACM. https://doi.org/10.1145/347642.347705

- Bowers, J., & Hellström, S. O. (2000). Simple interfaces to complex sound in improvised music. In Proceedings of the Conference on Human Factors in Computing Systems (Ext. Abs., pp. 125-126). New York, NY: ACM. https://doi.org/10.1145/633292.633364

- Brown, A. R., & Sorensen, A. C. (2007). Aa-cell in practice: An approach to musical live coding. In Proceedings of the International Computer Music Conference (pp. 292-299). Copenhagen, Denmark: International Computer Music Association.

- Chan, W. -Y., Qu, H., & Mak, W. -H. (2010). Visualizing the semantic structure in classical music works. IEEE Transactions on Visualization and Computer Graphics, 16(1), 161-173. https://doi.org/10.1109/TVCG.2009.63

- Chew, E. (2005). Foreword to special issue on music visualization. Computers in Entertainment, 3(4), 1-3. https://doi.org/10.1145/1095534.1095541

- Davidson, J. W. (1997). The social in musical performance. In A. C. North & D. J. Hargreaves (Eds.), The social psychology of music (pp. 209-228). Oxford, UK: Oxford University Press.

- Endrjukaite, T., & Kosugi, N. (2012). Music visualization technique of repetitive structure representation to support intuitive estimation of music affinity and lightness. Journal of Mobile Multimedia, 8(1), 49-71.

- Fels, S. (2004). Designing for intimacy: Creating new interfaces for musical expression. Proceedings of the IEEE, 92(4), 672-685. https://doi.org/10.1109/jproc.2004.825887

- Fonteles, J. H., Rodrigues, M. A. F., & Basso, V. E. D. (2013). Creating and evaluating a particle system for music visualization. Journal of Visual Languages & Computing, 24(6), 472-482. https://doi.org/10.1016/j.jvlc.2013.10.002

- Fortino, G., Russo, W., Savaglio, C., Shen, W., & Zhou, M. (2018). Agent-oriented cooperative smart objects: From IoT system design to implementation. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 48(11), 1939-1956. https://doi.org/10.1109/TSMC.2017.2780618

- Frens, J. W., & Hengeveld, B. J. (2013). To make is to grasp. In Proceedings of the 5th International Congress of International Association of Societies of Design Research (pp. 26-30). Tokyo, Japan: IASDR.

- Fritsch, E., Shklovski, I., & Douglas-Jones, R. (2018). Calling for a revolution: An analysis of IoT manifestos. In Proceedings of the Conference on Human Factors in Computing Systems (pp. 1-13). New York, NY: ACM. https://doi.org/10.1145/3173574.3173876

- Funk, M. (2019, May 22). OOCSI. Zenodo. https://doi.org/10.5281/zenodo.1321219

- Funk, M., & Hengeveld, B. (2018). Designing within connected systems. In Proceedings of the Conference on Designing Interactive Systems (Ext. Abs., pp. 407-410). New York, NY: ACM. https://doi.org/10.1145/3197391.3197400

- Funk, M., Hengeveld, B., Frens, J., & Rauterberg, M. (2013). Aesthetics and design for group music improvisation. In Proceedings of the 1st International Conference on Distributed, Ambient, and Pervasive Interactions (pp. 368-377). Berlin, Germany: Springer. https://doi.org/10.1007/978-3-642-39351-8_40

- Grekow, J. (2011). Emotion based music visualization system. In M. Kryszkiewicz, H. Rybinski, A. Skowron, & Z. W. Raś (Eds.), Proceedings of the 19th Symposium on Methodologies for Intelligent Systems: Foundations of Intelligent Systems (pp. 523-532). Berlin, Germany: Springer. https://doi.org/10.1007/978-3-642-21916-0_56

- Hähnel, T., & Berndt, A. (2011). Studying interdependencies in music performance: An interactive tool. In Proceedings of the International Conference on New Interfaces for Musical Expression (pp. 48-51). Oslo, Norway: NIME.

- Hansen, A.-M. S., Andersen, H. J., & Raudaskoski, P. (2012). How two players negotiate rhythm in a shared rhythm game. In Proceedings of the 7th Audio Mostly Conference: A Conference on Interaction with Sound (pp. 1-8). New York, NY: ACM. https://doi.org/10.1145/2371456.2371457

- Hengeveld, B. (2015). Composing interaction: Exploring tangible notation systems for design. In Proceedings of the 9th International Conference on Tangible, Embedded, and Embodied Interaction (pp. 667-672). New York, NY: ACM. https://doi.org/10.1145/2677199.2687915

- Hengeveld, B., Frens, J., & Funk, M. (2013). Investigating how to design for systems through designing a group music improvisation system. In Proceedings of the 5th International Congress of International Association of Societies of Design Research (pp. 1-12). Tokyo, Japan: IASDR.

- Hengeveld, B., Funk, M., & Doing, V. (2014). Beat my bass, pluck my drum. In Proceedings of the Conference on Designing Interactive Systems (Ext. Abs., pp. 49-52). New York, NY: ACM. https://doi.org/10.1145/2598784.2602779

- Hsu, W. (2007). Design issues in interaction modeling for free improvisation. In Proceedings of the 7th International Conference on New Interfaces for Musical Expression (pp. 367-370). New York, NY: ACM. https://doi.org/10.1145/1279740.1279821

- Jordà, S. (2005). Multi-user instruments: Models, examples and promises. In Proceedings of the International Conference on New Interfaces for Musical Expression (pp. 23-26). Vancouver, Canada: NIME.

- Jordà, S., Geiger, G., Alonso, M., & Kaltenbrunner, M. (2007). The reacTable: Exploring the synergy between live music performance and tabletop tangible interfaces. In Proceedings of the 1st International Conference on Tangible and Embedded Interaction (pp. 139-146). New York, NY: ACM. https://doi.org/10.1145/1226969.1226998

- Junggeburth, M., Giacolini, L., van Rooij, T., van Hout, B., Hengeveld, B., Funk, M., & Frens, J. (2013). Experio: A laser-triggered dance music generator. In Proceedings of the 8th International Conference on Design and Semantics of Form and Movement (pp. 170-174). Eindhoven, The Netherlands: Technische Universiteit Eindhoven.

- Juslin, P. N. (1997). Emotional communication in music performance: A functionalist perspective and some data. Music Perception: An Interdisciplinary Journal, 14(4), 383-418. https://doi.org/10.2307/40285731

- Kim-Boyle, D. (2009). Network musics: Play, engagement and the democratization of performance. Contemporary Music Review, 28(4-5), 363-375. https://doi.org/10.1080/07494460903422198

- Klügel, N., Frieß, M. R., Groh, G., & Echtler, F. (2011). An approach to collaborative music composition. In Proceedings of the International Conference on New Interfaces for Musical Expression (pp. 32-35). Oslo, Norway: NIME.

- Mui, C. (2016, March 4). Thinking big about the industrial internet of things. Forbes. Retrieved from https://www.forbes.com/sites/chunkamui/2016/03/04/thinking-big-about-industrial-iot/

- Ostos Rios, G. A., Funk, M., & Hengeveld, B. J. (2013). EMjam: Jam with your emotions. In Proceedings of the 8th International Conference on Design and Semantics of Form and Movement (pp. 128-136). Eindhoven, The Netherlands: Technische Universiteit Eindhoven.

- Peplowski, K. (1998). The process of improvisation. Organization Science, 9(5), 560-561. https://doi.org/10.1287/orsc.9.5.560

- Puzoń, B., & Kosugi, N. (2011). Extraction and visualization of the repetitive structure of music in acoustic data: Misual project. In Proceedings of the 13th International Conference on Information Integration and Web-Based Applications and Services (pp. 152-159). New York, NY: ACM. https://doi.org/10.1145/2095536.2095563

- Rasamimanana, N., Bevilacqua, F., Schnell, N., Guedy, F., Flety, E., Maestracci, C., Zamborlin, B., Frechin, J.-C., & Petrevski, U. (2011). Modular musical objects towards embodied control of digital music. In Proceedings of the 5th International Conference on Tangible, Embedded, and Embodied Interaction (pp. 9-12). New York, NY: ACM. https://doi.org/10.1145/1935701.1935704

- Rios, G. O., Funk, M., & Hengeveld, B. (2016). Designing for group music improvisation: A case for jamming with your emotions. International Journal of Arts and Technology, 9(4), 320-345. https://doi.org/10.1504/IJART.2016.081331

- Rowe, R. (1999). The aesthetics of interactive music systems. Contemporary Music Review, 18(3), 83-87. https://doi.org/10.1080/07494469900640361

- Ryan, A. (2014). A framework for systemic design. FormAkademisk - Forskningstidsskrift for Design og Designdidaktikk, 7(4). https://doi.org/10.7577/formakademisk.787

- Schiettecatte, B., & Vanderdonckt, J. (2008). AudioCubes: A distributed cube tangible interface based on interaction range for sound design. In Proceedings of the 2nd International Conference on Tangible and Embedded Interaction (pp. 3-10). New York, NY: ACM. https://doi.org/10.1145/1347390.1347394

- Stockholm, J., & Pasquier, P. (2008). Eavesdropping: Audience interaction in networked audio performance. In Proceedings of the 16th Conference on Multimedia (pp. 559-568). New York, NY: ACM. https://doi.org/10.1145/1459359.1459434

- Valbom, L., & Marcos, A. (2007). An immersive musical instrument prototype. IEEE Computer Graphics and Applications, 27(4), 14-19. https://doi.org/10.1109/MCG.2007.76

- Valone, J. (1985). Musical improvisation as interpretative activity. The Journal of Aesthetics and Art Criticism, 44(2), 193-194.

- Van Hout, B., Giacolini, L., Hengeveld, B., Funk, M., & Frens, J. (2014). Experio: A design for novel audience participation in club settings. In Proceedings of the 14th International Conference on New Interfaces for Musical Expression (pp. 46-49). London, UK: NIME.

- Weinberg, G., Aimi, R., & Jennings, K. (2002). The beatbug network: A rhythmic system for interdependent group collaboration. In Proceedings of the Conference on New Interfaces for Musical Expression (pp. 106-111). Dublin, Ireland: NIME.

- Wöldecke, B., Marinos, D., Pogscheba, P., Geiger, C., Herder, J., & Schwirten, T. (2011). RadarTHEREMIN - Creating musical expressions in a virtual studio environment. In Proceedings of the International Symposium on VR Innovation (pp. 345-346). New York, NY: IEEE. https://doi.org/10.1109/ISVRI.2011.5759671